What is ALO-LLM?

AI Logic Organizer for Large Language Models (ALO-LLM)

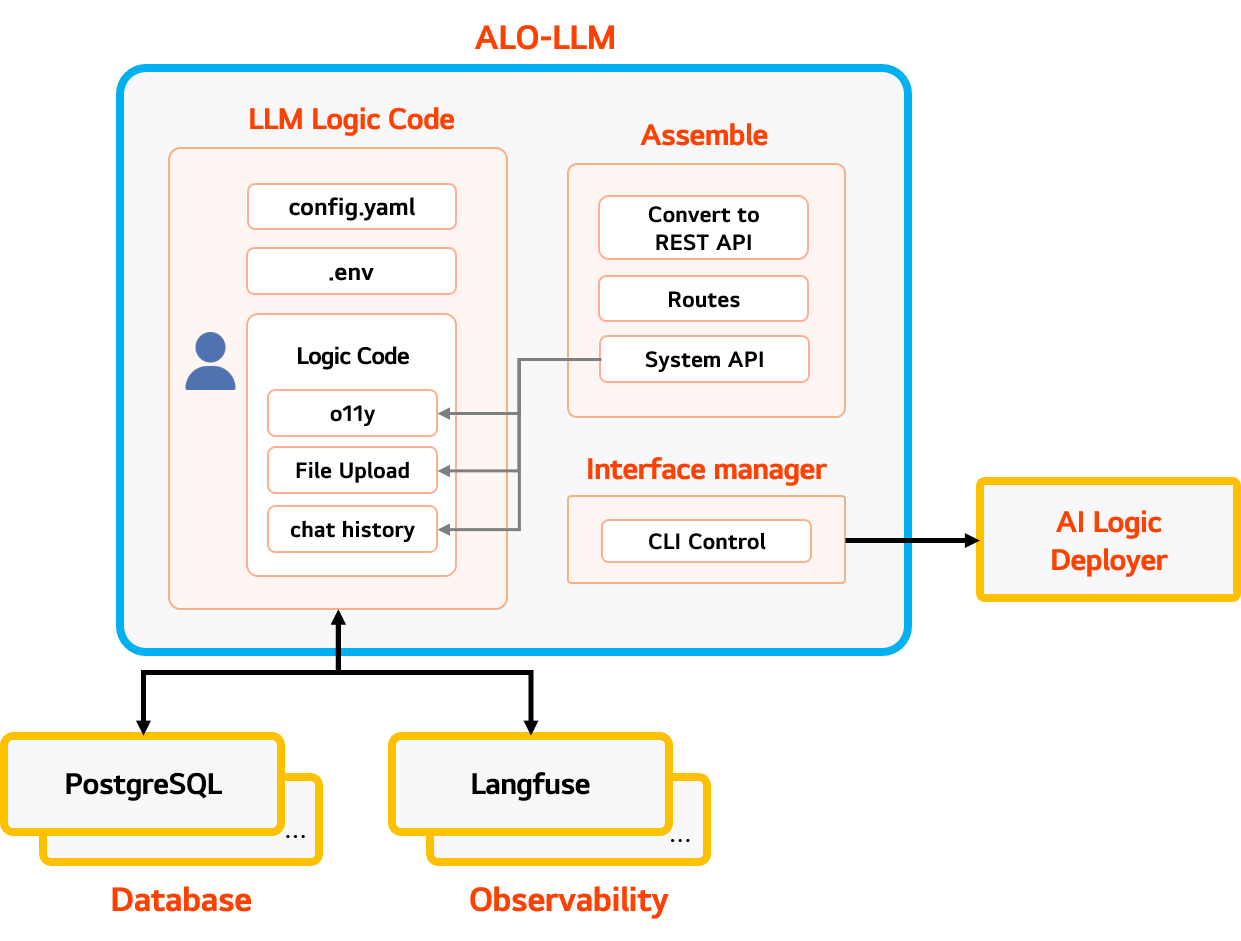

ALO-LLM (AI Logic Organizer for Large Language Models) is a development framework that assists in converting user-written logic code into a FastAPI format, registering it with LLMOps, and automatically building and operating the infrastructure environment. LLM logic developers can handle the entire process of Service API development, registration, and operation conveniently and efficiently without complex settings.

Standard development uses ALO-LLM. It follows predefined specifications and processes: the logic code is converted to FastAPI format and registered with LLMOps, and the infrastructure is built and operated automatically, without complex settings. Developers can develop and deploy APIs through the following steps:

- LLM Logic Code Writing: Write environment variables in the .env file and define the logic code using LangChain syntax.

- Service API Conversion: Install ALO-LLM and write a config.yaml file to define the necessary libraries and API mapping, then convert the logic code to FastAPI using a CLI command.

This approach significantly reduces development time and cost, increases stability, and allows for quick service deployment.
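The standard-development steps above can be pictured with a small, standard-library-only sketch. It is not ALO-LLM's implementation: `load_env`, `summarize`, `API_MAPPING`, and `dispatch` are hypothetical names chosen for illustration, the route path is invented, and the logic function is a plain-Python stand-in for what would be a LangChain chain in a real project. The actual config.yaml schema and the conversion CLI command are not shown here because the text above does not specify them.

```python
from typing import Callable, Dict


def load_env(text: str) -> Dict[str, str]:
    """Parse KEY=VALUE lines in the style of a .env file (simplified)."""
    env: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        # Skip blank lines, comments, and lines without an assignment.
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop surrounding quotes so "demo" and demo read the same.
        env[key.strip()] = value.strip().strip('"').strip("'")
    return env


def summarize(text: str, max_words: int = 5) -> dict:
    """Hypothetical logic function; a real one would call an LLM chain."""
    words = text.split()
    return {
        "summary": " ".join(words[:max_words]),
        "truncated": len(words) > max_words,
    }


# Hypothetical API mapping: route path -> logic function.
# This mirrors the idea of an "API mapping", not the real config.yaml format.
API_MAPPING: Dict[str, Callable[..., dict]] = {
    "/api/summarize": summarize,
}


def dispatch(path: str, payload: dict) -> dict:
    """Look up the handler for a path and call it with the request payload."""
    handler = API_MAPPING[path]
    return handler(**payload)


if __name__ == "__main__":
    settings = load_env('# sample settings\nMODEL_NAME="demo"\nTIMEOUT=30\n')
    print(settings)
    print(dispatch("/api/summarize", {"text": "a b c d e f g", "max_words": 3}))
```

The point of the sketch is the separation of concerns: settings live outside the code, the logic is an ordinary function, and a declarative mapping decides which function serves which endpoint. That separation is what lets a tool generate the web layer mechanically.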

Custom development is carried out without using ALO-LLM. It involves developing software tailored to specific customer requirements and business processes, allowing users to freely write code for their specific purposes. However, since it does not use the convenient features of ALO-LLM, developers need to perform all settings and management directly, following these steps:

- LLM Logic Code Writing: Users can freely write code but must define necessary library installation commands in the prerequisite_install.sh file.

- Writing the config.yaml file: Implement the logic in FastAPI format and write the AI Logic Deployer address and description in the config.yaml file.

This method provides developers with finer control and special features, but it takes more development time, is more costly, and can be more complex to maintain.

Through ALO-LLM, developers can focus solely on creating innovative LLM-based services without worrying about basic infrastructure.

Key Features

ALO-LLM provides the following core functionalities:

- Simplified Development: ALO-LLM simplifies the process of developing and deploying LLM-based services.

- Automated API Generation: Automatically converts user-written logic code into a FastAPI service.

- Integrated Deployment: Provides a streamlined process for registering and deploying services to the AI Logic Deployer.

- CLI for Easy Management: Offers a command-line interface (CLI) to manage the entire lifecycle of a Service API.

User Scenario

A typical ALO-LLM user scenario includes:

- Installation and Setup: The developer installs ALO-LLM in their local environment.

- Develop LLM Logic: The user develops the LLM logic code.

- Configure for ALO-LLM: The user creates a config.yaml file to define the service API.

- Local Testing: The user tests the service API locally using the alm api command.

- Register and Deploy: The user registers and deploys the service API to the AI Logic Deployer using the alm register and alm deploy commands.
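As a rough illustration of the scenario's command order, the sketch below lists the three CLI calls named above and prints them in sequence. Only the subcommand names `alm api`, `alm register`, and `alm deploy` come from the text; no flags or arguments are shown because none are documented here. `LIFECYCLE` and `run_lifecycle` are hypothetical helper names. The default is a dry run, since `alm api` may well keep a local server running until stopped (an assumption), in which case the commands would be run by hand in separate steps rather than chained.

```python
import shutil
import subprocess
from typing import List

# Subcommands in the order the scenario lists them.
# No flags are included because the scenario names none.
LIFECYCLE: List[List[str]] = [
    ["alm", "api"],       # local testing
    ["alm", "register"],  # register with the AI Logic Deployer
    ["alm", "deploy"],    # deploy the registered service
]


def run_lifecycle(dry_run: bool = True) -> List[str]:
    """Print the planned commands, or execute them when dry_run is False."""
    planned = [" ".join(argv) for argv in LIFECYCLE]
    if dry_run:
        for line in planned:
            print(f"[dry-run] {line}")
        return planned
    # Fail early with a clear message if the CLI is not installed.
    if shutil.which("alm") is None:
        raise RuntimeError("the 'alm' command was not found on PATH")
    for argv in LIFECYCLE:
        # check=True stops the sequence at the first failing step.
        subprocess.run(argv, check=True)
    return planned


if __name__ == "__main__":
    run_lifecycle()
```

Stopping at the first failure matters here because registering or deploying a service that did not pass local testing would defeat the purpose of the test step.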