ALO

Mellerikat AI Learning Organizer (ALO) is a core development framework that supports the efficient development and continuous operation of high-quality ML/LLM services. ALO lets developers focus on service logic rather than on the surrounding technical processes. AI solutions and service APIs developed with ALO are managed within Mellerikat’s MLOps/LLMOps environment, which gives them access to a wide range of operational features.

ML/LLM Service Framework

Standardized ML/LLM Service Development Framework

ALO provides a standardized framework for developing ML and LLM services, enhancing both development efficiency and service quality.

Consistent Development Environment and Code Quality: ALO provides standardized coding conventions and formatting, which keeps code quality consistent and simplifies service maintenance. This improves development efficiency and collaboration across teams.

Increased Development Focus: ALO provides the essential Ops functionality for operating ML/LLM services, so developers can concentrate on the service logic of their ML/LLM applications.

Synchronized and Reliable Operating Environment: ALO-ML containerizes AI solutions to minimize discrepancies between development and production environments. This keeps model performance consistent and supports predictable, stable AI service operation. Likewise, ALO-LLM services run in a containerized environment based on AI Logic Deployer, which supports seamless deployment and management.

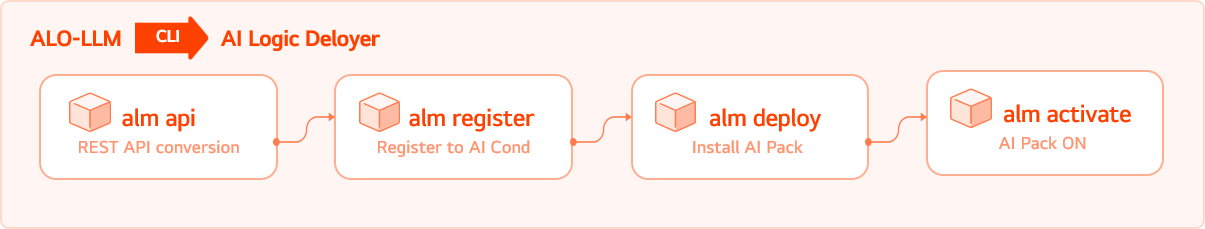

Easy and Fast Control via CLI Commands: Both ALO-ML and ALO-LLM provide a command-line interface (CLI). Core operations, such as converting code into the ALO-ML format or into a REST API, are run through CLI commands, helping developers get started quickly with full-scale MLOps/LLMOps workflows.

Simple ALO Package Download and Installation: ALO can be installed with pip or uv in Windows or Linux development environments.

Integration with AI Conductor: AI solutions and service APIs developed with ALO can be registered with AI Conductor for version-controlled management, supporting continuous MLOps/LLMOps operation.

ALO-LLM

LLM Service API Development and Simplified Operation

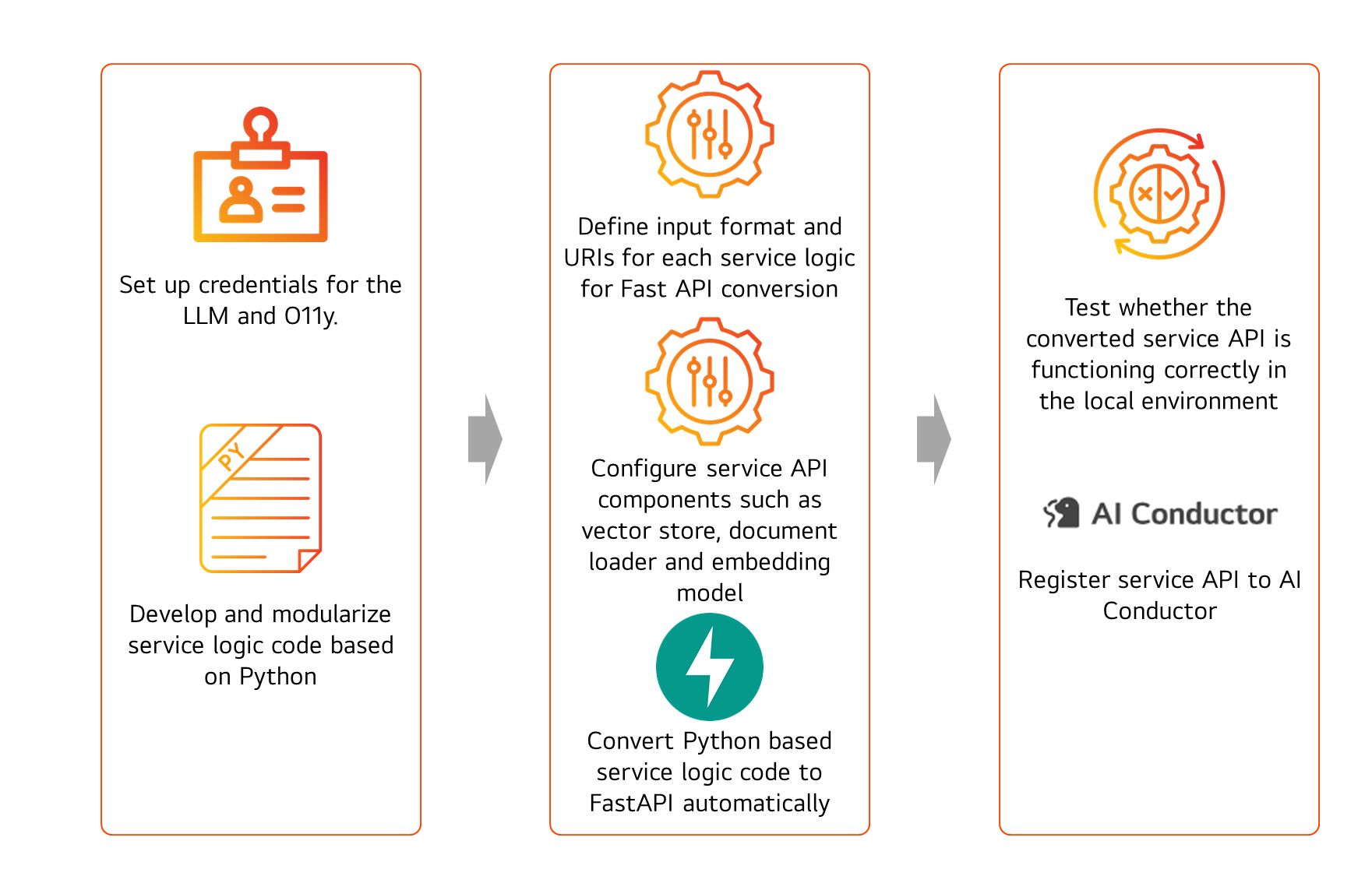

ALO-LLM is an optimized framework for LLMOps, designed to simplify the development and packaging of LLM Service APIs and support seamless service operation through AI Logic Deployer. The ALO-LLM package can be easily downloaded and installed via pip in any preferred Windows or Linux development environment. Developers can freely implement LLM service logic within the ALO-LLM framework, and intuitive CLI commands are provided to easily convert the logic into a REST API format. The converted Service API, once authenticated and registered as an AI Solution, can fully utilize the various features provided by the LLMOps environment.

ALO-LLM Demo

Automatic REST API Conversion Support

LLM services must be exposed in a callable form so that other systems and users can connect to them. REST is one of the most widely used interface styles and works consistently across web, app, and internal services. ALO-LLM automatically converts service logic into the REST API required for operation, so developers can focus on the logic itself. Developers can build and deploy LLM services without specialized knowledge of REST APIs.
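To make the idea of wrapping service logic in a REST endpoint concrete, here is a minimal sketch using only the Python standard library. It is not ALO-LLM's actual conversion mechanism or API; the names `answer`, `handle_json`, and `make_handler` are hypothetical and exist only for illustration.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Callable, Tuple


def answer(question: str) -> dict:
    """Hypothetical service logic: a stand-in for a real LLM call."""
    return {"answer": f"You asked: {question}"}


def handle_json(logic: Callable, raw_body: bytes) -> Tuple[int, bytes]:
    """Decode a JSON request body, call the logic with its fields, encode the result."""
    try:
        kwargs = json.loads(raw_body or b"{}")
        result = logic(**kwargs)
        return 200, json.dumps(result).encode("utf-8")
    except (ValueError, TypeError) as exc:
        # Malformed JSON or wrong/missing arguments are reported as a client error.
        return 400, json.dumps({"error": str(exc)}).encode("utf-8")


def make_handler(logic: Callable):
    """Build a request handler class that exposes `logic` over HTTP POST."""

    class Handler(BaseHTTPRequestHandler):
        def do_POST(self):
            length = int(self.headers.get("Content-Length", 0))
            status, payload = handle_json(logic, self.rfile.read(length))
            self.send_response(status)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(payload)))
            self.end_headers()
            self.wfile.write(payload)

    return Handler


# To serve the hypothetical logic locally (this call blocks until interrupted):
# HTTPServer(("127.0.0.1", 8000), make_handler(answer)).serve_forever()
```

The service logic stays a plain function, and the HTTP layer is generated around it. That separation is what lets a framework automate the conversion step.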

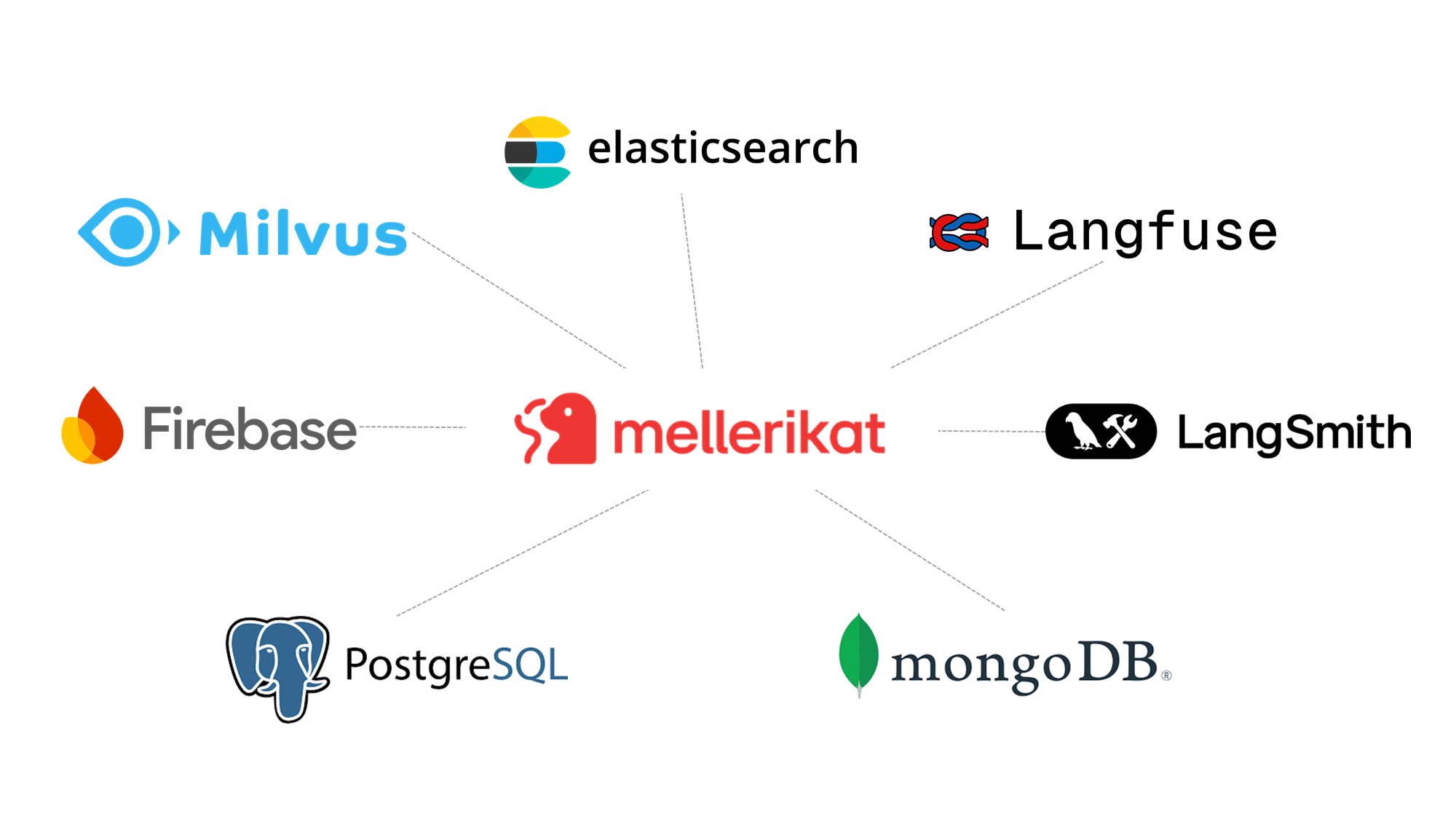

Integration Support with Databases and Observability Tools

ALO-LLM supports integration with databases (DB) that store the data required by service logic, as well as with observability tools designed to improve service performance and reliability. This enables efficient data extraction and analysis, collection of user feedback, and real-time monitoring. Through this integration, developers can transparently track response quality, processing speed, and cost while continuously improving the performance of AI services.
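As a rough illustration of what tracking processing speed and failures can look like at the code level, the sketch below records the latency and outcome of each call with a decorator. It is an assumption-laden example, not an ALO-LLM interface: `Tracker`, `CallRecord`, and `generate_reply` are hypothetical names, and a real deployment would send these records to an observability tool rather than keep them in memory.

```python
import time
from dataclasses import dataclass, field
from functools import wraps
from typing import Callable, List


@dataclass
class CallRecord:
    name: str         # name of the observed function
    latency_s: float  # wall-clock duration of the call in seconds
    ok: bool          # False if the call raised an exception


@dataclass
class Tracker:
    records: List[CallRecord] = field(default_factory=list)

    def observe(self, func: Callable) -> Callable:
        """Decorator that records latency and success for every call."""

        @wraps(func)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            ok = False
            try:
                result = func(*args, **kwargs)
                ok = True
                return result
            finally:
                # Runs on both success and failure, so errors are counted too.
                elapsed = time.perf_counter() - start
                self.records.append(CallRecord(func.__name__, elapsed, ok))

        return wrapper

    def error_rate(self) -> float:
        """Fraction of recorded calls that raised an exception."""
        if not self.records:
            return 0.0
        return sum(not r.ok for r in self.records) / len(self.records)


tracker = Tracker()


@tracker.observe
def generate_reply(prompt: str) -> str:
    """Hypothetical service logic; rejects empty prompts."""
    if not prompt:
        raise ValueError("empty prompt")
    return prompt.upper()
```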

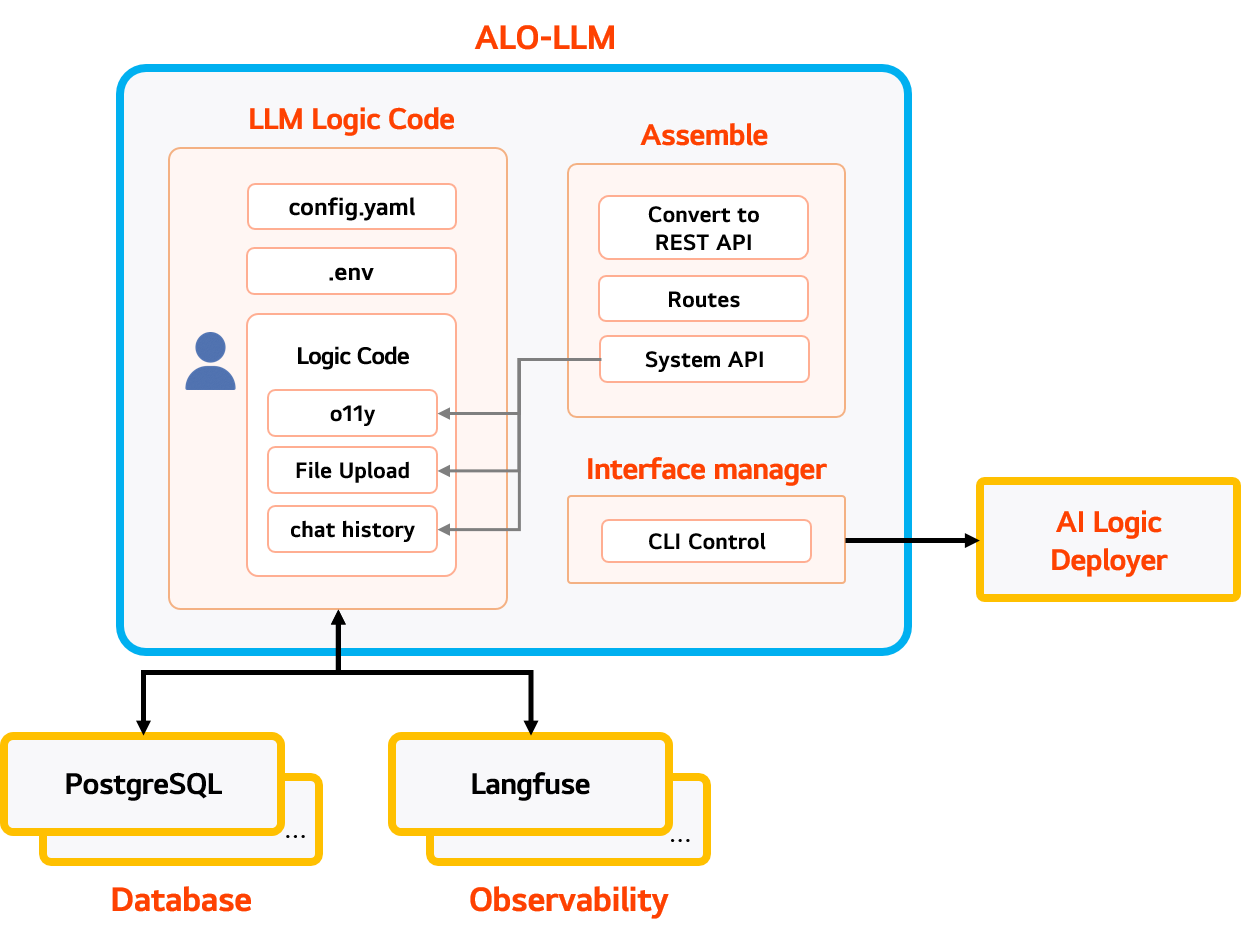

ALO-LLM Architecture

Optimized System for LLMOps Integration

Users can download the ALO-LLM package to develop logic code for LLM services. For scalable and continuously evolving LLM services, integration with databases and observability tools is essential. ALO-LLM offers easy integration with various databases and observability (o11y) tools, as well as system APIs for functions such as file upload. Code developed within the ALO-LLM framework is automatically converted into REST API format for LLM services. Developers do not need to learn REST API concepts; the conversion is done with CLI commands provided by ALO-LLM. The converted Service API is authenticated and registered as an AI Solution, which enables deployment to production environments through AI Logic Deployer and access to various LLMOps features.

ALO-ML

Optimization of ML Model Development and Operation

ALO-ML is an optimized framework for converting ML algorithm code into deployable AI solutions and enabling efficient MLOps-based service operation. The ALO-ML package can be downloaded and installed via pip in a Windows or Linux development environment. Developers can freely implement ML service logic within the ALO-ML framework, and CLI commands are provided to convert that logic into the ALO-ML format. Once converted, the ALO-ML AI solution is authenticated and registered, making it possible to use a wide range of MLOps functionality.

ALO-ML Demo

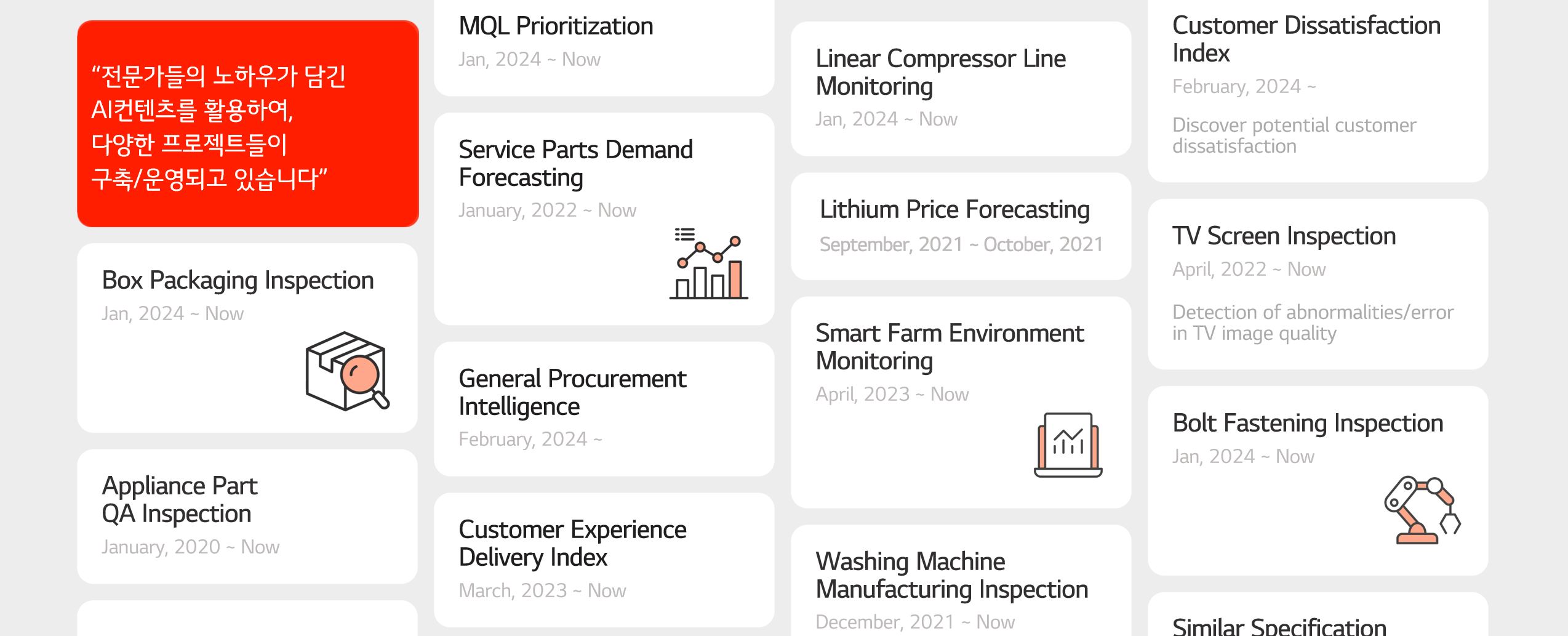

Utilization of Industry-Proven AI Content

Mellerikat provides AI content that has been technically validated and proven effective across various industrial sectors. This content is designed to be compatible with the ALO-ML framework, which makes it easy to scale and operate as a service. Users can download the verified content, make minimal modifications to suit their specific problem, and quickly develop and register AI solutions.

Continuous Model Performance Improvement

ALO-ML provides functionality to continuously monitor AI model performance and detect performance degradation, enabling timely model updates. New datasets can be generated from inference results collected through Edge Conductor. Re-labeling this data yields high-quality training datasets, and retraining on them improves the model’s performance.
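One simple way to detect performance degradation is to compare accuracy over a recent window of labeled inference results against a baseline. The sketch below illustrates that idea only; it is not ALO-ML's monitoring implementation, and `DegradationMonitor`, its parameters, and the default thresholds are hypothetical.

```python
from collections import deque


class DegradationMonitor:
    """Flags degradation when rolling accuracy falls below baseline minus tolerance."""

    def __init__(self, baseline_accuracy: float, window: int = 100, tolerance: float = 0.05):
        self.baseline_accuracy = baseline_accuracy
        self.tolerance = tolerance
        # A bounded deque keeps only the most recent `window` outcomes.
        self._hits = deque(maxlen=window)

    def rolling_accuracy(self) -> float:
        """Accuracy over the outcomes currently in the window (0.0 if empty)."""
        return sum(self._hits) / len(self._hits) if self._hits else 0.0

    def update(self, prediction, label) -> bool:
        """Record one labeled result; return True if the model looks degraded."""
        self._hits.append(prediction == label)
        if len(self._hits) < self._hits.maxlen:
            return False  # not enough evidence until the window is full
        return self.rolling_accuracy() < self.baseline_accuracy - self.tolerance
```

A `True` result would be the trigger to collect and re-label recent data and retrain, as described above.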

Efficient AI Model Training and Deployment

AI solutions registered through the ALO-ML framework can be seamlessly trained and deployed using Mellerikat’s MLOps solution, which provides a web-based UI. This allows users to efficiently train, deploy, and monitor AI models without dealing with complex processes, streamlining the entire AI lifecycle.

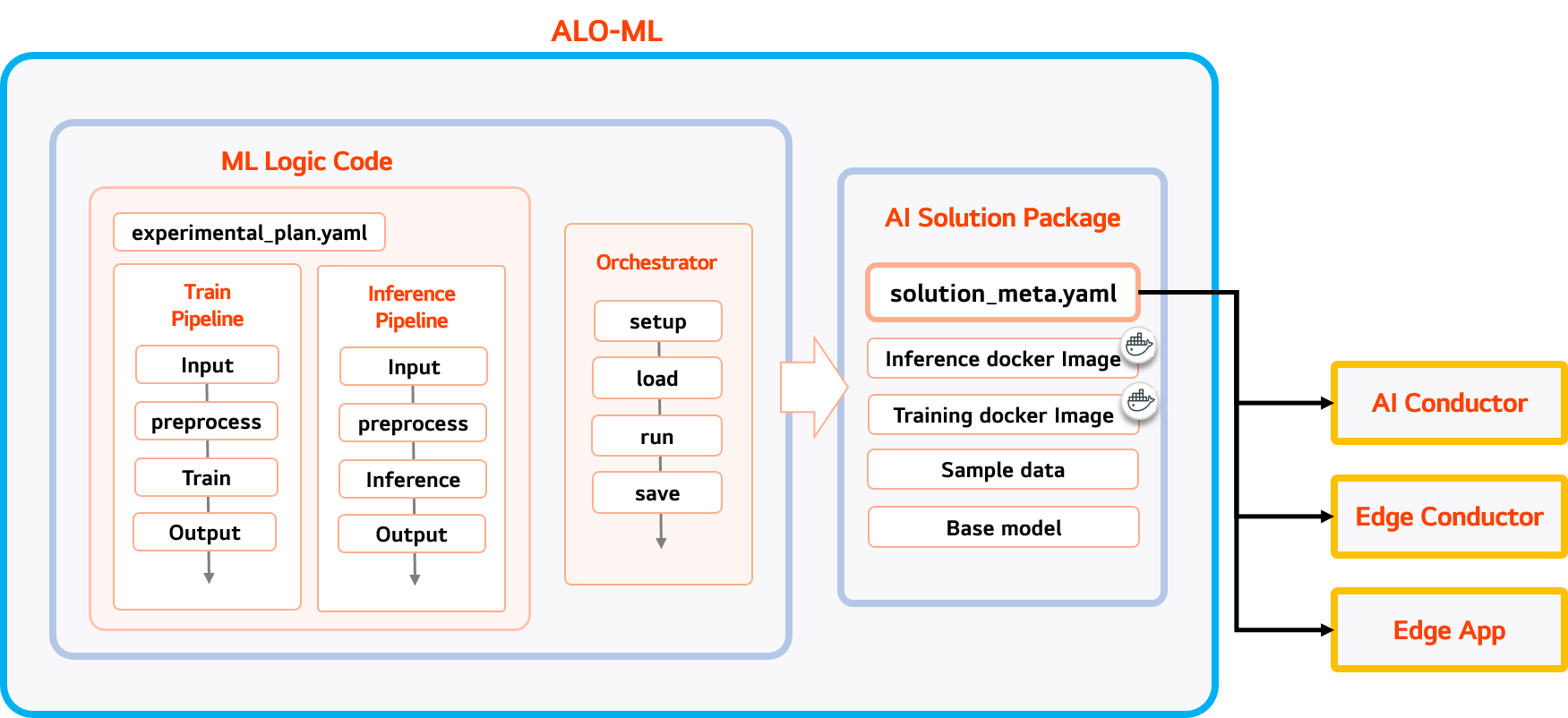

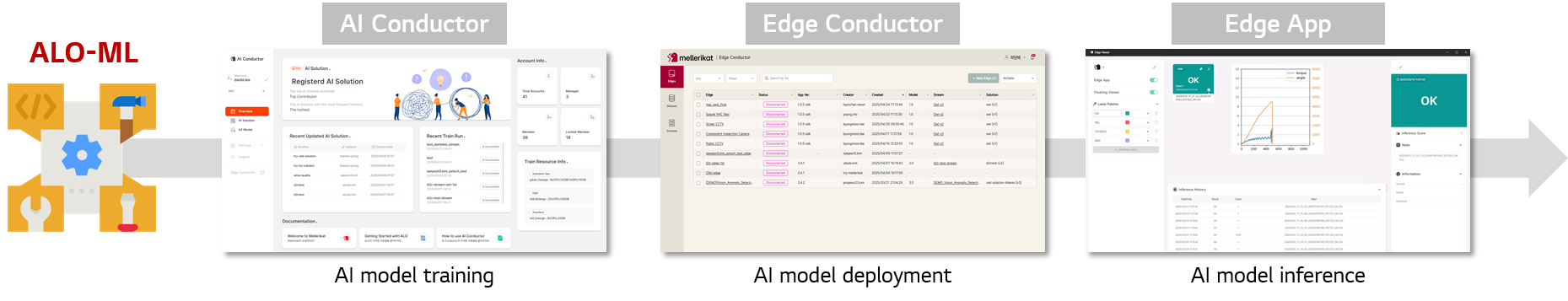

ALO-ML Architecture

Optimized System for MLOps Integration

Users can download the ALO-ML package to develop Train and Inference Pipeline code for ML services. An ML-powered service needs both a training pipeline, which fits the model, and an inference pipeline, which applies the trained model. The code written by the user is automatically converted into the ALO-ML format, which is compatible with MLOps. The resulting AI Solution Package can then be integrated with MLOps components such as AI Conductor, Edge Conductor, and Edge App, enabling users to take full advantage of the available MLOps functionality.
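The split between a training pipeline and an inference pipeline can be sketched in a few lines of standard-library Python. This is a toy illustration, not the ALO-ML format or API: the function names `train` and `inference`, the JSON model file, and the one-feature threshold classifier are all assumptions chosen to keep the example self-contained.

```python
import json
from pathlib import Path
from statistics import mean
from typing import List, Sequence


def train(samples: Sequence[float], labels: Sequence[int], model_path: str) -> dict:
    """Training pipeline: learn a threshold midway between the class means and save it.

    Assumes binary labels (0 or 1) and that class 1 tends to have larger values.
    """
    positives = [x for x, y in zip(samples, labels) if y == 1]
    negatives = [x for x, y in zip(samples, labels) if y == 0]
    model = {"threshold": (mean(positives) + mean(negatives)) / 2}
    Path(model_path).write_text(json.dumps(model))
    return model


def inference(samples: Sequence[float], model_path: str) -> List[int]:
    """Inference pipeline: load the saved model and classify new samples."""
    model = json.loads(Path(model_path).read_text())
    return [int(x > model["threshold"]) for x in samples]
```

The two functions share only the saved model artifact. That is why the pipelines can run at different times and in different places, for example training centrally and inference at the edge.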