EVA

EVA acts as a brain that connects with the entire physical world of industries, understanding and making autonomous decisions.

Through an intelligent flow from sensing to control, EVA transforms the physical world.

EVA Key Features

Six Core Capabilities of EVA

Natural Language–Driven Intelligent Interface

Users describe their requirements in everyday language, and EVA automatically transforms them into protocols optimized for AI inference. Even without complex configurations, users can precisely define and analyze real-world environments.

TCO Reduction through GPU Resource Efficiency

Powered by Multi-Foundation Model optimization, EVA processes significantly more data with the same GPU resources, ensuring cost efficiency even in large-scale enterprise environments.

Multi-Cloud & On-Premises Deployment

From multi-cloud SaaS environments to fully isolated on-premises networks, EVA provides a flexible deployment architecture tailored to enterprise needs, enabling rapid scaling without infrastructure constraints.

Instant Integration & Data Orchestration

Existing cameras are connected simply by registering an RTSP address, while analysis results are delivered through standardized Webhook interfaces, enabling seamless integration with various systems in real time.
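As a rough illustration of the receiving side of such a Webhook, the sketch below parses a JSON payload into a typed event. The payload fields (`camera_id`, `scenario`, `detected`) and the `parse_webhook` helper are assumptions made for this example, not EVA's documented schema; consult the Manual for the actual format.

```python
import json
from dataclasses import dataclass


@dataclass
class DetectionEvent:
    camera_id: str
    scenario: str
    detected: bool


def parse_webhook(body: bytes) -> DetectionEvent:
    """Parse a JSON webhook body into a DetectionEvent.

    The field names are hypothetical placeholders, not EVA's real schema.
    """
    data = json.loads(body.decode("utf-8"))
    return DetectionEvent(
        camera_id=str(data["camera_id"]),
        scenario=str(data["scenario"]),
        detected=bool(data["detected"]),
    )


# Example payload, invented for illustration only.
sample = b'{"camera_id": "dock-01", "scenario": "person in restricted zone", "detected": true}'
event = parse_webhook(sample)
print(event.camera_id, event.scenario, event.detected)
```

A downstream system would typically call a function like this inside its HTTP handler and then route the event to its own alerting or logging logic.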

Continuous Performance Evolution via User Feedback

From detection area configuration and Image Guided Detection to false-positive feedback, EVA continuously learns through direct user interaction and evolves into an AI optimized for each site.

Industry-Specific Vertical AI Composition

Specialized AI models for manufacturing, logistics, security, and more can be modularly combined to quickly build scenario-driven Vertical AI solutions tailored to each industry.

Seamless Connection to the Physical World

Enter a URL and create a scenario

Simply enter a streaming URL (RTSP or HTTP) and write a detection scenario, and the camera instantly becomes an AI-powered camera.

With simple sensor registration, EVA connects effortlessly to complex physical environments.
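The registration step can be pictured as pairing a stream URL with a plain-language scenario. The sketch below validates the URL scheme and builds a registration record; the `build_camera_registration` function and the record's field names are hypothetical and only illustrate the idea, since EVA's actual registration is done through its own interface.

```python
from urllib.parse import urlparse

# RTSP and HTTP come from the text above; accepting HTTPS is an assumption.
ALLOWED_SCHEMES = {"rtsp", "http", "https"}


def build_camera_registration(name: str, stream_url: str, scenario: str) -> dict:
    """Validate inputs and return a hypothetical registration record."""
    parsed = urlparse(stream_url)
    if parsed.scheme not in ALLOWED_SCHEMES:
        raise ValueError(f"unsupported stream scheme: {parsed.scheme!r}")
    if not parsed.hostname:
        raise ValueError("stream URL must include a host")
    if not scenario.strip():
        raise ValueError("scenario description must not be empty")
    return {
        "name": name,
        "stream_url": stream_url,
        "scenario": scenario.strip(),
    }


registration = build_camera_registration(
    "loading-dock",
    "rtsp://192.0.2.10:554/stream1",  # documentation-range IP, not a real camera
    "Alert when a person enters the loading dock after hours",
)
print(registration)
```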

Video showing instant AI activation by registering a camera in EVA

Enhanced Intent with Scenario Intelligence

Create your goals simply as “scenarios”

EVA precisely interprets user intent through scenario-based configuration, improving efficiency and detection accuracy.

Video showing scenario-driven intent enhancement

A Physical AI Platform for All Industries

EVA — The brain linking understanding to action in the real world

EVA acts as an autonomous brain connected to every physical environment in industry.

Through a continuous flow of sensing to control, it transforms the physical world.

Check out various use cases on the Usecase page.

Video showing multi-domain scenario detection and alerting

Simple Field Customization

Region-Based Detection

With a simple mouse drag, users can set a Region of Interest (ROI).

EVA focuses detection only inside the selected area, eliminating unnecessary noise and drastically reducing false alarms.
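One simple way to think about region-based filtering is to keep only detections whose center falls inside the dragged rectangle. The sketch below shows that idea under stated assumptions: the `Box` type, the center-point rule, and the helper names are illustrative choices, and EVA's internal ROI logic may differ.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Box:
    x1: float
    y1: float
    x2: float
    y2: float

    def center(self) -> Tuple[float, float]:
        return ((self.x1 + self.x2) / 2, (self.y1 + self.y2) / 2)


def roi_from_drag(start: Tuple[float, float], end: Tuple[float, float]) -> Box:
    """Normalize a mouse drag (any direction) into a top-left/bottom-right box."""
    (sx, sy), (ex, ey) = start, end
    return Box(min(sx, ex), min(sy, ey), max(sx, ex), max(sy, ey))


def inside_roi(detections: List[Box], roi: Box) -> List[Box]:
    """Keep detections whose center point lies inside the ROI."""
    kept = []
    for det in detections:
        cx, cy = det.center()
        if roi.x1 <= cx <= roi.x2 and roi.y1 <= cy <= roi.y2:
            kept.append(det)
    return kept


# Drag from bottom-right to top-left; coordinates are in pixels.
roi = roi_from_drag((400, 300), (100, 50))
detections = [Box(120, 60, 180, 140), Box(500, 320, 560, 400)]
kept = inside_roi(detections, roi)
print(kept)
```

Detections outside the region are dropped before any alert logic runs, which is how restricting the area can cut down on irrelevant alarms.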

Image-Guided Detection for Domain-Specific Object Recognition

Easily detect user-defined objects without complex coding or data collection.

With an intuitive UI/UX, simply specify the target object and EVA automatically customizes the vision model to fit your needs.

AI that Evolves with Feedback

EVA continuously improves detection by learning from user feedback.

It evolves to make more accurate decisions in similar future situations, minimizing false alarms and becoming fully optimized for each site.

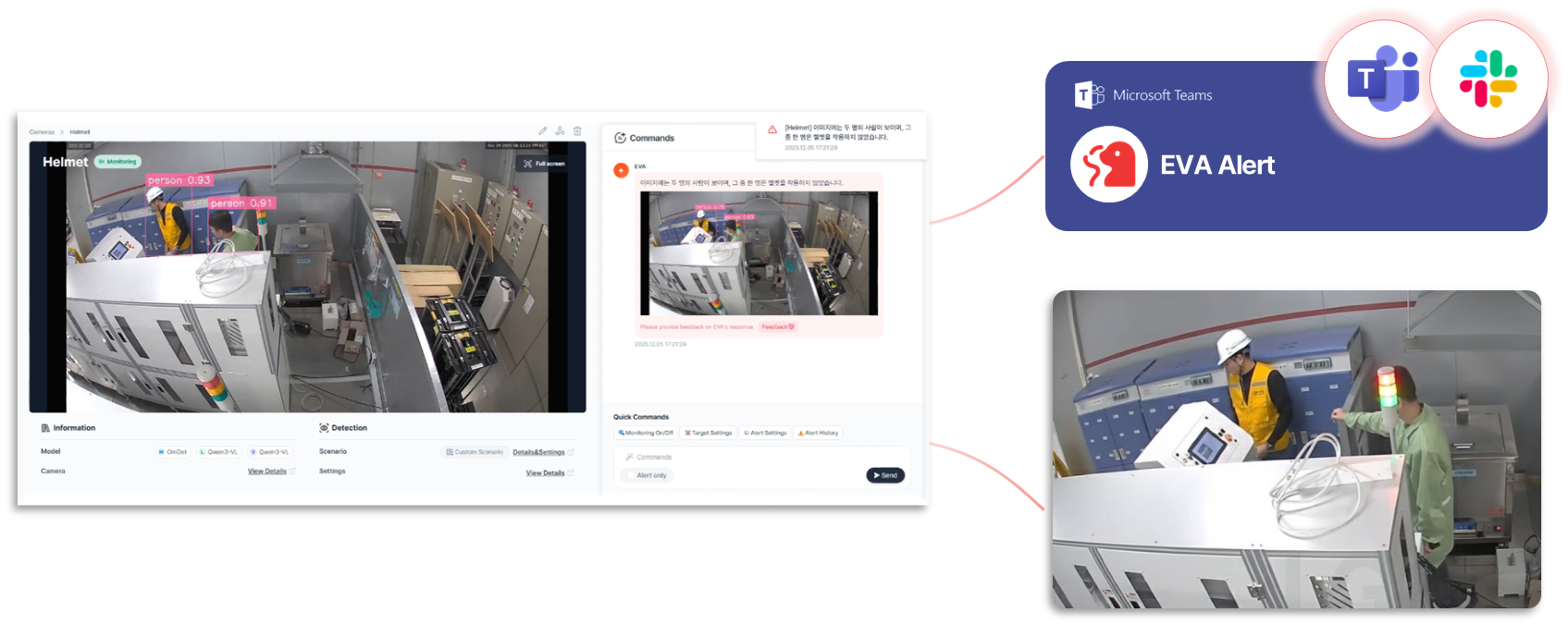

Beyond Detection, Toward Action

A complete closed loop that changes the real world

EVA understands the physical world and immediately applies results back into reality, enabling full intelligent closed-loop control.

It not only sends alerts through various communication channels but also connects directly with physical devices such as lights, gates, and robots to control environments in real time.

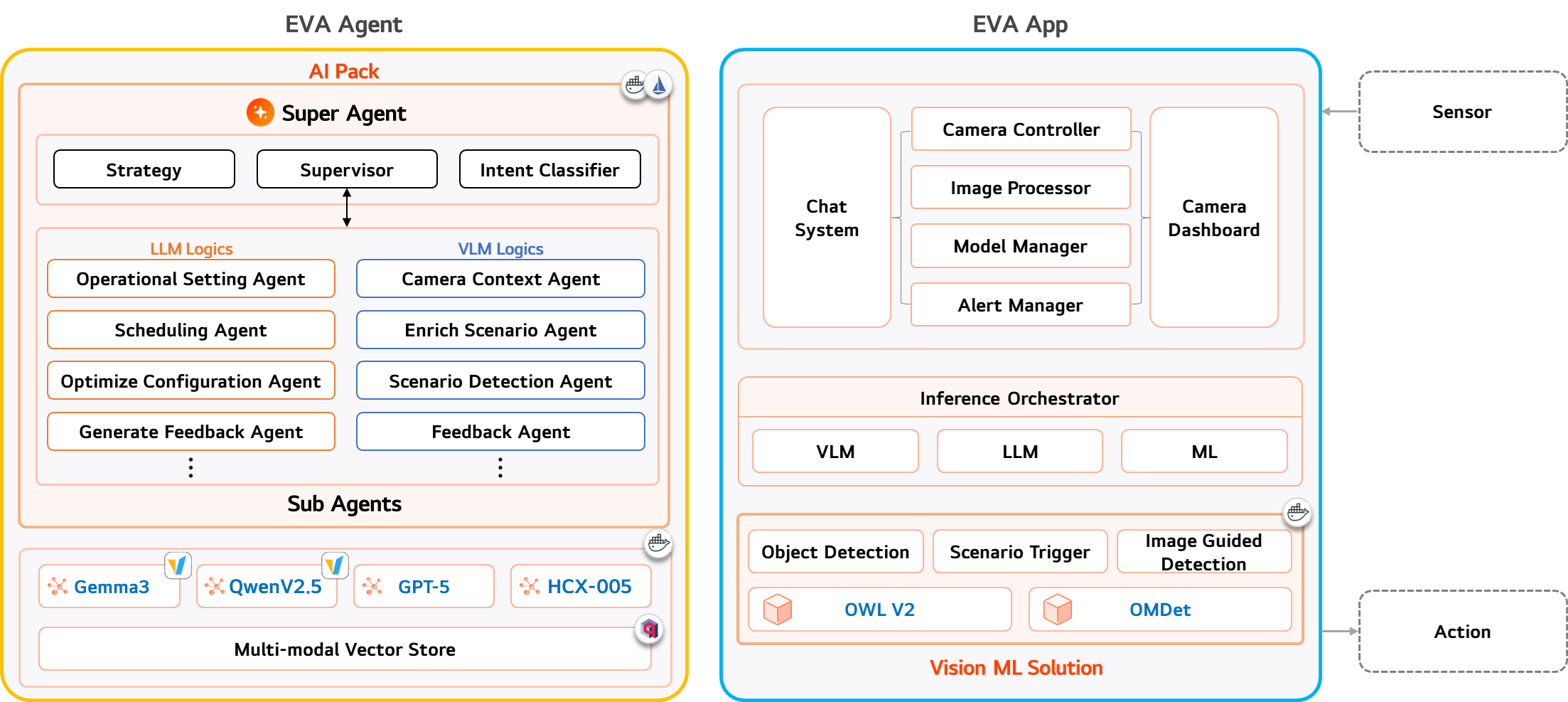
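The closed loop described above can be pictured as a mapping from detected scenarios to one or more actions, such as sending an alert and operating a device. The sketch below is a minimal illustration; the scenario names, the action functions, and the `ACTIONS` table are all hypothetical, and real device control would go through whatever integrations EVA provides.

```python
from typing import Callable, Dict, List

# An action takes the camera ID that triggered it and returns a status message.
Action = Callable[[str], str]


def send_alert(camera_id: str) -> str:
    return f"alert sent for {camera_id}"


def close_gate(camera_id: str) -> str:
    # Placeholder for a real device call; here it only reports what it would do.
    return f"gate closed near {camera_id}"


# Hypothetical mapping from scenario name to the actions it should trigger.
ACTIONS: Dict[str, List[Action]] = {
    "intrusion": [send_alert, close_gate],
    "spill": [send_alert],
}


def handle_detection(scenario: str, camera_id: str) -> List[str]:
    """Run every action registered for the scenario, in order."""
    return [action(camera_id) for action in ACTIONS.get(scenario, [])]


results = handle_detection("intrusion", "cam-1")
print(results)
```

Keeping the mapping in a table makes it easy to add a new device response without touching the detection logic.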

Service Architecture

Efficient and Powerful System Design

EVA is a platform built upon a multi-intelligence structure that integrates various advanced foundation models.

Vision-Language Models (VLM), Large Language Models (LLM), and Vision Foundation Models operate independently yet complementarily.

With high scalability, users can flexibly choose and combine the optimal AI stack for their environment.

A Unified Flow of Sensing → Understanding → Control

EVA App connects with the physical world to recognize environments in real time,

EVA Agent interprets with optimal foundation models to deliver deep understanding,

and decisions are immediately executed through device-level control.

This converts industrial sites, cities, and facilities into fully autonomous Physical AI systems.

Learn More

EVA is deployed in collaboration with various partners. Explore real-world applications and up-to-date documentation below.

Case Study & Usecase

Discover real implementation benefits at Why EVA.

Partnership

We collaborate with industry leaders including Cisco, Naver Cloud, Megazone, and more.

Documents

Check the Manual and Release Notes for details and updates.