Mellerikat LLMOps

Mellerikat LLMOps is a tool for deploying and operating LLM-based services in the cloud without handling the infrastructure details by hand. It automates the many steps required to deploy services built on large language models (LLMs), so developers can deploy, operate, and improve AI Agent services with less effort.

Listen to the podcast below for more details about Mellerikat LLMOps.

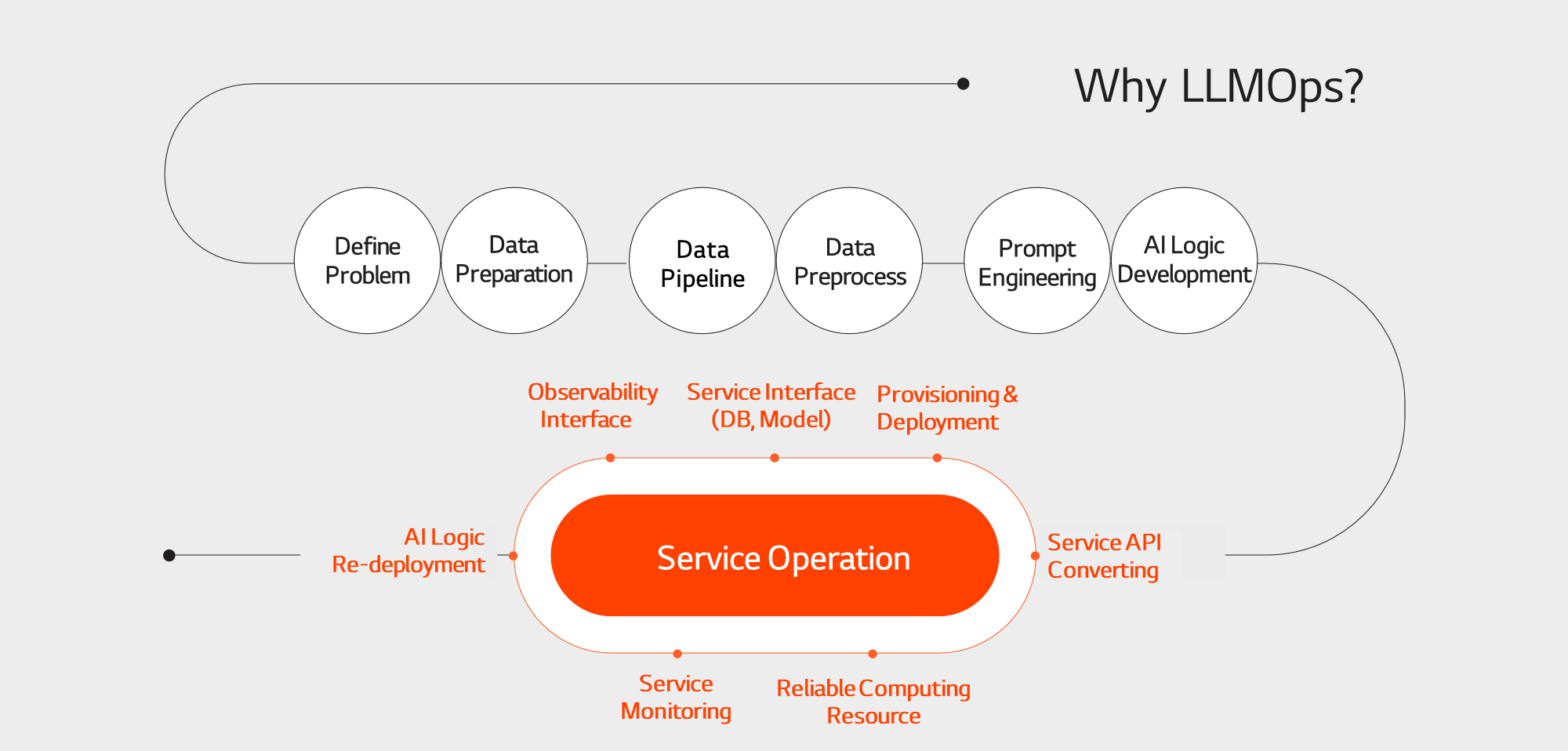

Why LLMOps?

Why Sustainable LLM Service Operation is Difficult

Running an LLM service locally is simple. Operating an LLM-based service in production is not: it involves many additional steps.

For example, you need to containerize the application, integrate it with databases and other services, set up monitoring, configure networking and security, and write deployment scripts.

Repeating these steps for every service takes considerable time and effort, and the duplicated work adds up across the organization.

LLMOps is a solution that addresses various technical challenges in the development and operation of LLM-based services, allowing developers to focus on more creative and productive tasks.

Service Architecture

LLMOps for Easy Development and Sustainable Operation of High-Quality LLM Services

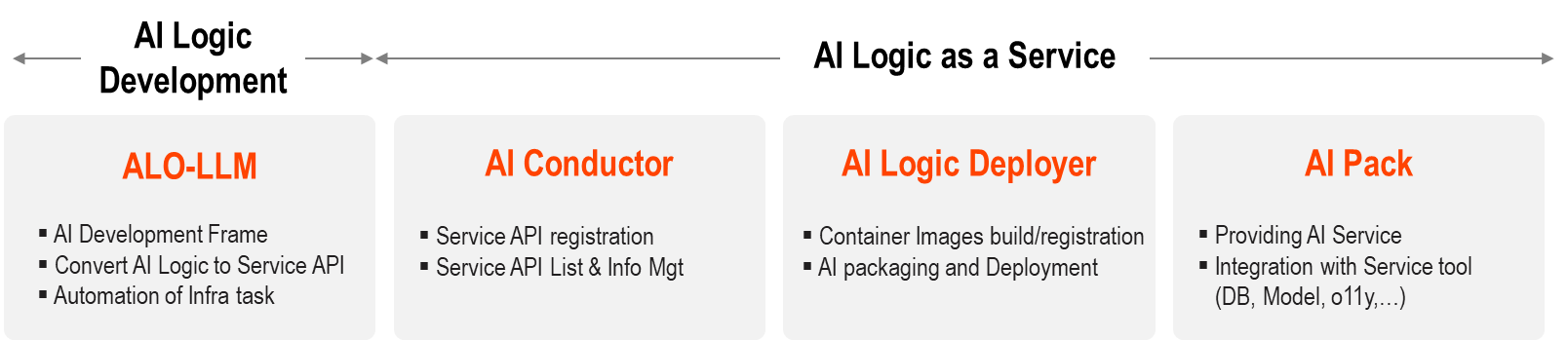

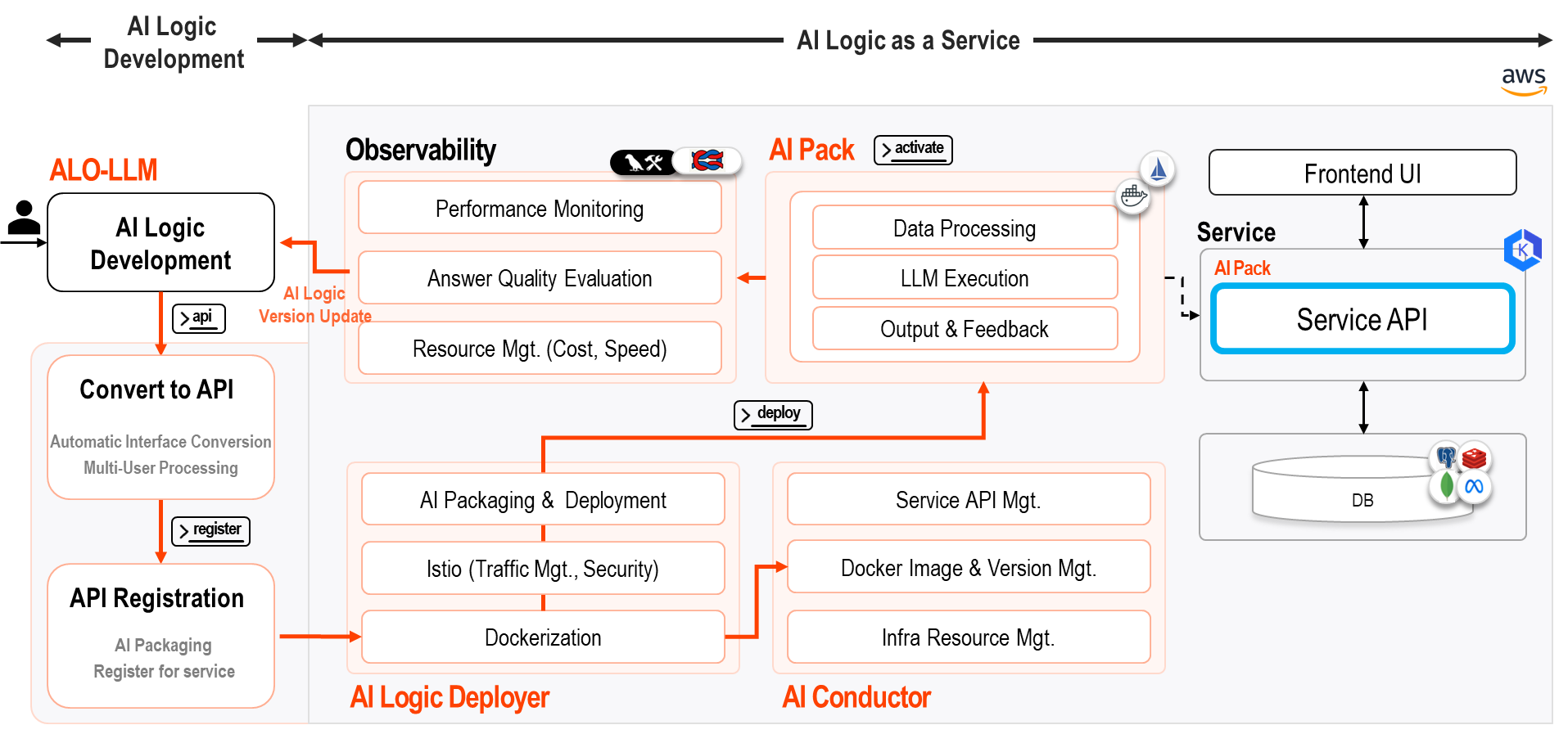

Mellerikat LLMOps is a solution composed of four core elements: AI Learning Organizer (ALO), AI Conductor, AI Logic Deployer, and AI Pack.

Each element provides the features needed at its stage, from API development through continuous operation, so that users do not have to solve these problems themselves.

The solution also integrates with observability tools, so feedback collected while the LLM service is running can be used to improve it continuously.

AI Logic Development

ALO-LLM: A Standardized LLM Development Tool for Easy and Simple Development

The standardized development tool ALO-LLM helps developers easily build high-quality LLM services.

This tool provides consistent coding standards and formats to maintain code quality and make service maintenance simple.

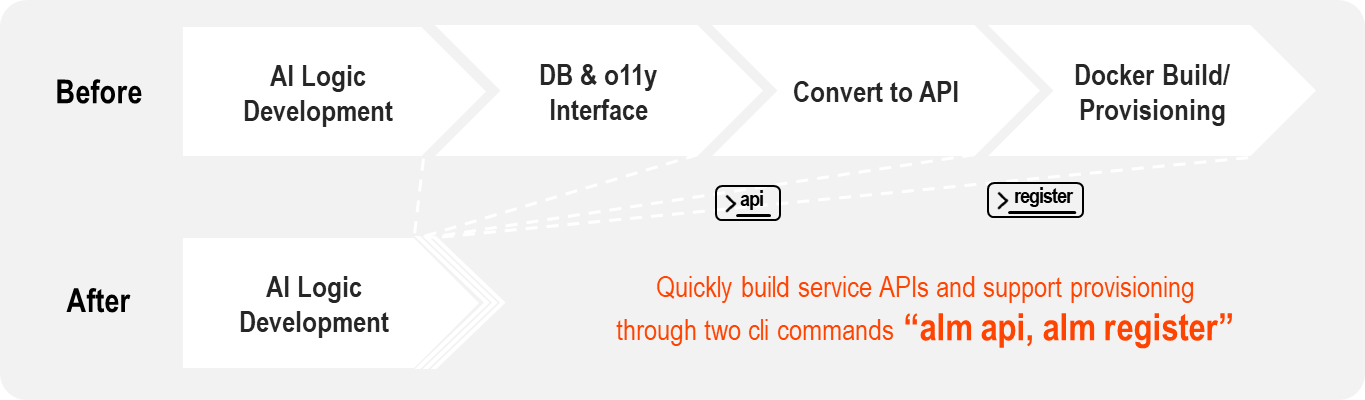

In addition, it supports automatic service API conversion, making the development process much more efficient and faster.
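To make the idea of converting logic into a service API concrete, here is a minimal sketch in plain Python. It is not ALO-LLM's implementation or API. The helper `to_endpoint` and the example function `summarize` are hypothetical names introduced only for illustration. The sketch assumes that "API conversion" means wrapping a developer's function so that it accepts a JSON request body and returns a status code with a JSON response.

```python
import inspect
import json
from typing import Any, Callable, Tuple


def to_endpoint(func: Callable[..., Any]) -> Callable[[str], Tuple[int, str]]:
    """Wrap a plain function as a JSON-in / JSON-out request handler (hypothetical helper)."""
    signature = inspect.signature(func)

    def handle(body: str) -> Tuple[int, str]:
        try:
            payload = json.loads(body)
        except json.JSONDecodeError:
            return 400, json.dumps({"error": "body is not valid JSON"})
        if not isinstance(payload, dict):
            return 400, json.dumps({"error": "body must be a JSON object"})
        try:
            # bind() checks the payload keys against the function's parameters.
            bound = signature.bind(**payload)
        except TypeError as exc:
            return 422, json.dumps({"error": str(exc)})
        result = func(*bound.args, **bound.kwargs)
        return 200, json.dumps({"result": result})

    return handle


# Hypothetical service logic: a stand-in for a real LLM call.
def summarize(text: str, max_words: int = 5) -> str:
    return " ".join(text.split()[:max_words])


summarize_api = to_endpoint(summarize)
status, response = summarize_api(
    '{"text": "LLMOps automates deployment and operation of services"}'
)
print(status, response)
```

The developer writes only `summarize`. Request parsing, parameter validation, and response formatting live in the wrapper, which is the kind of repetitive work a standardized tool can take over.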

AI Service Operation

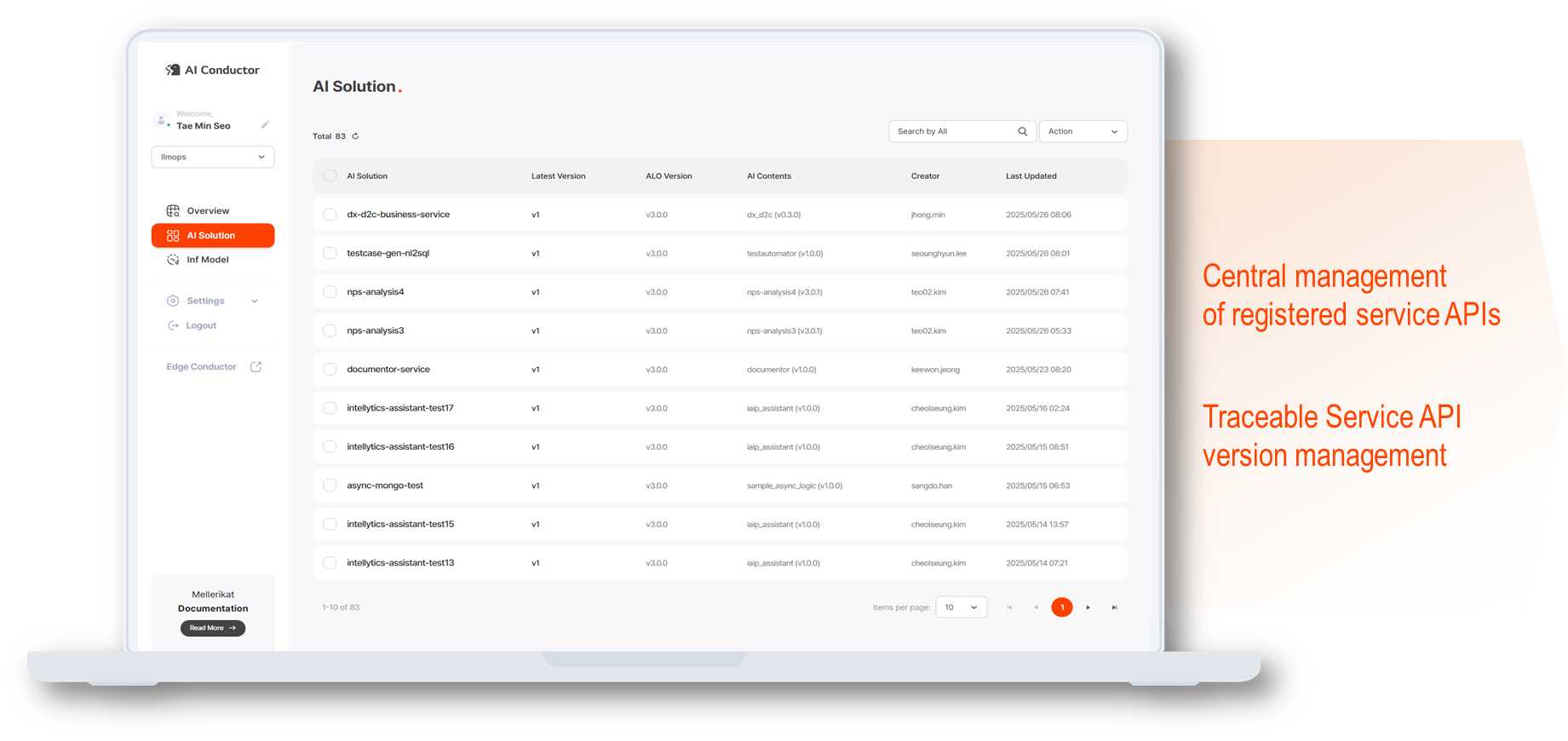

AI Conductor: Comprehensive Monitoring of Registered Service APIs

Once service APIs developed with ALO are registered in AI Conductor, users can manage the service APIs in operation by version.

AI Conductor provides a web-based UI for registering meta-information required for service API operation and comprehensive monitoring, supporting continuous LLMOps operation.

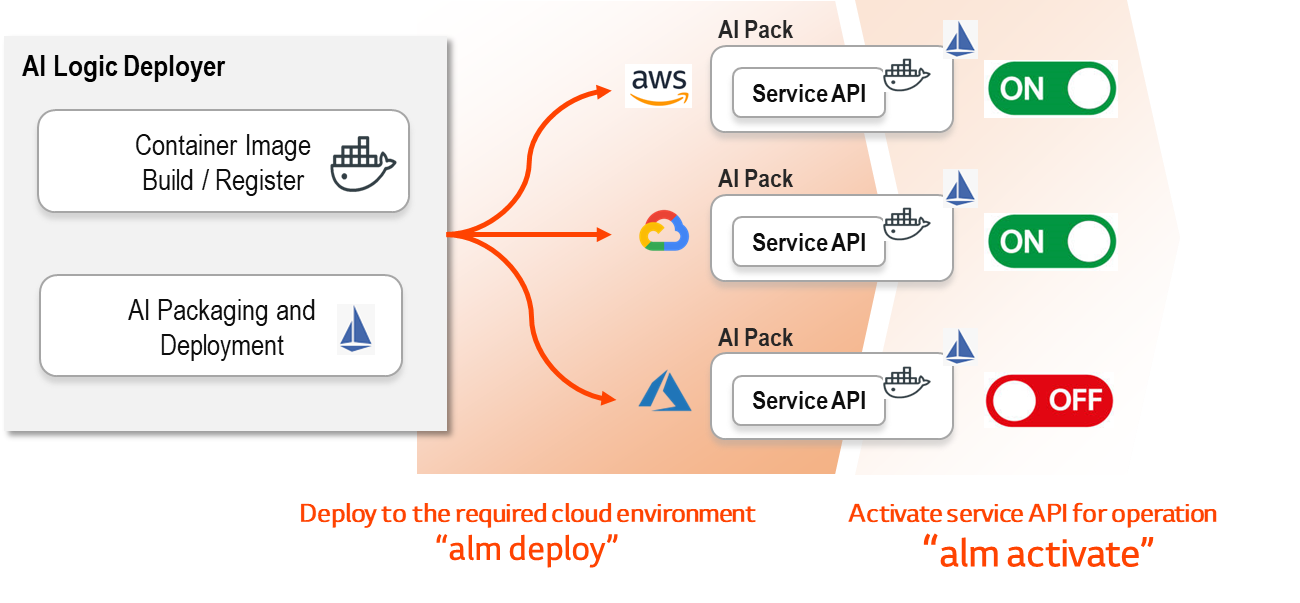

Easily Deploy Service APIs to Various Cloud Environments with AI Logic Deployer

An automated deployment system lets you deploy and operate service APIs in the cloud environment of your choice without complex settings or procedures.

This shortens the technical work needed before a service can start and gives developers a better experience.

Because operation is simpler, you no longer need to spend much time on server management or deployment tasks, which improves overall operational efficiency.

Operate Service APIs Easily with Simple Commands: AI Logic Deployer

To operate service APIs, you need to dockerize the application and apply Istio, a service mesh, both of which require considerable know-how and time.

Deploying applications to Kubernetes Pods and managing traffic is not easy either.

AI Logic Deployer lets you handle all of this with simple CLI commands.

It automates the steps needed to containerize applications and adds traffic management and security features through Istio.

With AI Logic Deployer, you can easily deploy and operate service APIs without spending a lot of time on complex technical operations.
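To give a sense of the configuration involved, the sketch below builds two resources that would otherwise be written by hand: a Kubernetes `Deployment` and an Istio `VirtualService` that splits traffic between two versions. This is an illustration only, not the output or the CLI of AI Logic Deployer. The functions `build_deployment` and `build_virtual_service`, the service name `qa-agent`, and the image path are hypothetical. The resource fields themselves follow the public Kubernetes and Istio schemas.

```python
import json
from typing import Dict


def build_deployment(name: str, version: str, image: str, port: int, replicas: int = 2) -> dict:
    """Return a Kubernetes Deployment manifest for one version of a service."""
    labels = {"app": name, "version": version}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": f"{name}-{version}", "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the Pod template labels.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {"name": name, "image": image, "ports": [{"containerPort": port}]}
                    ]
                },
            },
        },
    }


def build_virtual_service(name: str, weights: Dict[str, int]) -> dict:
    """Return an Istio VirtualService that splits traffic across version subsets.

    The subsets referenced here must also be defined in a DestinationRule,
    which is omitted to keep the sketch short.
    """
    if sum(weights.values()) != 100:
        raise ValueError("route weights must sum to 100")
    routes = [
        {"destination": {"host": name, "subset": version}, "weight": weight}
        for version, weight in weights.items()
    ]
    return {
        "apiVersion": "networking.istio.io/v1beta1",
        "kind": "VirtualService",
        "metadata": {"name": name},
        "spec": {"hosts": [name], "http": [{"route": routes}]},
    }


# Hypothetical service name and image; kubectl accepts JSON as well as YAML.
deployment = build_deployment("qa-agent", "v2", "registry.example.com/qa-agent:2.0", 8000)
virtual_service = build_virtual_service("qa-agent", {"v1": 90, "v2": 10})
print(json.dumps(deployment, indent=2))
print(json.dumps(virtual_service, indent=2))
```

Even this reduced example has several places where labels, names, and weights must agree, which is why automating the process saves time.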

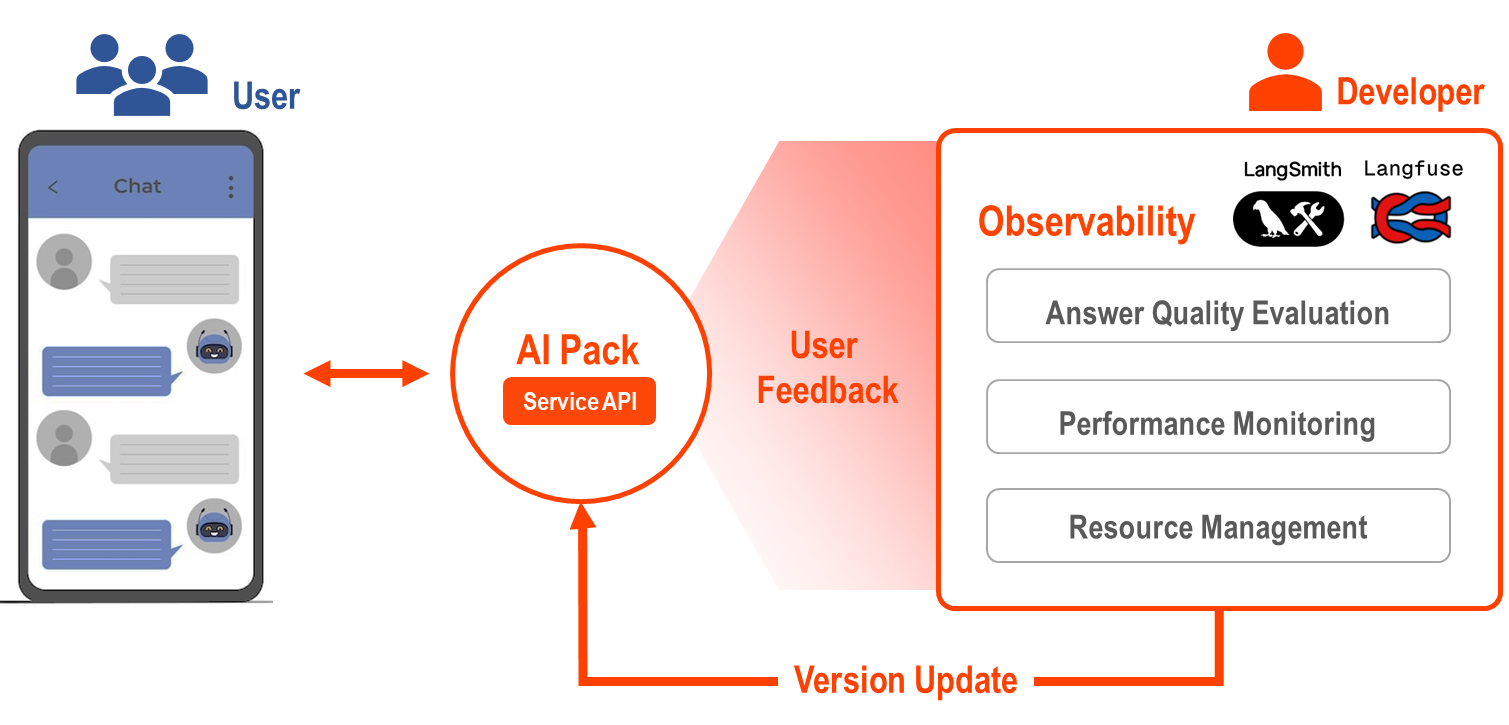

Seamless Integration with Various Service Tools by Packaging with AI Pack

AI Pack supports an efficient and flexible operating environment by integrating with various service tools and combining diverse features.

AI Pack links the service with the databases that hold the data required for LLM service operation and with observability tools for improving service performance. This enables data extraction and analysis, user feedback collection, and monitoring.

With AI Pack, you can simplify complex operating environments and implement smarter operational strategies.

Easily Manage Answer Quality and Optimize Logic with AI Pack and Observability

AI Pack is more than an AI execution tool: it is integrated with an observability module that monitors and analyzes answer quality, processing speed, cost, and more in real time.

With this module, developers can continuously evaluate the quality of the answers the AI provides and improve performance.

In addition, you can see how costs vary with processing speed and resource usage, which supports efficient operation.
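The metrics mentioned above can be pictured with a small sketch. It does not use the AI Pack observability module or any real observability tool. The `RequestRecord` class, the `summarize_metrics` function, and the token prices are assumptions chosen only to show how latency, cost, and user feedback might be aggregated per request.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List, Optional

# Placeholder prices in USD per 1,000 tokens; real prices depend on the model provider.
PRICE_PER_1K_INPUT = 0.50
PRICE_PER_1K_OUTPUT = 1.50


@dataclass
class RequestRecord:
    latency_s: float
    input_tokens: int
    output_tokens: int
    feedback: Optional[int] = None  # 1 = helpful, 0 = not helpful, None = no feedback

    @property
    def cost_usd(self) -> float:
        return (
            self.input_tokens * PRICE_PER_1K_INPUT
            + self.output_tokens * PRICE_PER_1K_OUTPUT
        ) / 1000


def summarize_metrics(records: List[RequestRecord]) -> dict:
    """Aggregate speed, cost, and answer-quality signals over a batch of requests."""
    if not records:
        raise ValueError("at least one record is required")
    # Only requests that received user feedback count toward the helpful rate.
    rated = [r.feedback for r in records if r.feedback is not None]
    return {
        "requests": len(records),
        "avg_latency_s": mean(r.latency_s for r in records),
        "total_cost_usd": sum(r.cost_usd for r in records),
        "helpful_rate": mean(rated) if rated else None,
    }


records = [
    RequestRecord(latency_s=1.0, input_tokens=1000, output_tokens=200, feedback=1),
    RequestRecord(latency_s=2.0, input_tokens=2000, output_tokens=400, feedback=0),
    RequestRecord(latency_s=3.0, input_tokens=1000, output_tokens=0),
]
summary = summarize_metrics(records)
print(summary)
```

Tracking these values per request makes it possible to compare versions of the service logic on quality, speed, and cost at the same time.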

Mellerikat LLMOps

"Start a New Paradigm of AI Operation with Mellerikat LLMOps!"

Mellerikat LLMOps solves the difficulties of sustainable LLM service operation.

It automates complex deployment and operation processes, saving developers' time and effort so they can focus on creative work.

Now, with LLMOps, you can easily deploy APIs in the cloud environment and provide a better experience to users through efficient operation.

"Join us in shaping the future of LLM-based services!"