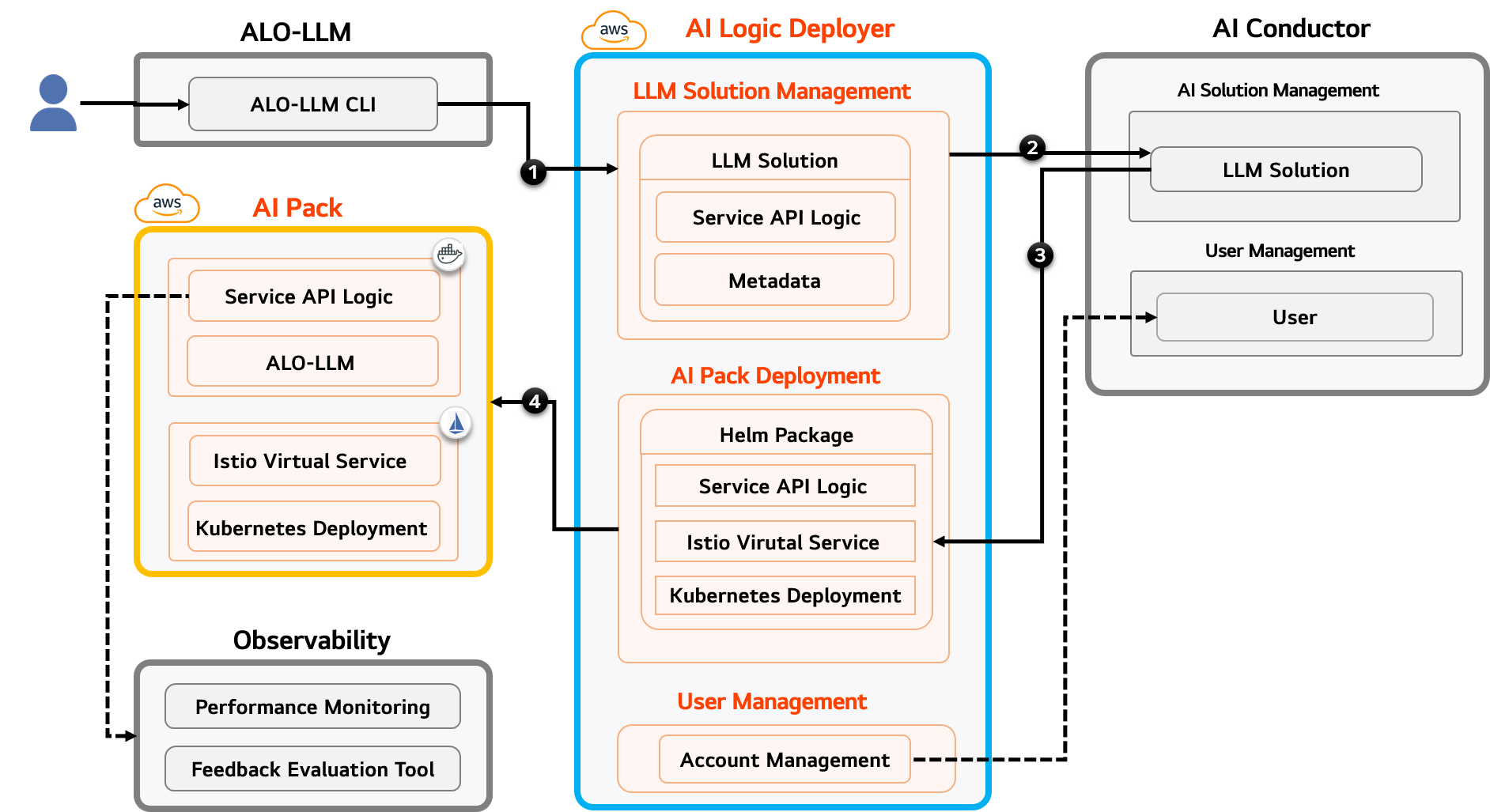

AI Logic Deployer

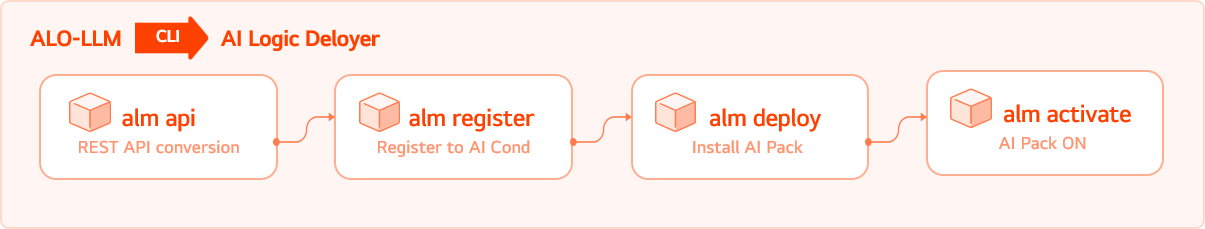

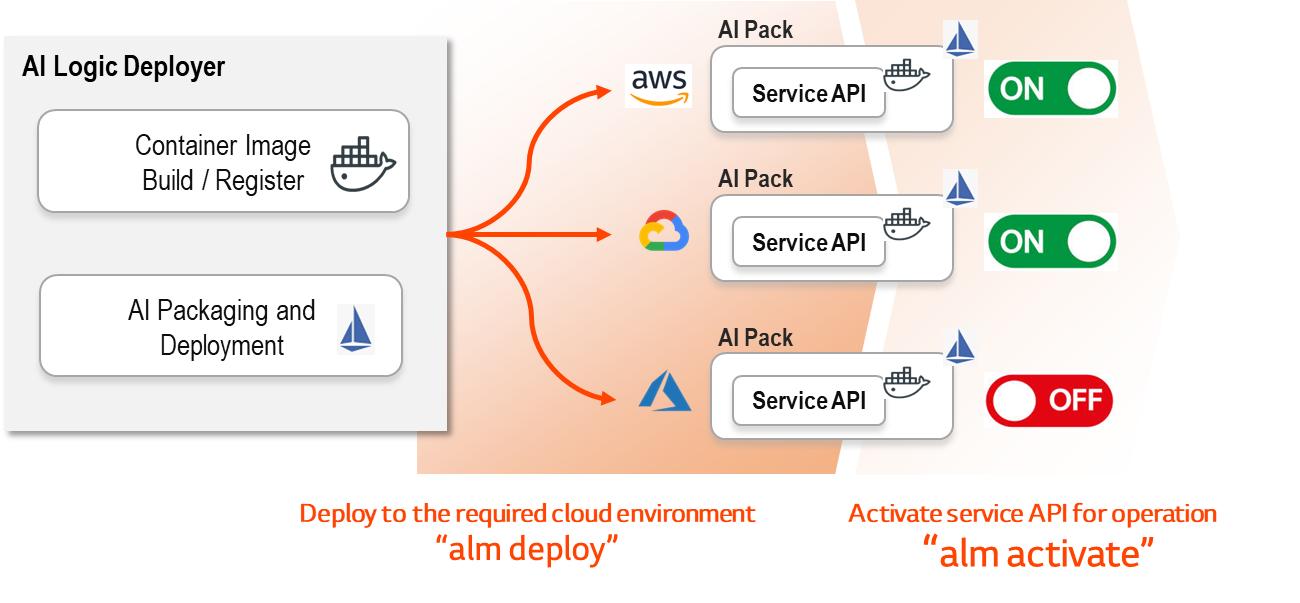

AI Logic Deployer is a tool that simplifies the deployment and operation of LLM services. It supports a variety of infrastructure environments and automates the entire process, from Service API packaging, registration, and deployment through activation, using intuitive CLI commands. Developers can therefore launch services quickly and manage them reliably without the burden of complex operations, and spend more of their effort on developing and improving their services.

Simplified Workflow

Automated Deployment Environment That Simplifies Complex Operations

AI Logic Deployer is designed to reduce the burden of complex API development and operational environments. With a single CLI command, it automates the entire workflow: packaging services as Docker images, configuring an Istio-based service mesh, and deploying the services to Kubernetes Pods whose traffic the mesh manages. Because this process is automated, developers can run Service APIs quickly and reliably without worrying about infrastructure setup or deployment strategies. By minimizing the time spent on technical operations, AI Logic Deployer lets developers focus on development and service improvement.
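The packaging and deployment flow above ends in ordinary Kubernetes objects. The Python sketch below shows what such objects might look like; it is not the tool's actual output, and the service name, image reference, and container port 8080 are hypothetical. It does rely on one real Istio convention: labeling a namespace `istio-injection: enabled` makes Istio inject its sidecar proxy into every Pod created there, which is how the Pods join the mesh.

```python
import json


def build_manifests(service: str, image: str, replicas: int = 1) -> list[dict]:
    """Return a Namespace and a Deployment for one Service API.

    Hypothetical sketch: the manifests AI Logic Deployer actually
    generates are not documented here.
    """
    labels = {"app": service}
    namespace = {
        "apiVersion": "v1",
        "kind": "Namespace",
        "metadata": {
            "name": service,
            # Istio's automatic sidecar injection is enabled per namespace
            # with this label, so every Pod created here joins the mesh.
            "labels": {"istio-injection": "enabled"},
        },
    }
    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": service, "namespace": service, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": service,
                            # The Docker image produced by the packaging step.
                            "image": image,
                            # Assumed port; the real Service API port is not stated.
                            "ports": [{"containerPort": 8080}],
                        }
                    ]
                },
            },
        },
    }
    return [namespace, deployment]


if __name__ == "__main__":
    # kubectl accepts JSON manifests as well as YAML.
    items = build_manifests("chat-api", "registry.example.com/chat-api:0.1.0")
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```

Emitting plain dictionaries keeps the sketch dependency-free; a real tool would more likely render Helm templates, which is the packaging format the Architecture section names.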

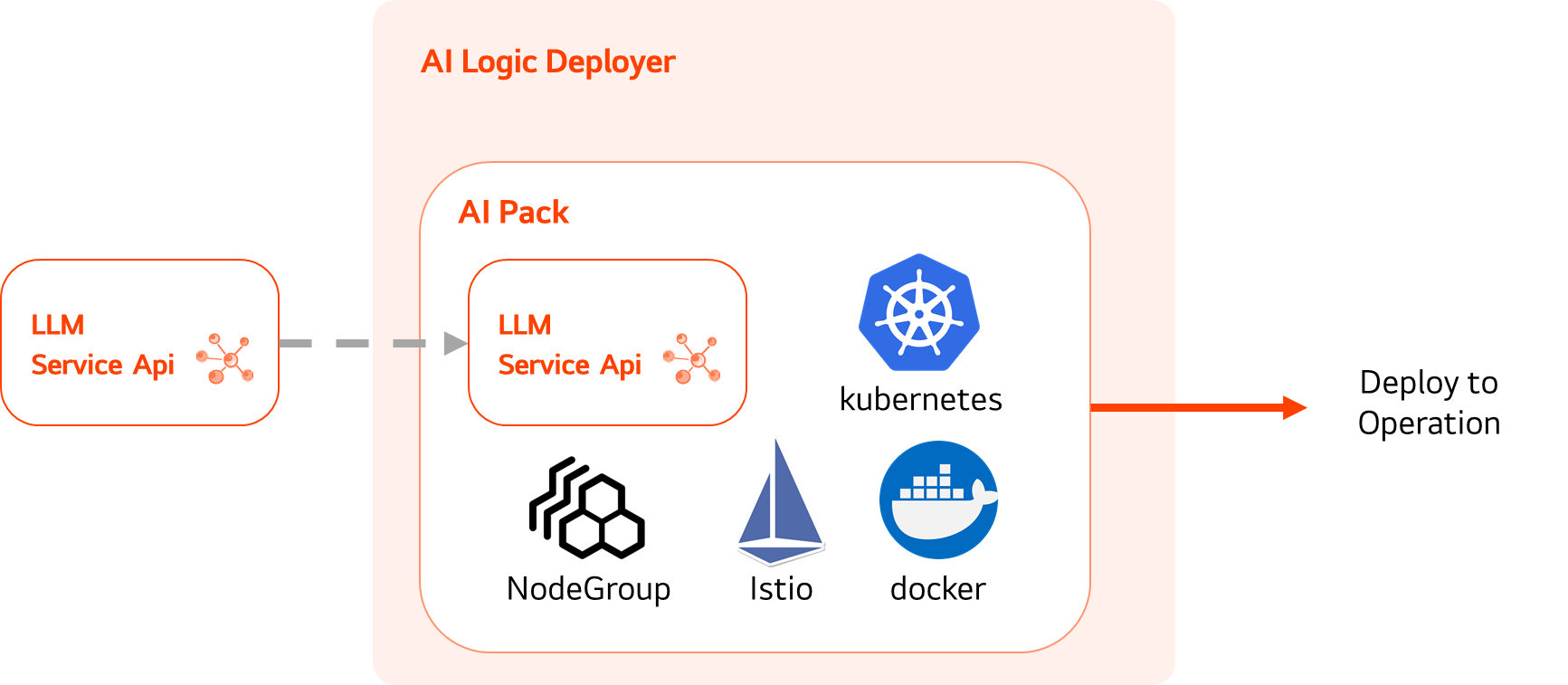

AI Pack

Optimized Environment for LLM Service Operation

AI Pack, one of the core components of AI Logic Deployer, provides an optimized infrastructure environment for deploying and operating Service APIs reliably. Before the Service API runs in a Docker container, AI Logic Deployer automatically installs the AI Pack to set up the required infrastructure. This goes beyond simple service deployment: the AI Pack keeps the LLM Service Logic running smoothly and, through its API interface, integrates it with the frontend, databases (DB), and observability tools.

Provision of an Independent Service Operation Environment

Each infrastructure environment that AI Pack sets up is configured independently, so it does not affect other LLM services. For example, if excessive user traffic overloads one service's memory and causes a temporary interruption, only that service is affected; the other LLM services keep running normally. This isolation protects the stability of the system as a whole.
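The isolation described above can be illustrated with a small simulation. This is a hypothetical model, not AI Pack's implementation: each environment owns a separate memory budget, so a spike that exceeds one service's budget stops only that service. In Kubernetes, a comparable effect comes from setting `resources.limits.memory` on each container; the document does not state which mechanism AI Pack uses.

```python
from dataclasses import dataclass


@dataclass
class ServiceEnv:
    """One independently configured environment (hypothetical model of an AI Pack)."""
    name: str
    memory_limit_mib: int
    memory_used_mib: int = 0
    running: bool = True

    def handle_load(self, extra_mib: int) -> None:
        # Load is charged only against this environment's own budget.
        self.memory_used_mib += extra_mib
        if self.memory_used_mib > self.memory_limit_mib:
            # Exceeding the limit stops only this service, much as a
            # container that exceeds its memory limit is OOM-killed.
            self.running = False


def apply_traffic(envs: dict[str, ServiceEnv], load_mib: dict[str, int]) -> dict[str, bool]:
    """Apply per-service load and report which services are still running."""
    for name, extra in load_mib.items():
        envs[name].handle_load(extra)
    return {name: env.running for name, env in envs.items()}


envs = {
    "chat": ServiceEnv("chat", memory_limit_mib=2048),
    "summarize": ServiceEnv("summarize", memory_limit_mib=2048),
}
# A traffic spike overloads "chat"; "summarize" sees normal load.
print(apply_traffic(envs, {"chat": 4096, "summarize": 512}))
# → {'chat': False, 'summarize': True}
```

Because no environment reads another's counters, the failure of `chat` cannot propagate to `summarize`, which is the property the paragraph above claims for AI Pack.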

Multi-Cloud Compatibility

Support for Various Cloud Deployment Environments

AI Logic Deployer is compatible with major cloud platforms such as GCP, Azure, and AWS, so it can be configured flexibly across different operational environments. Because it is installed with Helm, the system can be set up quickly without complex configuration. This flexibility and simplicity give developers more freedom in architectural design and reduce the operational burden, making service development and operation more efficient.
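Because installation is Helm-based, moving between GCP, Azure, and AWS can come down to swapping a values file while the chart stays the same. The Python sketch below builds such a command. The release name, chart path, and `values-<cloud>.yaml` file names are assumptions for illustration; `helm upgrade --install`, `--namespace`, `--create-namespace`, and `-f` are standard Helm 3 options. `upgrade --install` installs the release if it is absent and upgrades it otherwise, so the same command can be rerun safely.

```python
import shlex

# Hypothetical per-cloud values files; the real chart and its values
# are not described in this document.
VALUES_FILES = {
    "aws": "values-aws.yaml",
    "azure": "values-azure.yaml",
    "gcp": "values-gcp.yaml",
}


def helm_install_cmd(release: str, chart: str, cloud: str, namespace: str) -> list[str]:
    """Build an idempotent `helm upgrade --install` command for one cloud."""
    if cloud not in VALUES_FILES:
        raise ValueError(f"unsupported cloud: {cloud!r}")
    return [
        "helm", "upgrade", "--install", release, chart,
        "--namespace", namespace,
        "--create-namespace",
        # Only the values file differs between clouds; the chart is shared.
        "-f", VALUES_FILES[cloud],
    ]


print(shlex.join(helm_install_cmd("ai-logic-deployer", "./chart", "gcp", "ai-logic")))
# → helm upgrade --install ai-logic-deployer ./chart --namespace ai-logic --create-namespace -f values-gcp.yaml
```

The function only builds the argument list; passing it to `subprocess.run(cmd, check=True)` would execute it against the current kube-context.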

Microservice

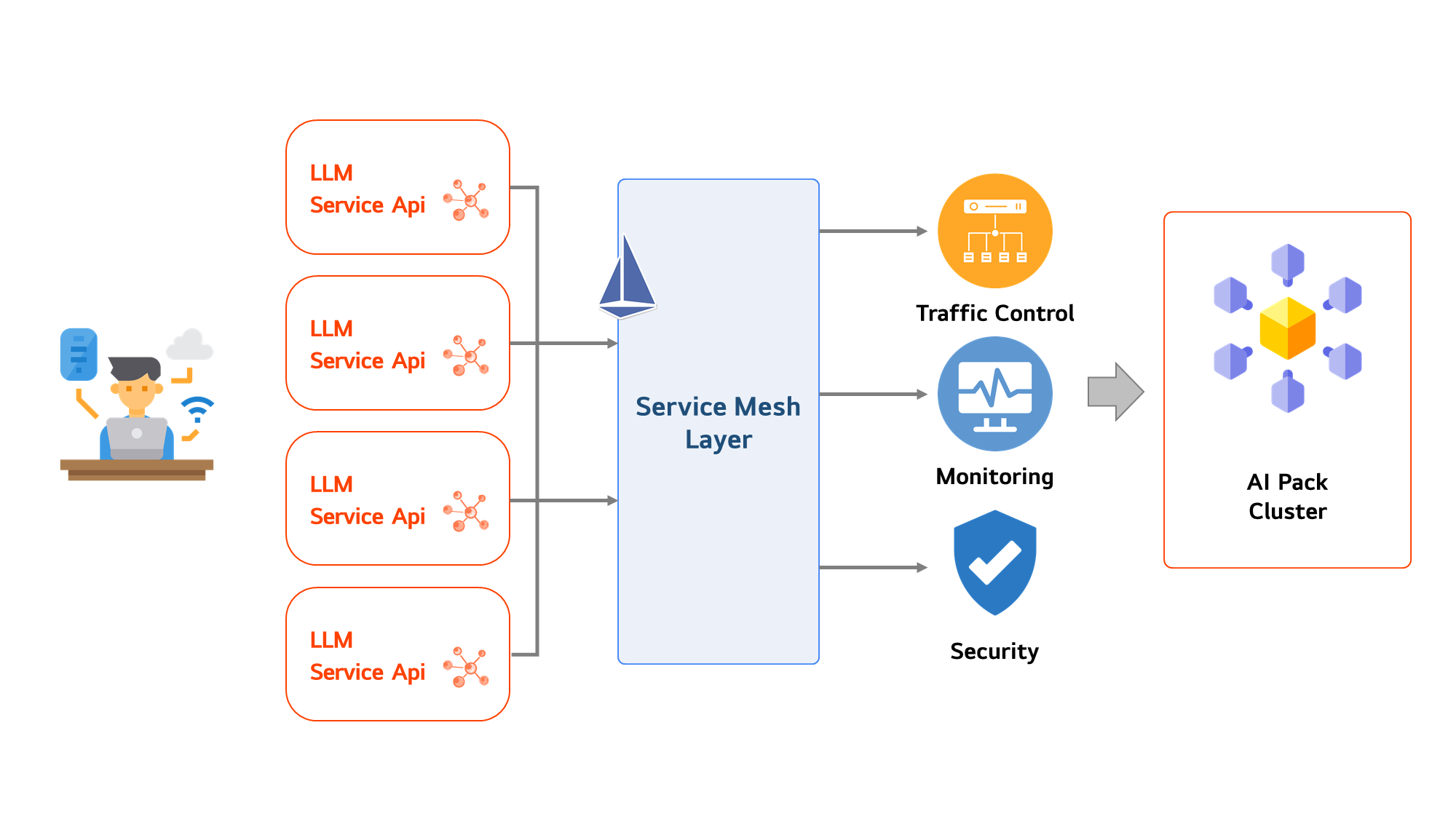

Reliable Microservice Support Based on Service Mesh

Because AI Logic Deployer is built on a Service Mesh architecture, communication between the AI Packs running in the service environment is managed centrally at the infrastructure layer, which improves both operational efficiency and security. This makes traffic control, real-time monitoring, and fault handling easier, resulting in a more stable and reliable LLMOps service.
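Traffic control in an Istio mesh is typically expressed as a `VirtualService`. As a hypothetical example of the kind of control described above (the document does not say that AI Logic Deployer generates these resources), the sketch below splits requests between two versions of a Service API. The host and subset names are placeholders, and the subsets would have to be defined in a matching `DestinationRule`.

```python
def weighted_virtual_service(host: str, weights: dict[str, int]) -> dict:
    """Build an Istio VirtualService that splits traffic across subsets."""
    # This sketch treats weights as percentages and requires them to sum to 100.
    if sum(weights.values()) != 100:
        raise ValueError("route weights must sum to 100")
    return {
        "apiVersion": "networking.istio.io/v1beta1",
        "kind": "VirtualService",
        "metadata": {"name": host},
        "spec": {
            "hosts": [host],
            "http": [
                {
                    # One weighted destination per subset (e.g. per model version).
                    "route": [
                        {"destination": {"host": host, "subset": subset}, "weight": w}
                        for subset, w in weights.items()
                    ]
                }
            ],
        },
    }


vs = weighted_virtual_service("chat-api", {"v1": 90, "v2": 10})
```

A 90/10 split like this is a common way to canary a new model version: if the `v2` subset misbehaves, only about a tenth of requests are affected, and its weight can be set back to 0.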

Architecture

System Architecture for Simplified Operation

Developers use the ALO-LLM CLI to register LLM Service APIs with AI Conductor. During registration, the user is authenticated through AI Conductor's user management features. AI Logic Deployer then packages the registered Service API into a Helm chart and deploys it as an AI Pack on a Kubernetes platform running the Istio Service Mesh. Once deployed, the Service API running in the AI Pack is continuously monitored by integrated observability tools, keeping its operation stable and transparent.
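The order of operations in this architecture can be summarized as a pipeline in which authentication gates every later step. The Python sketch below is purely illustrative: the step functions, context keys, and in-memory registry are hypothetical stand-ins for AI Conductor, the Helm packaging step, and the observability tools, none of which expose these functions.

```python
from typing import Callable

# Each step receives a shared context dict and may raise to abort the run.
Step = tuple[str, Callable[[dict], None]]


def authenticate(ctx: dict) -> None:
    # Stand-in for AI Conductor's user management check.
    if ctx["user"] not in ctx["authorized_users"]:
        raise PermissionError(f"{ctx['user']} may not register services")


def register(ctx: dict) -> None:
    # Stand-in for registering the Service API with AI Conductor.
    ctx["registry"].add(ctx["service"])


def package_chart(ctx: dict) -> None:
    # Hypothetical chart reference; the real packaging output is not documented here.
    ctx["chart"] = f"{ctx['service']}-chart"


def deploy_ai_pack(ctx: dict) -> None:
    # Stand-in for deploying the chart as an AI Pack on Kubernetes with Istio.
    ctx["deployed"] = True


def start_monitoring(ctx: dict) -> None:
    # Stand-in for attaching the observability tools.
    ctx["monitored"] = True


PIPELINE: list[Step] = [
    ("authenticate", authenticate),
    ("register", register),
    ("package_chart", package_chart),
    ("deploy_ai_pack", deploy_ai_pack),
    ("start_monitoring", start_monitoring),
]


def run_pipeline(ctx: dict) -> list[str]:
    """Run steps in order and return the names of the steps that completed."""
    done = []
    for name, step in PIPELINE:
        try:
            step(ctx)
        except PermissionError:
            # A failed authentication stops everything downstream.
            break
        done.append(name)
    return done


ctx = {"user": "alice", "authorized_users": {"alice"}, "service": "chat-api", "registry": set()}
print(run_pipeline(ctx))
# → ['authenticate', 'register', 'package_chart', 'deploy_ai_pack', 'start_monitoring']
```

With an unauthorized user, `run_pipeline` returns an empty list and nothing is registered, mirroring the requirement that authentication succeed before registration.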