Quick Start Experience

What You'll Learn in This Guide

The Quick Start Experience is a single guided workflow that takes you through EVA's core features in one pass, from camera connection to AI detection and real-time monitoring. In this guide you will:

Instantly connect a camera to EVA using only an RTSP URL

Define objects to detect and create detection scenarios with the connected camera

Choose the right AI models (Vision Model, LLM, VLM) for your use case

Set detection intervals and notification conditions

Start real-time monitoring and receive alerts

Prerequisites Before Starting

Prepare the following items to ensure a smooth experience with EVA.

Verify Your Try EVA Account

Check the account information sent after your trial application (delivered via email)

Have your EVA login URL and password ready

After logging in, confirm that the Camera List page loads correctly

Prepare an RTSP URL

If using your own camera: Find your camera's RTSP URL

- Example URL format: rtsp://username:password@ip-address:port/stream

- Refer to your camera manufacturer's documentation for the exact RTSP path

If using a demo camera: Use the demo RTSP URL provided in the email (if requested during trial signup)
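
Before pasting an RTSP URL into EVA, it can help to check its shape locally. The following is a minimal sketch using only Python's standard library. The function names `build_rtsp_url` and `check_rtsp_url` are hypothetical helpers for illustration and are not part of EVA. Port 554 is the standard RTSP port, but your camera may use a different one.

```python
from urllib.parse import quote, urlsplit


def build_rtsp_url(user: str, password: str, host: str,
                   port: int = 554, path: str = "stream") -> str:
    """Assemble an RTSP URL, percent-encoding credentials so that
    characters such as '@' or ':' in a password do not break the URL."""
    return (f"rtsp://{quote(user, safe='')}:{quote(password, safe='')}"
            f"@{host}:{port}/{path.lstrip('/')}")


def check_rtsp_url(url: str) -> list[str]:
    """Return a list of problems found in an RTSP URL (empty if none)."""
    problems = []
    parts = urlsplit(url)
    if parts.scheme != "rtsp":
        problems.append("scheme must be rtsp://")
    if not parts.hostname:
        problems.append("missing camera IP address or hostname")
    try:
        parts.port  # accessing .port raises ValueError if it is invalid
    except ValueError:
        problems.append("port must be a number between 0 and 65535")
    if not parts.path or parts.path == "/":
        problems.append("missing stream path")
    return problems


if __name__ == "__main__":
    url = build_rtsp_url("admin", "password123", "192.168.1.100")
    print(url, check_rtsp_url(url))
```

This only validates the URL's format. Whether the stream path is correct for your camera still depends on the manufacturer's documentation.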

Plan Your Detection Scenario in Advance

What do you want to monitor? (e.g., workplace safety, access control, inventory management)

When do you want to receive alerts? (e.g., no hard hat detected, intrusion after hours, item movement)

Planning ahead makes scenario creation much faster and more accurate

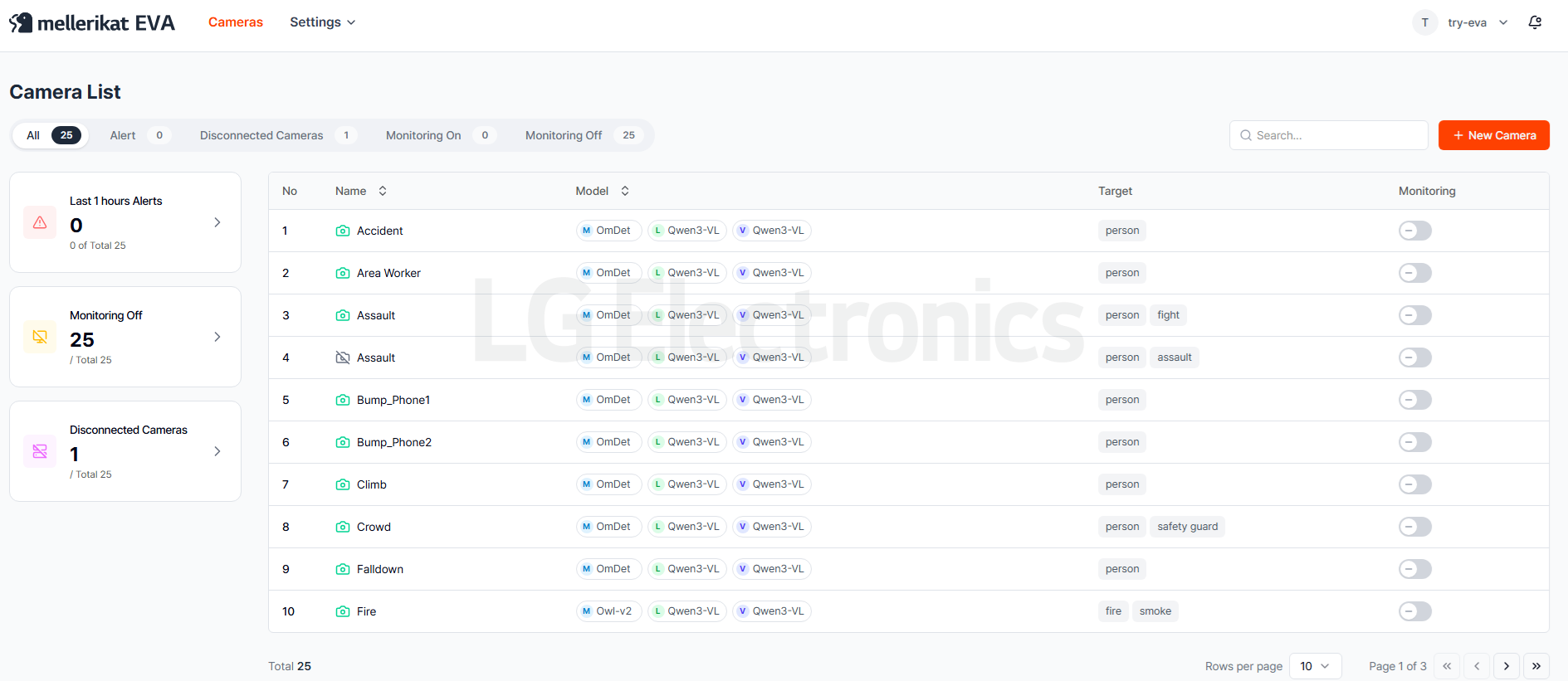

Access the Camera List Page

Step Description

The Camera List page is the central hub that appears right after logging into EVA. Click the "+ New Camera" button here to begin registering your first camera.

- Role of the Camera List Page

- View status of all registered cameras at a glance

- Turn monitoring On/Off for each camera

- Check recent alert activity

- Add, edit, or delete cameras

Actions

- Log in to EVA

- Go to the EVA access URL provided during trial signup

- Enter your credentials — the Camera List page will appear automatically

- Click "+ New Camera"

- Click the "+ New Camera" button in the top-right corner

- You will be taken to the Camera Registration page

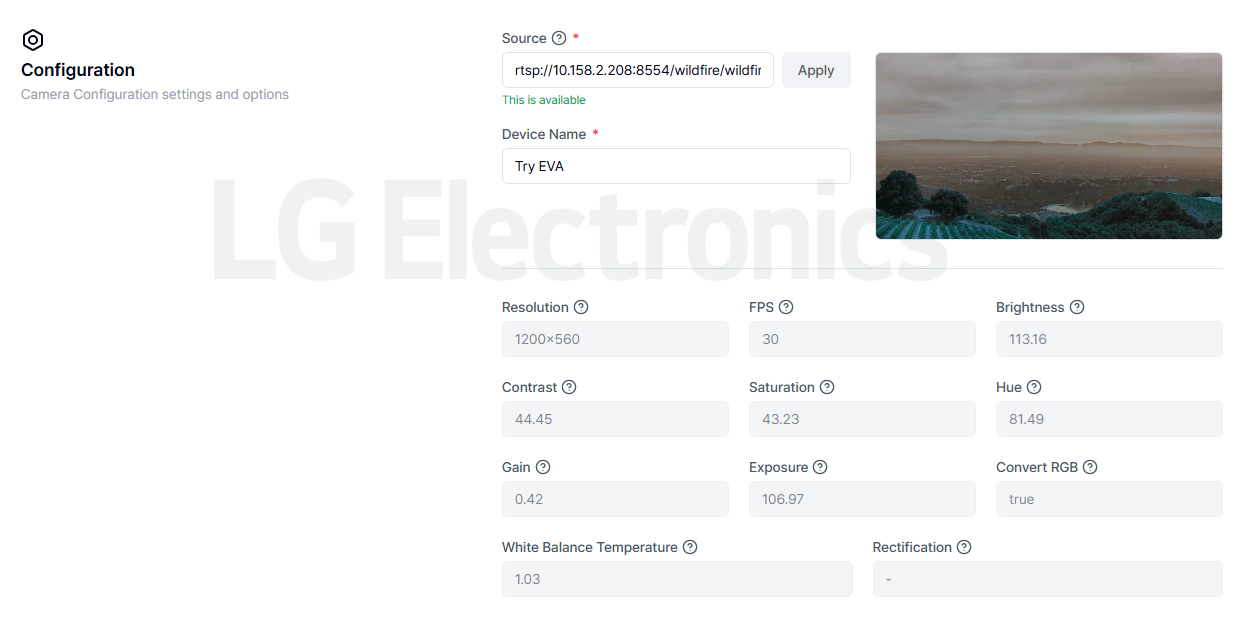

Configuration - Camera Connection Settings

Step Description

Configuration is the first step to connect your camera to EVA. Simply enter the RTSP URL — EVA will automatically test the connection and retrieve resolution and FPS information.

- Overall Camera Registration Flow

- Configuration: Connect camera and enter basic info

- Detection Scenario: Write detection scenarios

- Model: Select AI models

- Detection Settings: Set detection interval and filtering

Actions

- Enter Source (RTSP URL)

- Paste your prepared RTSP URL into the Source field

- Format: rtsp://username:password@IP-address:port/stream-path

- Example: rtsp://admin:password123@192.168.1.100:554/stream

- Click Apply

- Click "Apply" — EVA will test the connection automatically

- On success: A still image from the camera appears in the Preview area

- Resolution and FPS are filled in automatically

- On failure: The message "This is not available" appears

- Troubleshooting Connection Failure

- Double-check RTSP URL format

- Ensure the camera is powered on

- Check network connectivity (firewall, RTSP port open)

- Set Device Name

- Enter a unique, descriptive name for easy identification

- Use a systematic naming convention for future multi-camera management

- Review Camera Details

- Resolution, FPS: Automatically displayed

- Brightness, Contrast, Saturation, Hue, Gain: Image quality info shown

- Add Camera Metadata (Optional)

- Add location, direction, lighting conditions, etc.

- Example: "1F lobby main entrance, employee access route"
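
If the connection test fails, one quick way to rule out network problems is to check whether the camera's RTSP port accepts TCP connections from your machine. The sketch below uses Python's standard `socket` module. The function name `rtsp_port_reachable` is a hypothetical helper, and the fallback to port 554 (the standard RTSP port) is an assumption for URLs that omit the port.

```python
import socket
from urllib.parse import urlsplit


def rtsp_port_reachable(url: str, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to the URL's host and port succeeds."""
    parts = urlsplit(url)
    host = parts.hostname
    if host is None:
        return False
    port = parts.port or 554  # assumption: default RTSP port when none is given
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


if __name__ == "__main__":
    print(rtsp_port_reachable("rtsp://admin:password123@192.168.1.100:554/stream"))
```

A `True` result only shows that the port is open. It does not confirm the credentials or the stream path, and it reflects reachability from your machine, which may differ from the network EVA connects from.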

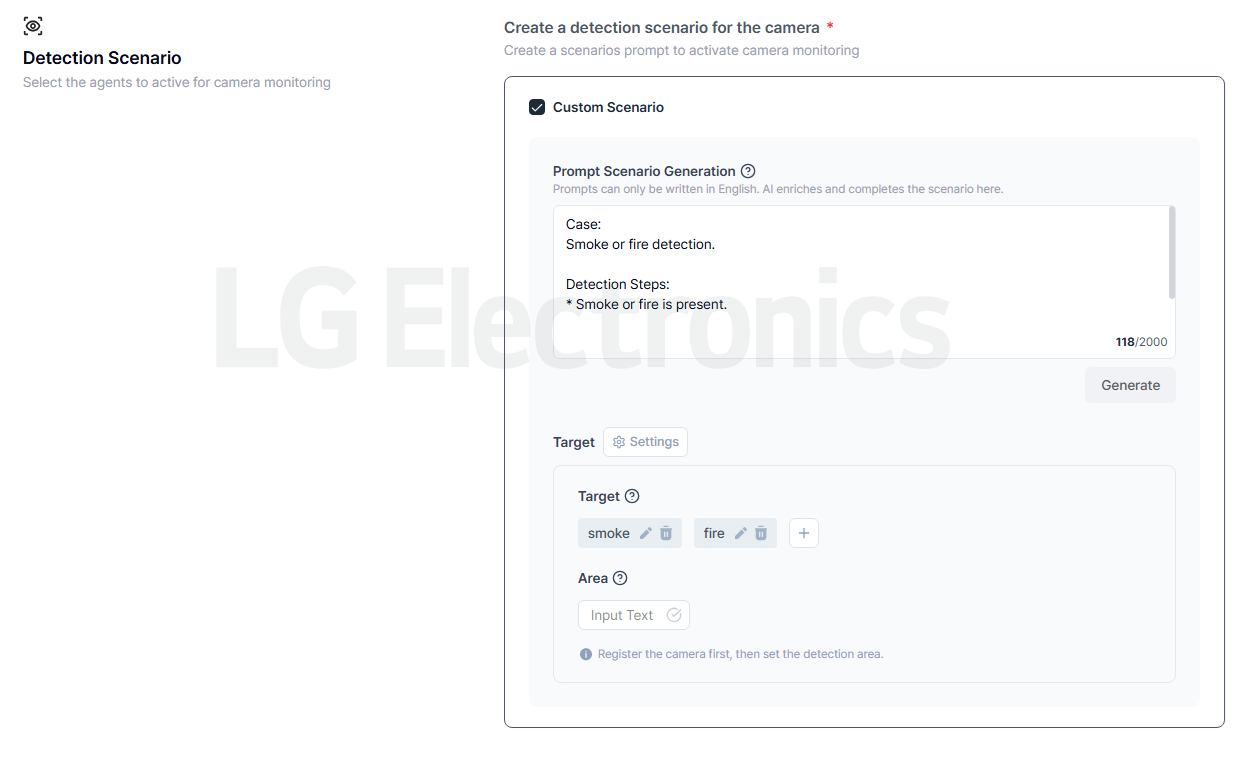

Detection Scenario - Set Up Detection Rules

Step Description

Detection Scenario is the heart of EVA. Write in natural language what, when, and how you want to detect — EVA's AI understands and executes it automatically. No coding or complex configuration required.

- Custom Scenario: Applies only to the current camera — ideal for camera-specific conditions

- Common Scenario: Reusable across multiple cameras

- Both can be used together (e.g., common safety rules + camera-specific rules)

- This guide focuses on Custom Scenario. See "Advanced Configuration" for Common Scenario

Actions

- Enter Scenario Prompt

- Describe the situation you want to detect in natural language

- The more specific and clear the description, the more accurate the detection

- Safety example: "Alert me if someone in the workplace is not wearing a hard hat"

- Access control example: "Notify me if the door opens outside business hours"

- Quality control example: "Alert me if a product falls off the conveyor belt"

- Generate Enriched Prompt

- Click "Generate"

- AI analyzes your prompt and creates a structured Enriched Prompt

- Objects, conditions, and triggers are clearly defined

- Review & Edit Enriched Prompt

- Confirm the AI understood your intent correctly

- You can manually edit the Enriched Prompt if needed

- Set Targets

- The objects to detect (Targets) are auto-generated from your prompt

- Add or remove targets as needed

- Adjust Detection Sensitivity (Optional)

- Click "Setting" on each target to adjust sensitivity

- High: Detects more (reduces missed detections, may increase false positives)

- Low: Only confident detections (reduces false positives, may miss some)

- Start with default and adjust later based on real-world results
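
The sensitivity trade-off above can be pictured as a confidence threshold applied to each detection. The sketch below is illustrative only: the `Detection` class, the `filter_detections` function, and the threshold values are assumptions, not EVA's documented internals.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    label: str
    confidence: float  # score between 0.0 and 1.0


# Hypothetical thresholds; EVA's actual values are not documented here.
SENSITIVITY_THRESHOLDS = {"high": 0.3, "default": 0.5, "low": 0.7}


def filter_detections(detections: list[Detection],
                      sensitivity: str = "default") -> list[Detection]:
    """Keep detections whose confidence meets the sensitivity's threshold."""
    threshold = SENSITIVITY_THRESHOLDS[sensitivity]
    return [d for d in detections if d.confidence >= threshold]


if __name__ == "__main__":
    detections = [
        Detection("person", 0.35),
        Detection("person", 0.55),
        Detection("person", 0.90),
    ]
    for level in ("high", "default", "low"):
        kept = filter_detections(detections, level)
        print(level, [d.confidence for d in kept])
```

High sensitivity corresponds to a lower threshold, so more candidates pass (fewer misses, more false positives). Low sensitivity keeps only the most confident detections.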

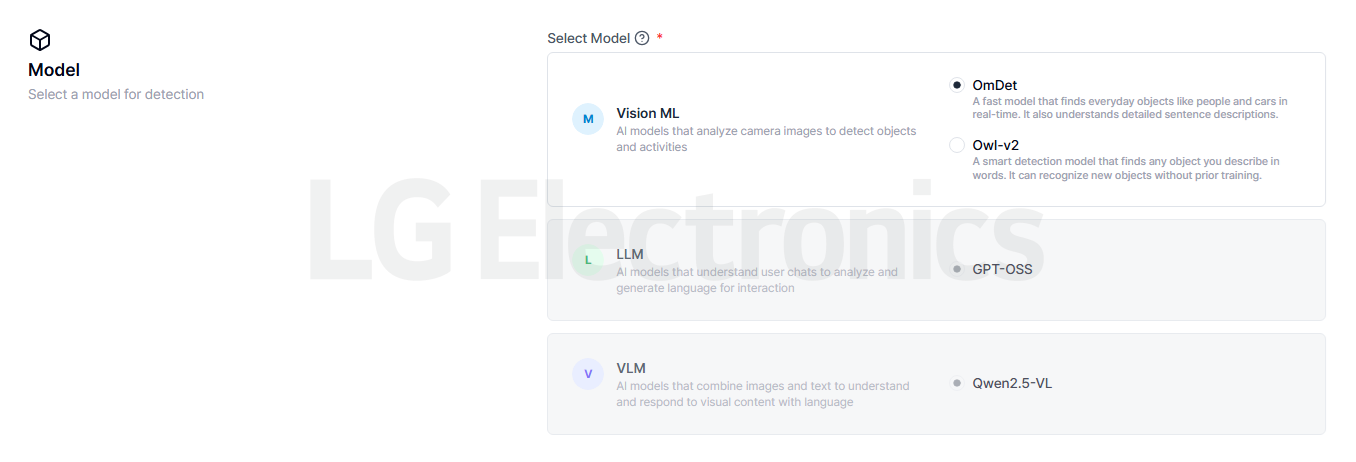

Model - Select AI Models

Step Description

EVA combines three types of AI models that work together for accurate detection and judgment.

- Roles of the Three AI Models

- Vision Model: Real-time object detection from video

- LLM: Understands and processes natural language instructions

- VLM: Combines vision and language to make final scenario judgments

- Model Selection Guidelines

- Accuracy priority: Complex scenarios, safety-critical

- Speed priority: Real-time response needed, limited resources

- First-time users: Use the recommended defaults (Vision Model: OmDet, LLM: Qwen3-VL, VLM: Qwen3-VL)

Actions

- Select Vision Model

- Detects people, vehicles, objects in real time

- Options: OmDet (fast), LLMDet (high accuracy), Owl-v2 (special objects)

- Recommended: Start with OmDet

- Select LLM Model

- Understands your natural language scenario

- Recommended: Qwen3-VL (current default)

- Select VLM Model

- Final judgment combining vision and scenario

- Recommended: Qwen3-VL (current default)
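
The selection guidance above can be summarized as a small lookup. This is only a convenience sketch: the model names come from this guide, while the `recommend_models` function and the priority keys are hypothetical and do not correspond to an EVA API.

```python
# Vision Model options as described in this guide.
VISION_MODELS = {
    "speed": "OmDet",             # fast; the recommended starting point
    "accuracy": "LLMDet",         # high accuracy
    "special_objects": "Owl-v2",  # special objects
}


def recommend_models(priority: str = "speed") -> dict[str, str]:
    """Return a model combination for the given priority (hypothetical helper)."""
    return {
        "vision": VISION_MODELS[priority],
        "llm": "Qwen3-VL",  # current default per this guide
        "vlm": "Qwen3-VL",  # current default per this guide
    }


if __name__ == "__main__":
    print(recommend_models())
    print(recommend_models("accuracy"))
```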

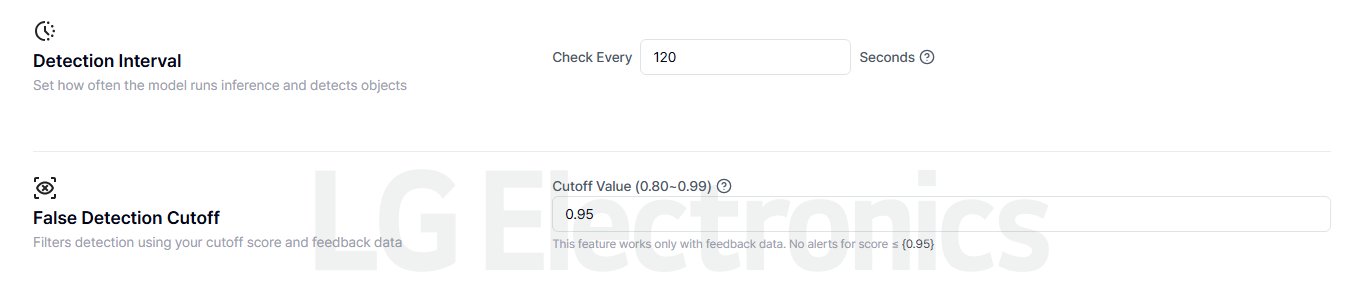

Detection Settings - Fine-Tune Detection Behavior

Step Description

Detection Settings control how often EVA analyzes video and how it filters false positives. These directly affect alert frequency and accuracy.

- Detection Interval

- How often (in seconds) EVA analyzes a frame

- Short (30–60s): High real-time performance, faster detection, higher resource use

- Long (180–240s): Resource-efficient, focuses on major events, may miss brief incidents

- False Detection Cutoff

- Automatically filters based on your false positive feedback

- Images similar to reported false positives are suppressed

- The more feedback you give, the smarter it gets
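
To make the Detection Interval trade-off concrete, the arithmetic below estimates how many analyses run per hour and how likely a brief event is to be caught. It assumes exactly one frame is analyzed per interval and that the event starts at a random moment. Both are simplifying assumptions, and the function names are hypothetical.

```python
def analyses_per_hour(interval_seconds: int) -> float:
    """Number of frame analyses in one hour at the given interval."""
    return 3600 / interval_seconds


def catch_probability(event_seconds: float, interval_seconds: int) -> float:
    """Chance that an event lasting `event_seconds` overlaps at least one
    analyzed frame, assuming one frame per interval and a random start time."""
    return min(1.0, event_seconds / interval_seconds)


if __name__ == "__main__":
    for interval in (30, 60, 120, 180, 240):
        print(
            f"{interval:>3}s interval: "
            f"{analyses_per_hour(interval):.0f} analyses/hour, "
            f"10s event caught with probability "
            f"{catch_probability(10, interval):.2f}"
        )
```

Under these assumptions a 30-second interval yields 120 analyses per hour and a 240-second interval yields 15. Any event that lasts at least as long as the interval is always sampled at least once.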

Actions

- Set Detection Interval

- Set how many seconds between analyses

- Example: a value of 30 means EVA analyzes one frame every 30 seconds

- Start with 120 seconds and adjust based on alert volume

- Set False Detection Cutoff

- Higher value = fewer false positives (may miss some true positives)

- Lower value = less filtering (more detections kept, more false positives)
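
One way to picture how a cutoff could interact with false positive feedback is a similarity check against images you have already reported. The sketch below is purely illustrative and is not EVA's actual algorithm: it assumes each image is represented by a feature vector, compares vectors by cosine similarity, and assumes the cutoff is a value between 0 and 1 where a higher value suppresses more. All names are hypothetical.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine similarity of two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def is_suppressed(candidate: list[float],
                  reported_false_positives: list[list[float]],
                  cutoff: float) -> bool:
    """Suppress a candidate alert if it is similar enough to any reported
    false positive. Assumption: a higher cutoff lowers the similarity
    required for suppression, so more alerts are filtered out."""
    required_similarity = 1.0 - cutoff
    return any(
        cosine_similarity(candidate, fp) >= required_similarity
        for fp in reported_false_positives
    )


if __name__ == "__main__":
    reported = [[1.0, 0.0]]   # feature vector of one reported false positive
    candidate = [0.8, 0.6]    # cosine similarity to the report is about 0.8
    print(is_suppressed(candidate, reported, cutoff=0.1))
    print(is_suppressed(candidate, reported, cutoff=0.3))
```

In this model, raising the cutoff filters more aggressively, which matches the guidance above: fewer false positives, at the risk of hiding some true detections that resemble earlier reports.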

Save & Verify

Step Description

Once all settings are complete, click Save to register the camera in EVA. You can always edit settings later.

- After Saving

- View live video on camera detail page

- Turn monitoring On/Off

- Edit detection scenarios

- Review detections and give feedback

Actions

- Click Save

- Click the "Save" button at the bottom of the page

- All settings are saved and you’re redirected to Camera List

- Verify in Camera List

- Confirm your new camera appears

- Check name, status, and registration time

- Click the camera name to open its detail page

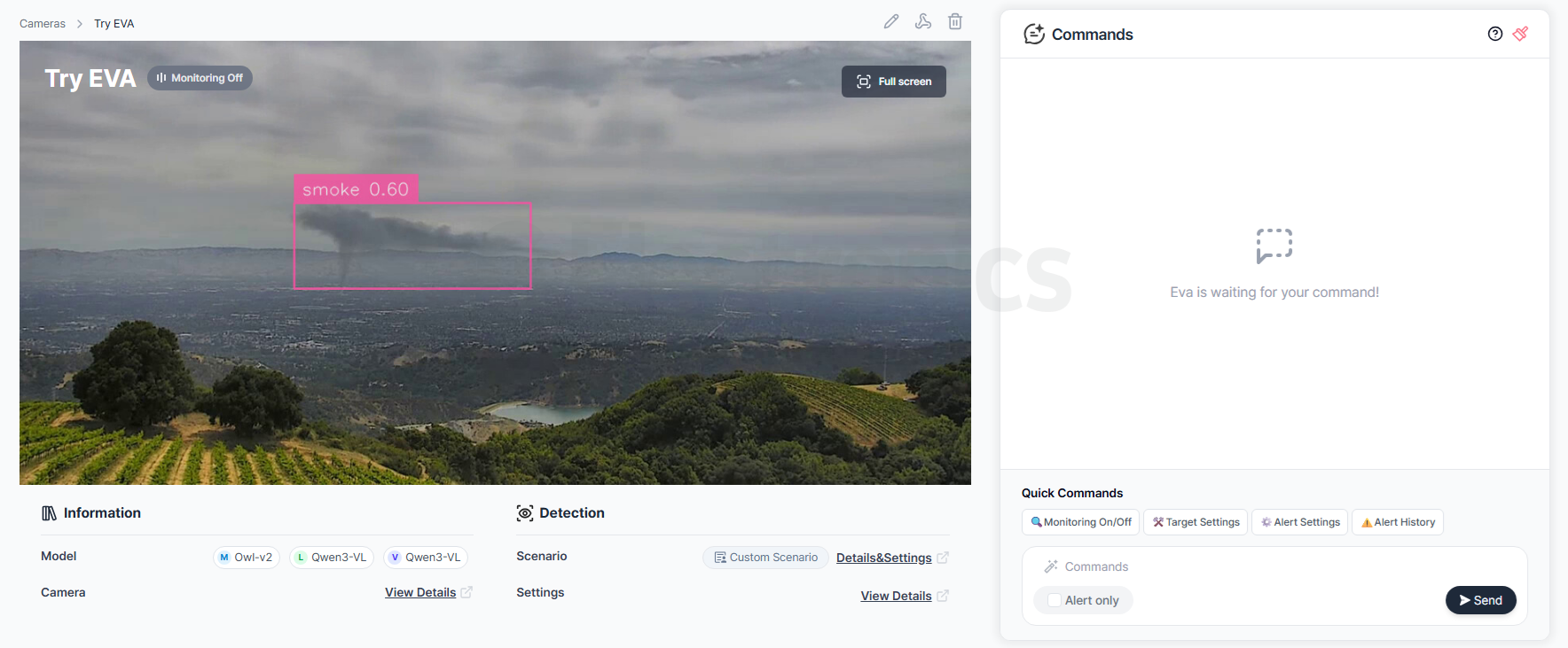

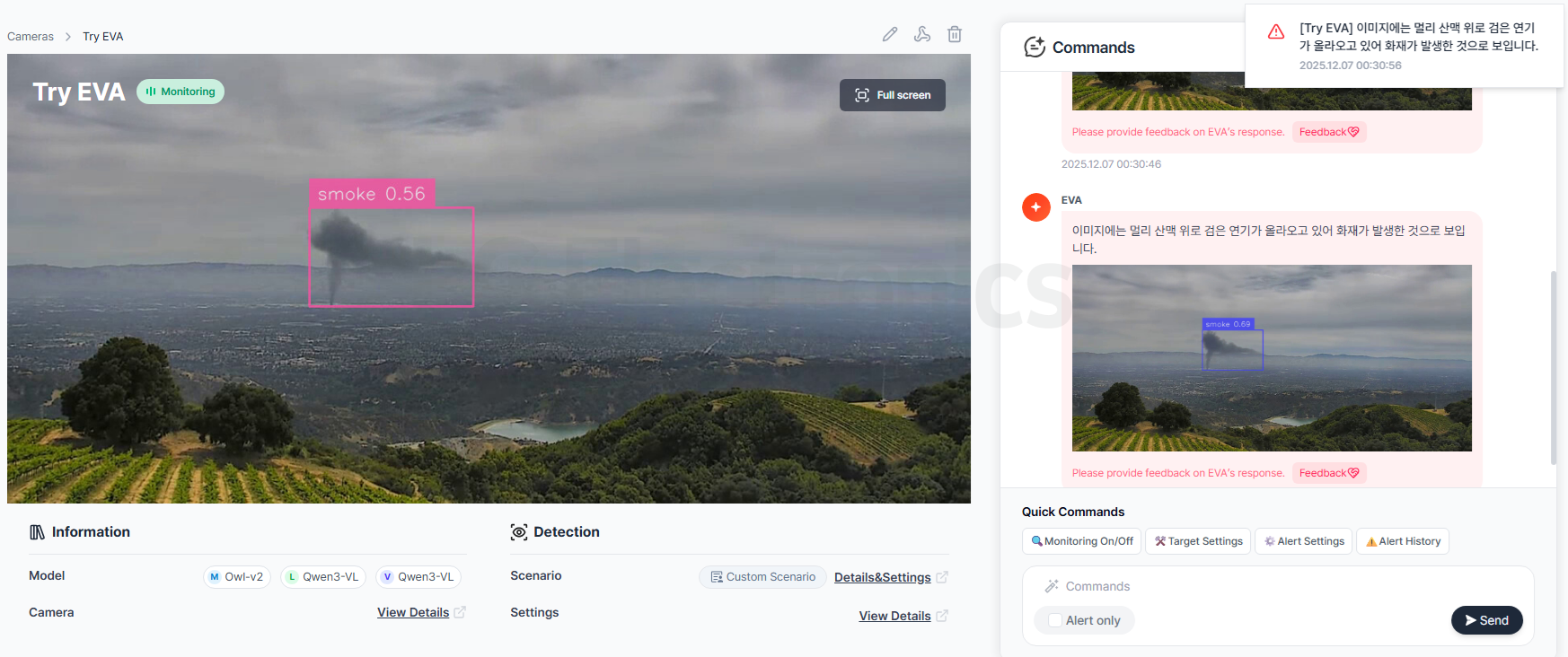

Start Monitoring

Step Description

Now start monitoring — EVA will analyze video in real time and send alerts when your scenario conditions are met.

- How Monitoring Works When On

- Analyzes frames at your set interval

- Vision Model detects objects → VLM judges scenario

- Matching events appear in the Commands panel

- If a webhook is enabled, alerts are also sent to Teams/Slack (see Advanced Guide)

- Where to See Alerts

- Commands panel: Real-time messages + detection images

- Alert history: Search past alerts

- Webhook: Real-time delivery to Teams/Slack
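
The two-stage flow described above (Vision Model detects objects, then the VLM judges the scenario) can be sketched as a simple loop. This is a conceptual illustration, not EVA's implementation. `run_monitoring`, `fake_detector`, and `fake_judge` are hypothetical stand-ins, and skipping the VLM when nothing is detected is an assumption.

```python
from typing import Any, Callable, Iterable


def run_monitoring(
    frames: Iterable[tuple[int, Any]],
    detect_objects: Callable[[Any], list[str]],
    judge_scenario: Callable[[Any, list[str]], bool],
) -> list[dict]:
    """Process (timestamp, frame) pairs sampled at the detection interval."""
    alerts = []
    for timestamp, frame in frames:
        objects = detect_objects(frame)    # stage 1: Vision Model
        if not objects:
            continue                       # assumption: no objects, no VLM call
        if judge_scenario(frame, objects): # stage 2: VLM scenario judgment
            alerts.append({"time": timestamp, "objects": objects})
    return alerts


# Stand-in stubs so the sketch runs without any real models.
def fake_detector(frame: dict) -> list[str]:
    return frame["objects"]


def fake_judge(frame: dict, objects: list[str]) -> bool:
    # Scenario: "Alert me if someone is not wearing a hard hat"
    return "person" in objects and "hard hat" not in objects


if __name__ == "__main__":
    frames = [
        (0, {"objects": []}),
        (120, {"objects": ["person", "hard hat"]}),
        (240, {"objects": ["person"]}),
    ]
    print(run_monitoring(frames, fake_detector, fake_judge))
```

With the sample frames, only the frame at 240 seconds produces an alert, because it contains a person without a hard hat.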

Actions

- Turn Monitoring On

- Go to the camera detail page

- Click "Monitoring On/Off" in Quick Commands

- Or type "start monitoring" or "monitoring on" in the Commands box

- Confirm that "Monitoring ON" appears at the top-left of the Live Video Stream

- View Detection Results

- Alerts appear in Commands when your scenario triggers

- Each alert includes time, image, and description

- Watch Live Video

- Detected objects are highlighted with colored bounding boxes

- Confidence scores (0.0–1.0) are shown next to the boxes

- Click Full Screen for enlarged view
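
If you forward alerts to Slack yourself, the time, image, and description in each alert map naturally onto a Slack incoming-webhook message, which accepts a JSON body with a `text` field. The sketch below is an assumption-laden illustration: the alert dictionary's keys (`time`, `camera`, `description`, `image_url`) and both function names are hypothetical, and EVA's own webhook configuration is covered in the Advanced Guide.

```python
import json
import urllib.request


def slack_payload(alert: dict) -> dict:
    """Format an alert (hypothetical keys) as a Slack incoming-webhook body."""
    text = (
        f"[{alert['time']}] {alert['camera']}: {alert['description']}\n"
        f"{alert['image_url']}"
    )
    return {"text": text}


def post_to_slack(webhook_url: str, alert: dict) -> int:
    """POST the alert to a Slack incoming webhook and return the HTTP status."""
    data = json.dumps(slack_payload(alert)).encode("utf-8")
    request = urllib.request.Request(
        webhook_url,
        data=data,  # providing data makes this a POST request
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.status


if __name__ == "__main__":
    example_alert = {
        "time": "2025-01-01 09:30:00",
        "camera": "1F lobby main entrance",
        "description": "Person detected without a hard hat",
        "image_url": "https://example.com/alert.jpg",
    }
    print(json.dumps(slack_payload(example_alert), indent=2))
```

The example prints the payload without sending it. Calling `post_to_slack` requires a real webhook URL from your own Slack workspace.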