Data Security with AI

Building an MLOps Platform with Data Security in Mind

Artificial intelligence (AI) is driving transformative change across industries, but data security remains a persistent challenge during implementation. Enterprises often handle sensitive information, and the risk of data leaks or misuse makes many organizations hesitant to adopt AI technologies.

However, there is a solution to these concerns: the Mellerikat MLOps platform. Mellerikat combines data security with AI technology to enable enterprises to innovate safely. It minimizes outgoing data and uses only necessary data to operate AI models.

Mellerikat’s approach prioritizes data security while opening up diverse possibilities for enterprises to leverage AI technologies to enhance their competitiveness.

This article explores why data security is critical when adopting AI and how Mellerikat addresses these concerns. We will highlight the strengths of Mellerikat in delivering optimized AI solutions while maintaining data security and discuss the positive impact this can have on your business.

Optimized Service Operations with Private Networks

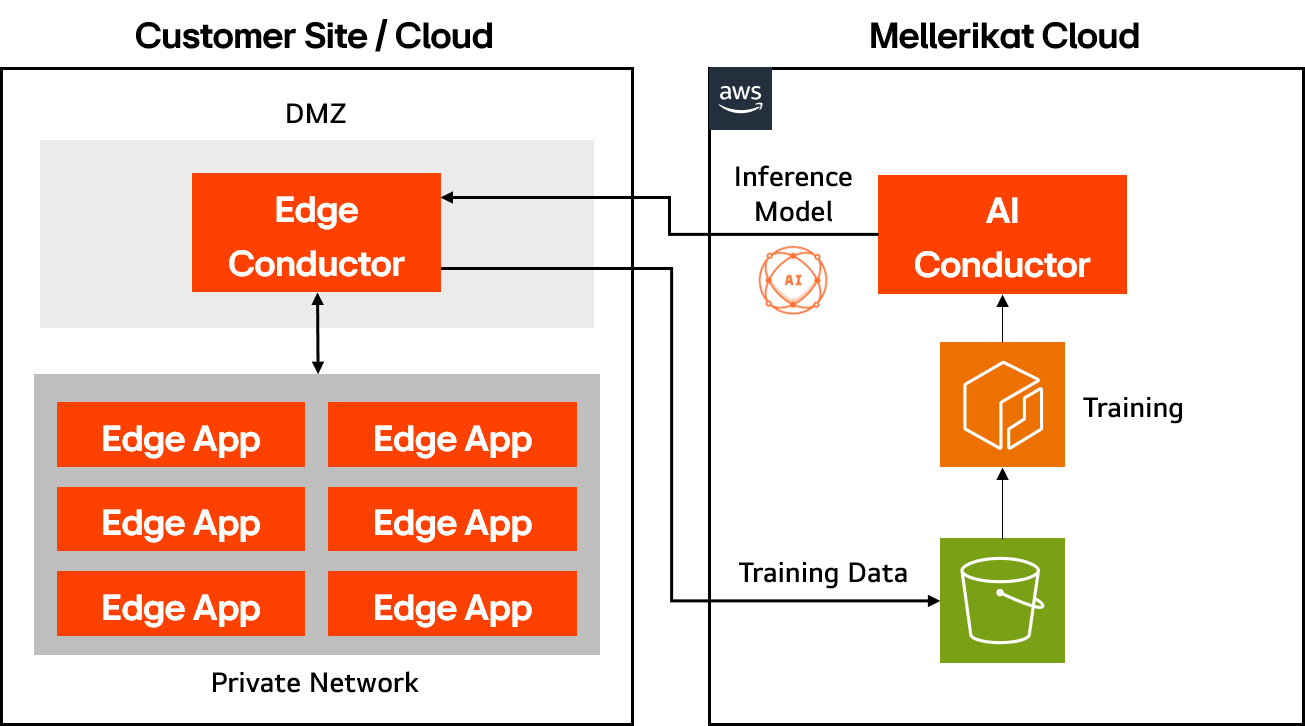

Many enterprises are concerned about transmitting data from private network environments to external systems. To address this, Mellerikat offers the following optimized service models:

- Edge App for Model Servicing: Installed within the customer’s private network where data resides, enabling immediate data access.

- Edge Conductor: Deployed on-site, where it communicates with the private network and connects to external systems through a DMZ.

Because both the Edge Conductor and the Edge App are installed on-site, AI models can run without data leaving the premises during operation.
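
The connectivity rules described above can be sketched as a small model. This is only an illustration under stated assumptions: the `Component` class, its fields, and the `may_connect` function are hypothetical names, not part of Mellerikat, and the AI Conductor is assumed to sit outside the premises.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Component:
    # Hypothetical model of a platform component; not a Mellerikat API.
    name: str
    on_site: bool          # installed inside the customer's premises
    external_access: bool  # may reach external systems (for on-site parts, via the DMZ)

EDGE_APP = Component("Edge App", on_site=True, external_access=False)
EDGE_CONDUCTOR = Component("Edge Conductor", on_site=True, external_access=True)
# Assumption: the AI Conductor runs outside the premises.
AI_CONDUCTOR = Component("AI Conductor", on_site=False, external_access=True)

def may_connect(a: Component, b: Component) -> bool:
    """Return True if a connection between a and b is allowed."""
    if a.on_site == b.on_site:
        return True  # traffic does not cross the premises boundary
    # Crossing the boundary requires the on-site party to have DMZ access.
    on_site_party = a if a.on_site else b
    return on_site_party.external_access

print(may_connect(EDGE_APP, EDGE_CONDUCTOR))      # stays on-site
print(may_connect(EDGE_APP, AI_CONDUCTOR))        # Edge App has no external path
print(may_connect(EDGE_CONDUCTOR, AI_CONDUCTOR))  # goes through the DMZ
```

In this sketch the Edge App never talks to anything outside the premises; only the Edge Conductor crosses the boundary.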

Secure Data Training and Inference Processes

For model training, only selected datasets are transmitted from the Edge Conductor to external systems, where they can be used for periodic training. Once training is complete, the AI Conductor provides the inference model file to the Edge Conductor. The only data sent to the Edge Conductor is this model file, and the cloud copy of the training data can be deleted afterward so that it is retained only temporarily.
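
As a rough illustration of this flow, the following sketch simulates one training round in plain Python. The `CloudTrainer` class, the `training_round` function, and the `share` flag on each record are hypothetical and stand in for whatever selection and transfer mechanism the platform actually uses.

```python
from dataclasses import dataclass, field

@dataclass
class CloudTrainer:
    """Hypothetical stand-in for the external training side."""
    staged: list = field(default_factory=list)

    def train(self, records) -> bytes:
        self.staged = list(records)  # temporary cloud copy of the selected data
        # Placeholder for real training: the "model file" only records the data size.
        model_file = f"model-trained-on-{len(self.staged)}-records.bin".encode()
        self.staged.clear()          # delete the cloud copy once training is done
        return model_file

def training_round(on_site_records, is_selected, trainer):
    """Send only selected records out; receive only a model file back."""
    selected = [r for r in on_site_records if is_selected(r)]
    model_file = trainer.train(selected)
    return model_file, len(selected)

records = [
    {"id": 1, "share": True},
    {"id": 2, "share": False},  # never leaves the premises
    {"id": 3, "share": True},
]
trainer = CloudTrainer()
model_file, sent = training_round(records, lambda r: r["share"], trainer)
print(sent, model_file, trainer.staged)
```

The point of the sketch is the shape of the exchange: a filtered subset goes out, a single model file comes back, and nothing remains staged on the training side.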

Additionally, training data can be registered together with an AI solution, so the model can be deployed and operated without sending any data from the Edge Conductor to external systems. Alternatively, solutions that perform both training and inference within the Edge App can be deployed to operate the model.

Services Across Diverse Environments

AI Model Services in Multi-Cloud Environments

Enterprises store data across various cloud platforms such as AWS, GCP, and Azure. To use AI models while maintaining data security, each model must run within the cloud where its data resides. Installing the Edge App on each cloud's computing resources and, if needed, deploying the Edge Conductor makes this possible.

AI Model Services on On-Premises Servers

For enterprises with policies that prohibit storing data in the cloud, data can be maintained in on-premises data centers or servers. In such cases, the Edge App and Edge Conductor can be installed on these servers to leverage AI models.

AI Model Services in Factories/Local Computers

In environments like factories, AI models run on computers connected to production lines for tasks such as inspection or predictive maintenance. These computers typically operate within isolated factory networks for security. By installing the Edge App on these computers and deploying the Edge Conductor on an on-site server, AI models can be serviced. Dozens of computers can run Edge Apps for real-time inference, while the Edge Conductor continuously operates models that learn from on-site changes.
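
The one-to-many relationship described here can be sketched as follows. The `EdgeApp` and `EdgeConductor` classes below are simplified, hypothetical stand-ins that only track model versions; they do not reflect the real components' interfaces.

```python
class EdgeApp:
    """Hypothetical inference client running on a production-line computer."""
    def __init__(self, host: str):
        self.host = host
        self.model_version = None

    def load(self, version: int) -> None:
        self.model_version = version

class EdgeConductor:
    """Hypothetical on-site server that distributes models to Edge Apps."""
    def __init__(self, apps):
        self.apps = list(apps)
        self.version = 0

    def publish(self) -> int:
        # Bump the model version and push it to every registered Edge App.
        self.version += 1
        for app in self.apps:
            app.load(self.version)
        return self.version

# Assumption for illustration: 24 line computers on one isolated network.
apps = [EdgeApp(f"line-pc-{i:02d}") for i in range(24)]
conductor = EdgeConductor(apps)
conductor.publish()  # initial model
conductor.publish()  # retrained model after on-site changes
print({app.model_version for app in apps})
```

Each retraining cycle reaches every line computer through the on-site server, so no individual machine needs a path outside the factory network.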

Inside Mellerikat

Mellerikat’s MLOps platform consists of the AI Conductor, which manages solutions and oversees AI model training, and the Edge Conductor and Edge App, which provide model management and inference services. This structure enables model servicing across diverse environments while maintaining data security. Unlock new possibilities with Mellerikat’s platform.

For more details on secure services, refer to this link.