Meta-Intelligence of LLM Observability

To effectively implement LLM services, a robust LLMOps framework is essential. Among its components, observability (o11y) has evolved beyond simple monitoring to become a critical enabler of the system’s meta-intelligence.

The Evolution of o11y into Meta-Intelligence

Early LLM o11y focused on collecting metrics such as token usage, response time, response content, and user feedback to monitor performance. We first adopted LangSmith, a commercial tool, to monitor how our AI logic executes. We later integrated Langfuse, an open-source tool, so that our organization can choose either tool depending on licensing requirements.
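To make the metrics listed above concrete, here is a minimal sketch of a per-call trace record and a batch summary. The `TraceRecord` schema and the `summarize` helper are hypothetical names chosen for illustration. They are not LangSmith or Langfuse types, and the feedback encoding (+1 / -1) is an assumption.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List, Optional


@dataclass
class TraceRecord:
    """One logged LLM call (hypothetical schema, not a LangSmith/Langfuse type)."""
    trace_id: str
    prompt_tokens: int
    completion_tokens: int
    latency_ms: float
    response: str
    feedback: Optional[int] = None  # assumed encoding: +1 up, -1 down, None if unrated


def summarize(records: List[TraceRecord]) -> Dict[str, float]:
    """Aggregate token usage, response time, and user feedback over a batch."""
    if not records:
        raise ValueError("no records to summarize")
    rated = [r.feedback for r in records if r.feedback is not None]
    positive = sum(1 for f in rated if f > 0)
    return {
        "total_tokens": float(
            sum(r.prompt_tokens + r.completion_tokens for r in records)
        ),
        "mean_latency_ms": mean(r.latency_ms for r in records),
        # Share of rated traces with positive feedback; 0.0 when nothing is rated.
        "positive_feedback_rate": positive / len(rated) if rated else 0.0,
    }
```

A summary like this is enough for dashboards, but it discards per-trace context. That limitation is what motivates the shift described next.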

However, as the number of AI Agent service users grew, it became clear that simple log analysis could no longer extract meaningful insights from the accumulated data. Consequently, we decided to transform o11y data from mere "observation logs" into a meta-intelligence tool. This system uses AI Agent outputs and user feedback to automatically reformulate questions or to improve response quality by adjusting model behavior.

In essence, o11y data transcends real-time performance monitoring to become the cornerstone of a feedback loop that enables AI Agents to self-improve.

In the research community, this approach aligns with the growing interest in AgentOps and in observability for agentic AI. Recent proposals describe comprehensive observability frameworks for AgentOps that track artifacts such as execution paths, internal logic, tool calls, and planning stages. They increasingly emphasize going beyond black-box evaluation to infer and optimize behavioral patterns from agent execution logs.

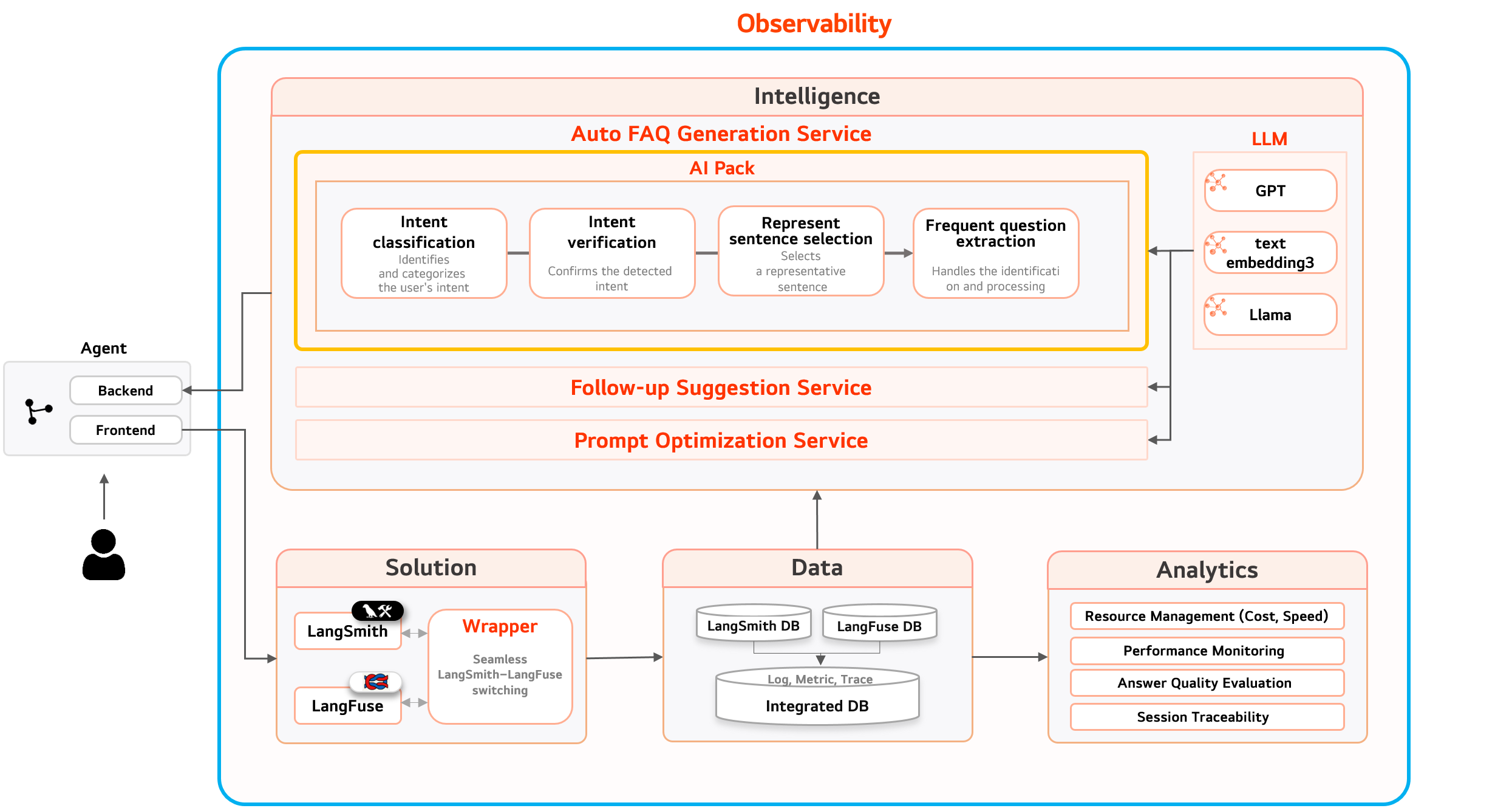

Mellerikat’s ‘Meta-Intelligence o11y’ Model Architecture

Our o11y meta-intelligence strategy is structured as follows:

- Trace-Based Execution Logging: Using LangSmith and Langfuse, we capture LLM calls, internal planning, token usage, responses, and feedback at the trace session level.
- Real-Time Anomaly Detection and Feedback Loop: When issues such as high latency or hallucinations are detected, alerts and automated reports are generated immediately, and an automatic tagging system is triggered based on user feedback.
- Meta-Layer Inference Engine: Based on the collected data, the system provides intelligent suggestions, such as "Which questions are more appropriate?" or "How should responses be restructured for better effectiveness?"
- Automated Prompt Tuning and Deployment: The meta-intelligence engine automatically refines prompts, and the updated logic is applied in real time through a CI/CD pipeline.
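The anomaly detection and tagging step above can be sketched as follows. This is an illustrative example only: it flags latency outliers with a simple z-score, which is a technique swapped in here and not necessarily what Mellerikat uses. The function names, tag strings, and threshold are hypothetical. Hallucination detection would need a separate evaluator and is not covered.

```python
from statistics import mean, pstdev
from typing import List, Optional


def latency_anomalies(latencies_ms: List[float], z_threshold: float = 3.0) -> List[int]:
    """Return indices whose latency lies more than z_threshold
    population standard deviations above the batch mean."""
    if len(latencies_ms) < 2:
        return []
    mu = mean(latencies_ms)
    sigma = pstdev(latencies_ms)
    if sigma == 0:
        return []  # all latencies identical, nothing stands out
    return [i for i, x in enumerate(latencies_ms) if (x - mu) / sigma > z_threshold]


def tag_trace(latency_flagged: bool, feedback: Optional[int]) -> List[str]:
    """Attach tags to a trace (hypothetical tag names).

    feedback uses an assumed encoding: +1 up, -1 down, None if unrated.
    """
    tags = []
    if latency_flagged:
        tags.append("slow-response")
    if feedback is not None and feedback < 0:
        tags.append("negative-feedback")
    return tags
```

With small batches the largest achievable z-score is limited, so a lower `z_threshold` (or a rolling window over many traces) is likely needed in practice.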

This architecture moves beyond manual tuning of LLM logic, aiming for meta-intelligence where the system continuously proposes and implements improvements.
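One way to picture the "propose and implement" loop is a gate that promotes a refined prompt only when feedback data supports it. The sketch below is a simplified assumption, not the actual Mellerikat engine: `PromptStats`, `choose_prompt`, and the `min_samples` and `min_gain` thresholds are all hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PromptStats:
    """Feedback counts collected for one prompt version (hypothetical schema)."""
    name: str
    positive: int
    total: int

    @property
    def rate(self) -> float:
        # Positive-feedback rate; 0.0 when no feedback has been collected.
        return self.positive / self.total if self.total else 0.0


def choose_prompt(
    current: PromptStats,
    candidates: List[PromptStats],
    min_samples: int = 50,
    min_gain: float = 0.05,
) -> str:
    """Return the name of the prompt version to deploy.

    A candidate replaces the current prompt only if it has enough feedback
    samples and beats the current positive rate by at least min_gain.
    """
    eligible = [
        c for c in candidates
        if c.total >= min_samples and c.rate >= current.rate + min_gain
    ]
    if not eligible:
        return current.name
    return max(eligible, key=lambda c: c.rate).name
```

In a CI/CD setting, the returned name could select which prompt version a deployment job publishes. The sample-size and gain thresholds keep a handful of lucky ratings from triggering a rollout.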

Today, LLM-based AI Agents are evolving from mere response generators into agentic AI capable of autonomous reasoning, planning, and execution. In this context, o11y goes beyond monitoring to become the core infrastructure for self-learning meta-intelligence. Ultimately, o11y is the key to moving past basic observability toward a paradigm in which AI Agents continuously evolve, making it possible to run Agent services that keep learning over time.

For more details on Mellerikat o11y, see: Mellerikat o11y Architecture