Edge App

What is Edge App?

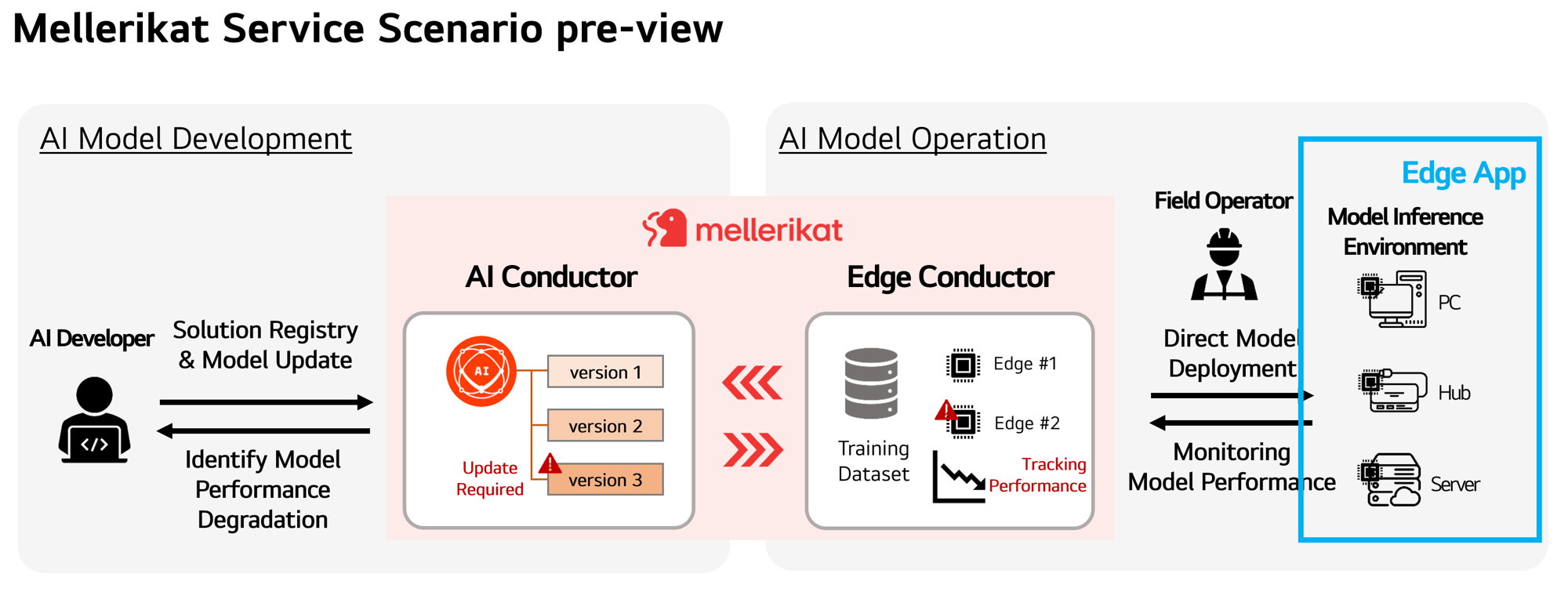

Edge App is an application installed on devices to run inference with AI models. It lets users run AI models directly on devices such as smartphones, IoT devices, and servers, enabling real-time tasks such as prediction and classification.

Key Features

Let's explore the core features of Edge App:

Perform AI Model Inference

Edge App executes AI model inference on the installed device. This means the model processes input data to perform tasks like prediction or classification. By running inference locally, Edge App enables real-time responsiveness on the device.

Data Collection and Validation

Edge App collects the data needed for inference from the device and validates it so that the model can produce accurate results. Model performance depends on high-quality, reliable data, which makes this step essential.
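As an illustration of what validating input before inference can look like, here is a minimal Python sketch. It is not Edge App's actual implementation: `ValidationResult`, `validate_reading`, and the specific checks (length, finiteness, value range) are assumptions chosen for the example.

```python
import math
from dataclasses import dataclass
from typing import Sequence


@dataclass
class ValidationResult:
    ok: bool
    reason: str = ""


def validate_reading(values: Sequence[float], expected_len: int,
                     low: float, high: float) -> ValidationResult:
    """Check one input sample before it is passed to the model (hypothetical helper)."""
    # The model expects a fixed number of input values.
    if len(values) != expected_len:
        return ValidationResult(False, f"expected {expected_len} values, got {len(values)}")
    for i, v in enumerate(values):
        # Reject NaN and infinity, which usually indicate a sensor or parsing fault.
        if math.isnan(v) or math.isinf(v):
            return ValidationResult(False, f"value {i} is not finite")
        # Reject values outside the range the model was trained on.
        if not low <= v <= high:
            return ValidationResult(False, f"value {i} out of range [{low}, {high}]")
    return ValidationResult(True)
```

A sample that fails validation can be skipped or logged with its `reason` instead of being sent to the model.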

Error Detection and Handling

Edge App detects and handles errors that occur during inference. This is vital for keeping the model stable and reliable in real-world environments. Detected errors are resolved promptly so that inference continues without interruption.
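One common way to keep an inference loop running when a single call fails is to catch the error, log it, and retry. The Python sketch below shows that pattern under stated assumptions: `safe_infer`, the logger name, and the retry policy are hypothetical and are not taken from Edge App itself.

```python
import logging
from typing import Any, Callable, Optional

logger = logging.getLogger("edge_app_sketch")  # hypothetical logger name


def safe_infer(model: Callable[[Any], Any], sample: Any,
               retries: int = 1) -> Optional[Any]:
    """Run model(sample); retry on failure and return None if every attempt fails."""
    for attempt in range(retries + 1):
        try:
            return model(sample)
        except Exception as exc:  # broad on purpose: one bad sample must not stop the loop
            logger.warning("inference attempt %d failed: %s", attempt + 1, exc)
    return None
```

Returning `None` rather than raising lets the caller decide whether to drop the sample, queue it for later, or report it.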

Data Transmission and Centralized Management

Inference results and other important information are transmitted to Edge Conductor, which manages communication and data flow across multiple devices. Managing results centrally keeps them consistent across devices, promotes collaboration between devices, and supports further analysis.
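This document does not describe the interface between Edge App and Edge Conductor, so the following Python sketch only illustrates the general idea of batching inference results into one serialized message. The `InferenceRecord` fields and the JSON layout are assumptions made for the example, not the real schema.

```python
import json
from dataclasses import asdict, dataclass
from typing import List


@dataclass
class InferenceRecord:
    # All field names are hypothetical; the real schema may differ.
    device_id: str
    model_version: str
    label: str
    score: float
    timestamp: float  # seconds since the Unix epoch


def to_payload(records: List[InferenceRecord]) -> str:
    """Serialize a batch of records into a single JSON document."""
    return json.dumps({
        "count": len(records),
        "results": [asdict(r) for r in records],
    })
```

Including the device and model version with each result is what would allow a central service to compare outcomes across devices.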

Model Performance Monitoring and Retraining

Edge App monitors inference outcomes and helps identify performance issues. When needed, data can be relabeled to improve model quality. Collected results can be used to build new training datasets, retrain the AI model, and deploy the updated version back to the device through Edge App.
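A simple way to picture performance monitoring is a rolling window over the model's confidence scores, with low-confidence samples set aside for relabeling. The Python sketch below assumes the model reports a confidence score per prediction; `ConfidenceMonitor`, the window size, and the threshold are hypothetical choices, not Edge App's actual mechanism.

```python
from collections import deque
from typing import Deque, List


class ConfidenceMonitor:
    """Track recent confidence scores and collect candidates for relabeling."""

    def __init__(self, window: int = 100, threshold: float = 0.6):
        self.scores: Deque[float] = deque(maxlen=window)  # oldest scores drop off automatically
        self.threshold = threshold
        self.relabel_queue: List[str] = []

    def record(self, sample_id: str, score: float) -> None:
        self.scores.append(score)
        # Low-confidence predictions are the most useful ones to relabel.
        if score < self.threshold:
            self.relabel_queue.append(sample_id)

    def mean_score(self) -> float:
        return sum(self.scores) / len(self.scores) if self.scores else 0.0

    def degraded(self) -> bool:
        """True once the window is full and its mean score is below the threshold."""
        return len(self.scores) == self.scores.maxlen and self.mean_score() < self.threshold
```

When `degraded()` returns true, the samples in `relabel_queue` are natural candidates for a new training dataset.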

User Scenario

Typical usage scenarios for Edge App include:

- Installation and Configuration: Install Edge App on various devices where AI models will be used (e.g., smartphones, IoT devices, servers). During setup, configuration settings must include connection details for Edge Conductor.
- Perform Inference: Edge App runs the AI model's inference functionality on the installed device, processing input data in real time for prediction and classification tasks.
- Data Collection and Validation: Collects input data from the device and validates it to ensure the model can generate accurate results.
- Error Detection and Handling: Detects and handles errors that may occur during inference, ensuring model stability and operational reliability.
- Transmit Results: Sends inference results and key information to Edge Conductor. Edge Conductor manages communication and data flow between devices and centralizes result management.
- Performance Monitoring: Monitors inference results to detect any performance degradation. If needed, data can be relabeled to improve model quality.
- Retraining and Redeployment: Uses inference results to create a new training dataset, retrains the model, and redeploys the updated version to the device via Edge App.
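The middle steps of this scenario (validate, infer, handle errors, transmit) can be sketched as a single processing cycle. This Python example is only an outline under stated assumptions: `run_cycle` and its callable parameters are hypothetical, and `send` stands in for whatever mechanism actually delivers results to Edge Conductor.

```python
from typing import Any, Callable, Dict, Iterable, List


def run_cycle(samples: Iterable[Any],
              validate: Callable[[Any], bool],
              infer: Callable[[Any], Any],
              send: Callable[[List[Dict[str, Any]]], None]) -> Dict[str, int]:
    """Validate, infer, and forward results for one batch of samples."""
    results: List[Dict[str, Any]] = []
    skipped = 0
    failed = 0
    for sample in samples:
        # Data validation: drop samples the model cannot handle.
        if not validate(sample):
            skipped += 1
            continue
        # Error handling: a failing sample is counted, not fatal.
        try:
            results.append({"input": sample, "output": infer(sample)})
        except Exception:
            failed += 1
    # Transmission: forward the batch only if there is something to send.
    if results:
        send(results)
    return {"sent": len(results), "skipped": skipped, "failed": failed}
```

The returned counts give a basic signal for monitoring: a rising share of skipped or failed samples suggests a data or model problem worth investigating.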