Physical AI Implemented with EVA

When Can AI Intervene in the Real World?

Accidents in industrial environments happen without warning. A worker collapsing, an arm getting caught in machinery, or a fire breaking out can unfold within seconds.

Physical AI should not stop at recognizing these moments. It must be capable of translating perception into physical action on site.

In this post, we walk through a LEGO-based simulation to show how EVA detects incidents and how its decisions are connected to real equipment actions as a single, continuous flow.

Simplifying Industrial Scenarios with LEGO

Instead of replicating complex industrial environments in full detail, we simplified accident scenarios using LEGO.

We designed independent scenarios for:

- a worker collapsing,

- an arm being caught in equipment,

- and a fire breaking out.

Arm caught in equipment – conveyor belt stops and warning light activates

Worker collapse – warning light and buzzer activate

Fire detected – conveyor belt stops and warning light activates

EVA: Interpreting Situations as Events

What matters in this simulation is not simply detecting a person or recognizing flames.

EVA interprets each situation through predefined detection scenarios and evaluates them as meaningful events.

Below is the interface where detection scenarios are configured in EVA.

Detection immediately becomes a trigger condition for deciding the next action.

Physical Action Trigger: When AI Decisions Move Reality

When an event occurs, EVA’s role does not end at detection.

EVA transforms detected events into Physical Action Triggers, connecting them directly to on-site equipment and devices so they can respond immediately.

The key point is that each accident scenario is mapped to a predefined physical response. Without waiting for human intervention, AI decisions are translated directly into real-world actions.
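As a rough illustration, the scenario-to-response mapping can be pictured as a simple lookup table. The sketch below is not EVA's actual configuration format. The enum names and the `actions_for` helper are hypothetical, and the responses simply mirror the three LEGO scenarios described earlier.

```python
from enum import Enum


class Event(Enum):
    # Hypothetical event identifiers, one per LEGO scenario.
    ARM_CAUGHT = "arm_caught"
    WORKER_COLLAPSE = "worker_collapse"
    FIRE = "fire"


class Action(Enum):
    # Hypothetical equipment actions used in the simulation.
    STOP_CONVEYOR = "stop_conveyor"
    WARNING_LIGHT_ON = "warning_light_on"
    BUZZER_ON = "buzzer_on"


# Each accident scenario maps to a predefined physical response.
RESPONSES = {
    Event.ARM_CAUGHT: (Action.STOP_CONVEYOR, Action.WARNING_LIGHT_ON),
    Event.WORKER_COLLAPSE: (Action.WARNING_LIGHT_ON, Action.BUZZER_ON),
    Event.FIRE: (Action.STOP_CONVEYOR, Action.WARNING_LIGHT_ON),
}


def actions_for(event_name: str) -> tuple:
    """Return the predefined actions for an event; unknown events yield none."""
    try:
        return RESPONSES[Event(event_name)]
    except ValueError:
        return ()


if __name__ == "__main__":
    for name in ("arm_caught", "worker_collapse", "fire"):
        print(name, "->", [action.value for action in actions_for(name)])
```

Keeping the mapping as data rather than branching logic means a new scenario is a new table entry, not a new code path.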

Through this structure, AI judgments do not remain as on-screen alerts or logs. They become actions that actively change the state of the site.

Physical Action Triggers represent the point where AI moves beyond “what it sees” and executes what must change in the real world.

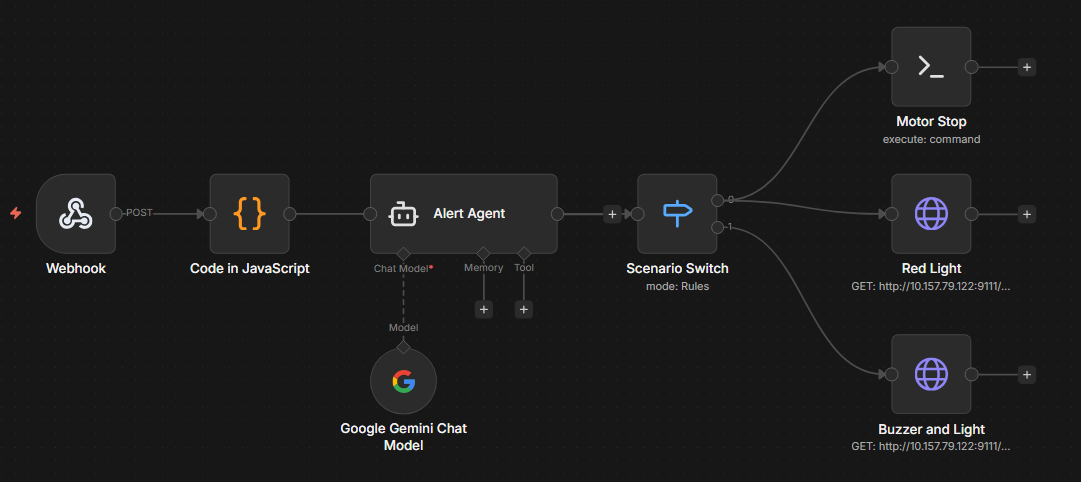

EVA → n8n → Equipment Control Workflow

These Physical Actions are not implemented with hardcoded logic. They are built using a workflow-based approach.

Detection events generated by EVA are delivered to n8n via Webhooks. Based on the severity and context of the event, an Agent within n8n sends the appropriate control signals to on-site equipment.
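To make the webhook hand-off concrete, here is a minimal sketch of how a detection event could be posted to an n8n Webhook node as JSON. The payload fields (`event_type`, `severity`, `camera_id`), the helper names, and the URL are all assumptions made for illustration. EVA's real payload schema and the webhook path in this setup are not described in this post.

```python
import json
import urllib.request

# Placeholder URL: the host, port, and path depend on how the
# n8n Webhook node is configured in a given deployment.
N8N_WEBHOOK_URL = "http://localhost:5678/webhook/eva-events"


def build_event_request(event_type, severity, camera_id, url=N8N_WEBHOOK_URL):
    """Build a JSON POST request describing one detection event.

    The field names are hypothetical, not EVA's actual schema.
    """
    payload = {
        "event_type": event_type,
        "severity": severity,
        "camera_id": camera_id,
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_event(request, timeout=5.0):
    """Send the request and return the HTTP status code."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status


if __name__ == "__main__":
    request = build_event_request("fire", "high", "cam-01")
    print(request.get_method(), request.full_url)
    print(request.data.decode("utf-8"))
    # send_event(request)  # requires a running n8n instance at the URL above
```

Including severity and context in the payload lets the receiving workflow decide which control signals to send without the sender knowing anything about the equipment.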

With this structure, even if equipment changes or scenarios expand, workflows can be reused and adapted flexibly.

A Structure That Makes Physical AI Tangible

This LEGO simulation does not replicate a real industrial site in full detail.

However, the structure, in which an incident occurs, AI perceives it, and a decision leads to a physical action, is identical to that of real-world environments.

EVA does not leave AI as a result on a screen. It enables AI to directly intervene in the physical world, realizing the concept of Physical AI.