Vision Data Solution Service

Try out a service that uses Vision data, built on the AI Solution created from the Vision Anomaly Detection AI Contents in the AI Solution tutorial.

Service Environment Setup

For a simple environment that provides Vision-based AI Solution services, connect a USB camera to a Windows PC and position it to photograph objects from a fixed angle.

Choose objects for which both normal and abnormal states are easy to produce, so that they can be used for data collection and inspection.

Note: If the surroundings change (for example, the camera's field of view or the lighting) or the object moves significantly, you will need to collect the data again, so keep the setup stable.
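One way to notice such changes early is to compare each new frame against a reference frame taken at setup time. The sketch below is not part of the Edge App. It assumes the frames have already been loaded as grayscale grids (lists of rows with values from 0 to 255) by an imaging library of your choice; the function names and the threshold of 10 are illustrative assumptions that would need tuning for a real scene.

```python
def mean_abs_difference(reference, current):
    """Mean absolute per-pixel difference between two grayscale frames.

    Both frames are lists of rows holding integer values in 0..255
    and must have identical dimensions.
    """
    same_shape = len(reference) == len(current) and all(
        len(r) == len(c) for r, c in zip(reference, current)
    )
    if not same_shape:
        raise ValueError("frames must have the same dimensions")
    pixels = sum(len(row) for row in reference)
    if pixels == 0:
        raise ValueError("frames must not be empty")
    total = sum(
        abs(a - b)
        for r, c in zip(reference, current)
        for a, b in zip(r, c)
    )
    return total / pixels


def scene_changed(reference, current, threshold=10.0):
    """True if the average difference exceeds the (hypothetical) threshold."""
    return mean_abs_difference(reference, current) > threshold


# Tiny 2x2 example: sensor noise versus a lighting change.
reference = [[100, 100], [100, 100]]
noisy = [[101, 99], [100, 100]]
brighter = [[140, 140], [140, 140]]
print(scene_changed(reference, noisy))
print(scene_changed(reference, brighter))
```

A global average like this reacts to lighting and camera shifts but can miss a small object displacement, so treat it as a coarse first check only.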

Windows PC

The PC on which the Edge App will be installed should meet the following specifications to operate the Vision Solution:

- CPU: i5

- Memory: 16GB

- OS: Windows 10

When installing the Edge App, set the DataInputPath to the path where the images captured by the USB camera are saved.

Reference Path: C:\Users\User\Pictures\Camera Roll
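Before installing, it can help to confirm that the folder exists and that the Camera application really saves images there. The following is a minimal sketch using only the Python standard library; the helper name `list_input_images` and the set of file extensions are assumptions for illustration, not an Edge App API.

```python
from pathlib import Path

# Extensions treated as images here; an assumption, not a documented Edge App list.
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png", ".bmp"}


def list_input_images(data_input_path):
    """Return image files found directly in data_input_path, sorted by path.

    Raises FileNotFoundError if the folder does not exist.
    """
    folder = Path(data_input_path)
    if not folder.is_dir():
        raise FileNotFoundError(f"DataInputPath not found: {folder}")
    return sorted(
        p for p in folder.iterdir()
        if p.is_file() and p.suffix.lower() in IMAGE_EXTENSIONS
    )


if __name__ == "__main__":
    # Reference path from this guide; replace "User" with your Windows account name.
    try:
        images = list_input_images(r"C:\Users\User\Pictures\Camera Roll")
        print(f"{len(images)} image(s) found")
    except FileNotFoundError as err:
        print(err)
```

Taking one test photo and rerunning the script should increase the count by one if the path is correct.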

USB Camera Equipment

Prepare a USB camera and connect it to the PC to collect image data. The following specifications are recommended for the camera; depending on the shooting environment or the object, a specialized camera configuration may be necessary.

- Camera Body: Supports shooting at up to 12MP resolution, set according to the target.

- Camera Lens: Adjust the focus with an 8-50mm telephoto lens depending on the distance from the object.

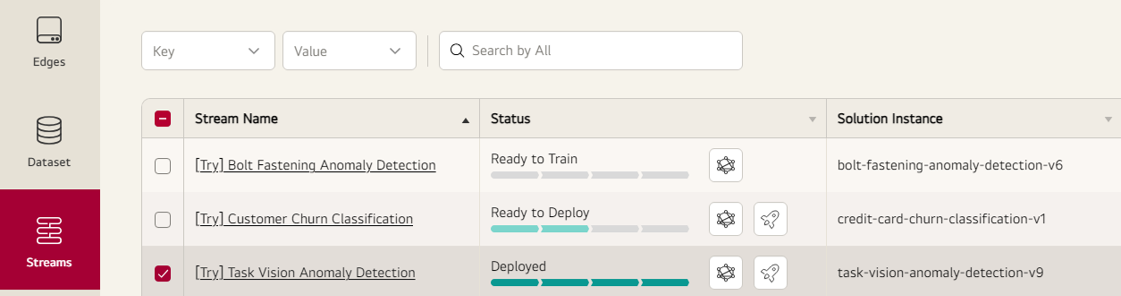

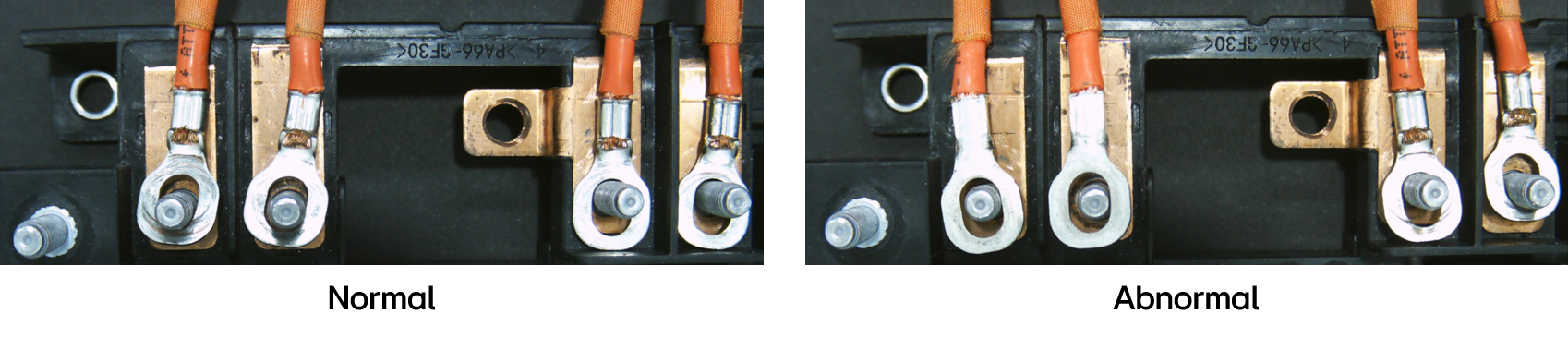

Selection of Shooting Objects

To operate the Vision Anomaly Detection AI Solution, select objects for which both normal and abnormal images can be collected. Vision Anomaly Detection performs inference with an AI model trained on mostly normal images and a few abnormal images, so the object should make it possible to collect a variety of normal images and to produce abnormal states easily for verification.

- Examples of Inspection Objects

  - Machine parts

  - Biscuits

  - Coffee capsules

AI Solution Operation

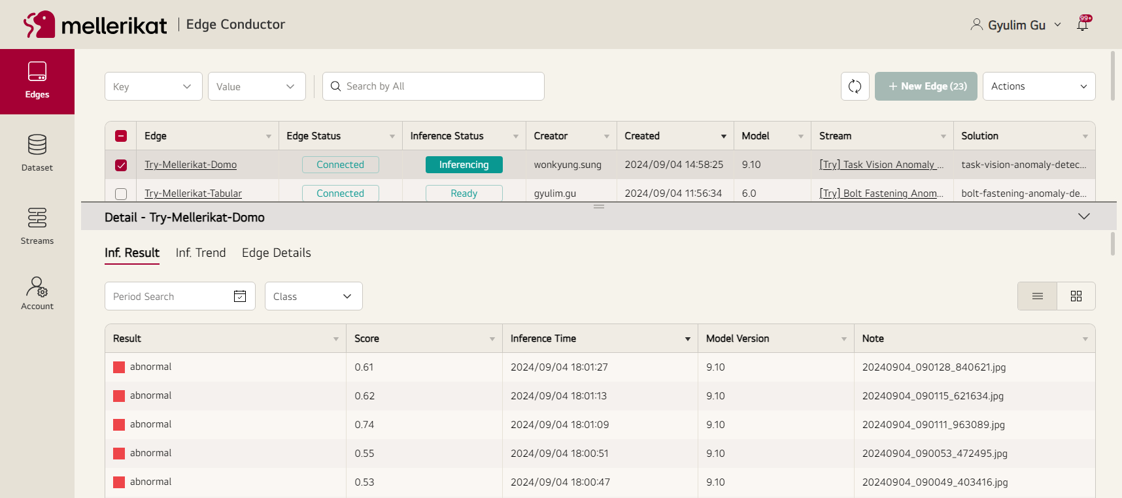

Deploying Inference Model for Data Collection

Deploy the Vision Anomaly Detection Task model to the Edge App through the Streams page of Edge Conductor to perform inference.

For more details, refer to the Deploy Manual.

Once the deployment is completed, the Edge App switches to Disconnected status, then returns to Connected status after it has downloaded the inference Docker and finished starting it.

The Inference Docker for Vision Anomaly Detection requires about 6GB of free space, and if the PC is low on memory, downloading and starting it can take up to 30 minutes.
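The free-space requirement can be checked before deploying. The sketch below uses `shutil.disk_usage` from the Python standard library. Which drive Docker stores its images on depends on the installation, so the path passed in is an assumption, as is reading "6GB" as 6 GiB.

```python
import shutil

# About 6GB per this guide; interpreted here as 6 GiB (an assumption).
REQUIRED_BYTES = 6 * 1024**3


def has_free_space(path, required_bytes=REQUIRED_BYTES):
    """True if the drive containing `path` has at least required_bytes free."""
    return shutil.disk_usage(path).free >= required_bytes


if __name__ == "__main__":
    # "." checks the drive of the current directory; point this at the
    # drive where Docker stores its images on your PC.
    free_gib = shutil.disk_usage(".").free / 1024**3
    print(f"free: {free_gib:.1f} GiB, enough: {has_free_space('.')}")
```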

Data Collection

Capture images of the selected object, collecting about 200 normal images and 5 abnormal images.

During collection, the camera must remain fixed, and the object should not move significantly within the view.

Since Vision Anomaly Detection learns the relationships in normal data to identify abnormalities, the more varied the normal data you collect (by slightly moving or changing the object), the better the model performs.

- Normal: The coil is visible.

- Abnormal: The connector is flipped, hiding the coil.

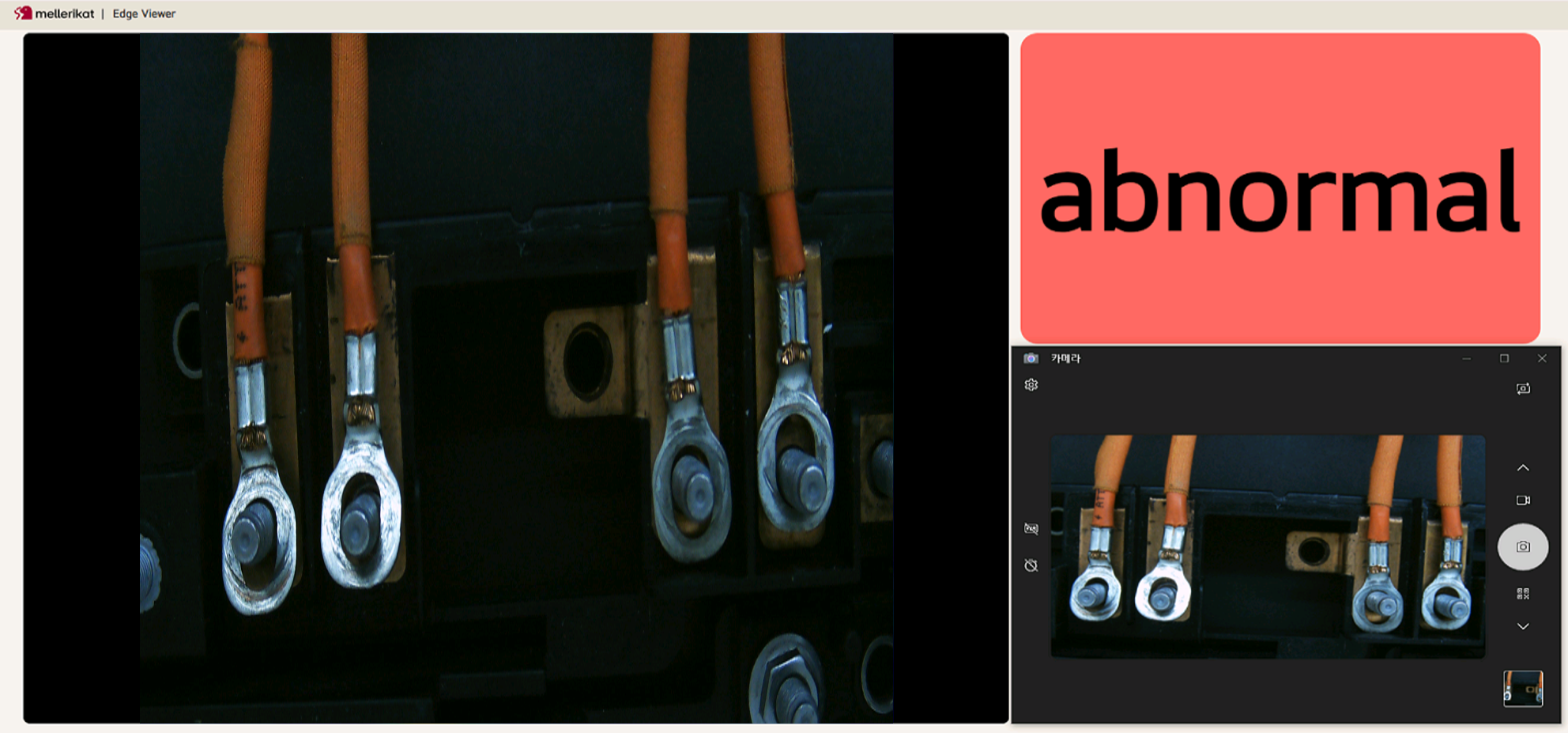
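While collecting, a simple tally helps track progress toward the targets of about 200 normal and 5 abnormal images. The snippet below is a minimal sketch; the function name and the way counts are recorded are assumptions, and the counts themselves would come from your own notes during the session.

```python
# Targets taken from this guide: about 200 normal and 5 abnormal images.
TARGETS = {"normal": 200, "abnormal": 5}


def collection_shortfall(counts, targets=TARGETS):
    """Return how many more images of each kind are still needed."""
    return {
        kind: max(0, needed - counts.get(kind, 0))
        for kind, needed in targets.items()
    }


# Example: 150 normal and 5 abnormal images captured so far.
print(collection_shortfall({"normal": 150, "abnormal": 5}))
```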

Run the Edge App, then start the Edge Viewer and the default Camera application on Windows to capture photos. If the Edge App operates correctly, the captured images will be displayed in the Edge Viewer.

Because the model has not yet been trained on this data, it will judge every image as Abnormal.

Note: If the Edge App doesn't operate, double-check the DataInputPath set during installation.

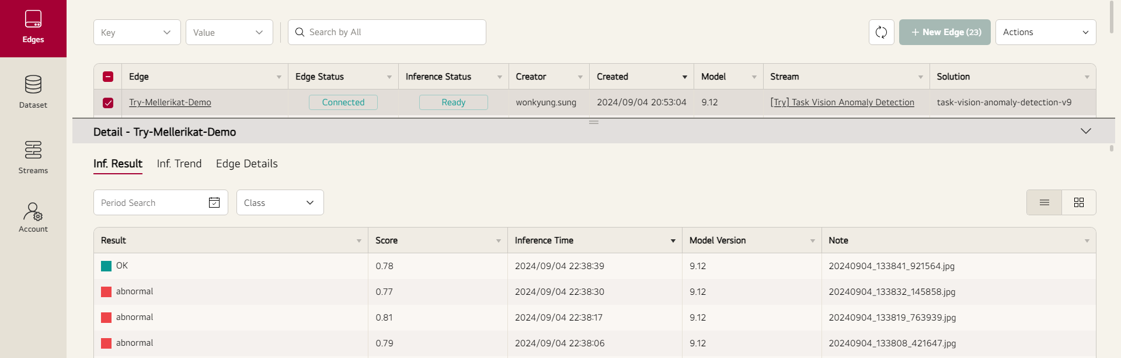

Once inference is performed by the Edge App, the data and results are uploaded to Edge Conductor and displayed in a table format.

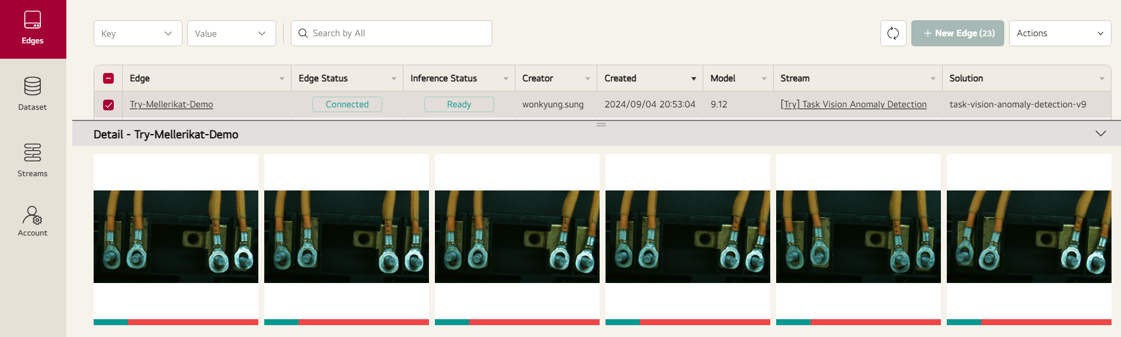

Click the album view to browse the inference results image by image.

Dataset Creation and Labeling

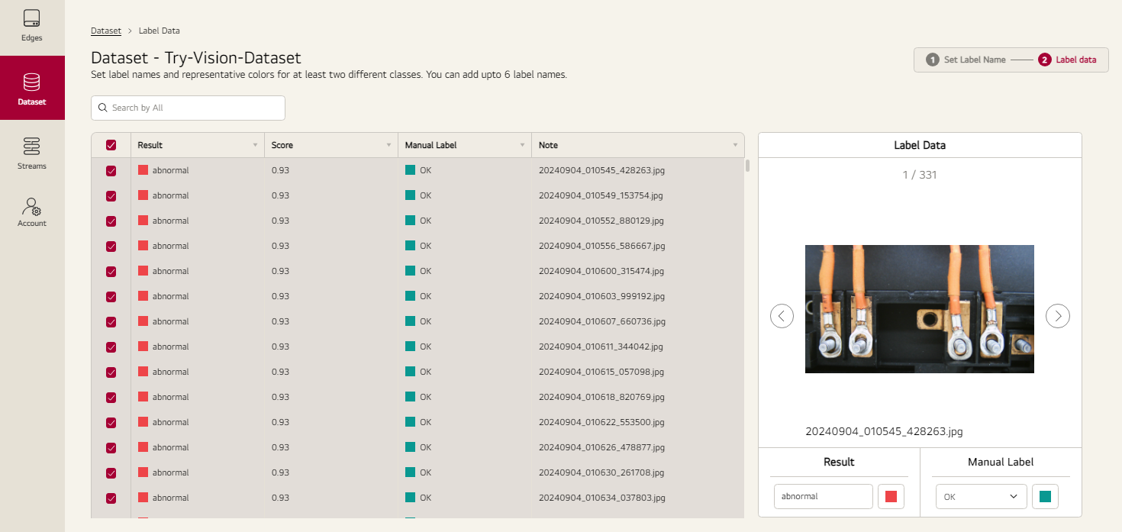

Move to the Dataset menu to create and label a Dataset based on the data inferred by the Edge App.

Because the untrained model judges all data as Abnormal, first select everything and change the label to OK, then find the abnormal images and change their labels.

Refer to the Dataset Manual for more details.
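The labeling order described above (bulk-label everything, then correct the few exceptions) can be sketched as follows. This is only an illustration of the workflow, not Edge Conductor code; the image IDs are made up, and "NG" as the name of the abnormal label is an assumption.

```python
def relabel(image_ids, abnormal_ids):
    """Label every image "OK", then mark the listed abnormal images "NG".

    "NG" is a hypothetical label name used for illustration.
    """
    unknown = set(abnormal_ids) - set(image_ids)
    if unknown:
        raise KeyError(f"not in dataset: {sorted(unknown)}")
    labels = {image_id: "OK" for image_id in image_ids}
    for image_id in abnormal_ids:
        labels[image_id] = "NG"
    return labels


# Hypothetical IDs: four collected images, one of them abnormal.
labels = relabel(["img_001", "img_002", "img_003", "img_004"], ["img_003"])
print(labels)
```

With about 200 normal and 5 abnormal images, this order means changing a handful of labels by hand instead of roughly 200.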

Model Training and Deployment

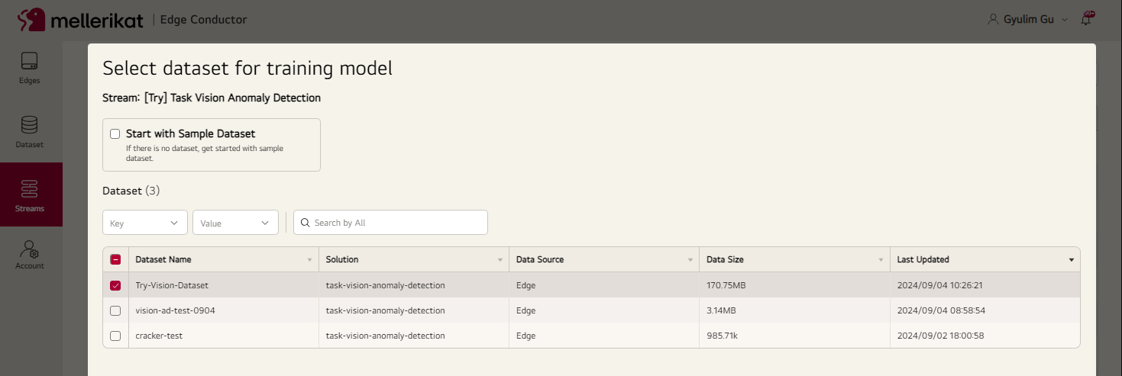

Click the Train button for the Vision Anomaly Detection Task on the Streams menu, select the created Dataset, and request training.

After the training request, the Dataset's data is sent to the cloud S3, and AI Conductor retrieves it to run the training pipeline, generating a model.

Once training is complete and the model is created, AI Conductor sends the model to Edge Conductor for deployment to the Edge App.

Click the Deploy button again to deploy the model to the Edge App, then verify that it functions correctly.

Refer to the Model Train Manual for more details.

Inference

Under the same conditions as data collection, change the state of the inspection object to verify that the trained model works correctly. In actual operational environments, you can automate photo capturing by integrating with existing systems using PLC communication or Object Detection techniques.
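As a rough sketch of trigger-based capture, the loop below takes one photo each time a trigger signal changes from off to on, for example when a PLC reports that a part has arrived. Both callbacks are hypothetical: `read_trigger` stands in for whatever PLC or detection interface your system provides, and `capture` stands in for the camera call that saves an image into DataInputPath.

```python
def capture_on_rising_edge(read_trigger, capture, polls):
    """Poll read_trigger() `polls` times and call capture() on each
    off-to-on transition. Returns the number of captures made.

    read_trigger and capture are hypothetical callbacks supplied by
    the integrating system.
    """
    previous = False
    captures = 0
    for _ in range(polls):
        current = bool(read_trigger())
        if current and not previous:
            capture()
            captures += 1
        previous = current
    return captures


# Simulated signal: two parts pass the sensor.
signal = iter([False, True, True, False, True, False])
shots = []
count = capture_on_rising_edge(lambda: next(signal), lambda: shots.append("photo"), 6)
print(count)  # prints 2
```

Triggering on the transition rather than on the signal level avoids taking several photos of the same part while the signal stays on.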

XAI (Explainable AI)

The parts identified as abnormal by the AI model are highlighted brighter in the Heatmap. This allows users to identify where the anomalies are located. If incorrect judgments are frequent, refer to the Heatmap to collect more data and improve the model through training.

If the camera has moved, the Heatmap will be bright overall, and if the lighting has changed, the parts where the light reflects will appear brighter. Use these patterns to check whether the environment has changed, and rule out external influences before trusting the result.

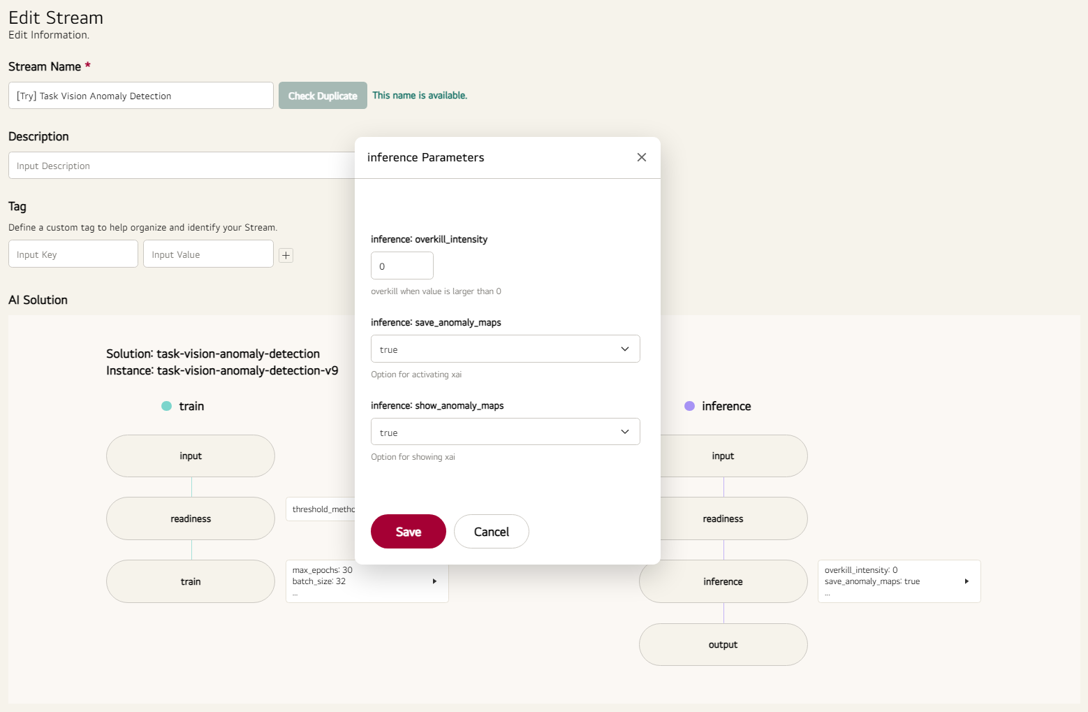
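The distinction between a localized bright region and a Heatmap that is bright overall can be approximated by measuring how much of the Heatmap exceeds a brightness threshold. The sketch below assumes the Heatmap is available as a grid of values normalized to the range 0 to 1, which is not something this guide specifies; the function names and both thresholds are illustrative assumptions.

```python
def bright_fraction(heatmap, threshold=0.5):
    """Fraction of heatmap cells at or above threshold (values assumed in 0..1)."""
    values = [v for row in heatmap for v in row]
    if not values:
        raise ValueError("heatmap must not be empty")
    return sum(1 for v in values if v >= threshold) / len(values)


def describe_heatmap(heatmap, threshold=0.5, global_fraction=0.6):
    """Classify a heatmap as "none", "local", or "global".

    "global" suggests an environmental change such as a moved camera;
    "local" suggests an anomaly location (or a reflection).
    Both thresholds are hypothetical and need tuning.
    """
    fraction = bright_fraction(heatmap, threshold)
    if fraction == 0:
        return "none"
    if fraction >= global_fraction:
        return "global"
    return "local"


spot = [[0.1, 0.1, 0.1], [0.1, 0.9, 0.1], [0.1, 0.1, 0.1]]
washed_out = [[0.8, 0.7, 0.9], [0.8, 0.8, 0.7], [0.9, 0.8, 0.8]]
print(describe_heatmap(spot))
print(describe_heatmap(washed_out))
```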

Parameter Adjustment

You can modify the parameters in Edit Stream. If training has not converged, increase the epoch parameter or adjust the learning rate. To display XAI images, modify the inference parameters. After modifying the inference parameters, redeploy the model so the changes are applied to the Edge App.

Retraining

A model trained with a small amount of data may have limited performance. In this case, collect additional data, label the incorrect judgments, and improve the AI model through retraining.
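When deciding what additional data to collect, it helps to separate the two kinds of incorrect judgment: normal images flagged as abnormal, and abnormal images that were missed. The following sketch assumes each result is available as a tuple of image ID, predicted label, and corrected label; that record format and the label names "OK" and "NG" are assumptions for illustration.

```python
def split_misjudgments(records):
    """Split incorrect judgments into false alarms and misses.

    records: iterable of (image_id, predicted, actual) tuples using the
    hypothetical labels "OK" and "NG".
    """
    records = list(records)  # allow generators to be scanned twice
    false_alarms = [i for i, p, a in records if p == "NG" and a == "OK"]
    misses = [i for i, p, a in records if p == "OK" and a == "NG"]
    return false_alarms, misses


results = [
    ("img_201", "OK", "OK"),
    ("img_202", "NG", "OK"),  # normal image flagged as abnormal
    ("img_203", "OK", "NG"),  # abnormal image that was missed
    ("img_204", "NG", "NG"),
]
false_alarms, misses = split_misjudgments(results)
print(false_alarms, misses)
```

Many false alarms point toward collecting more varied normal images, while misses point toward adding abnormal examples of the kind that slipped through.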

Because collecting additional data, creating Datasets in Edge Conductor, and training and deploying AI models are all straightforward, AI Operators in the field can manage and operate the AI models themselves.

For more details on the role of the AI Operator, refer to the AI Operator Manual.