Mellerikat for Splunk User Manual

The workflow proceeds in the following order:

- Initial Setup

- Register AI Solution

- Select Training Data

- Create Dataset, Train, and Deploy Model

- Perform Inference

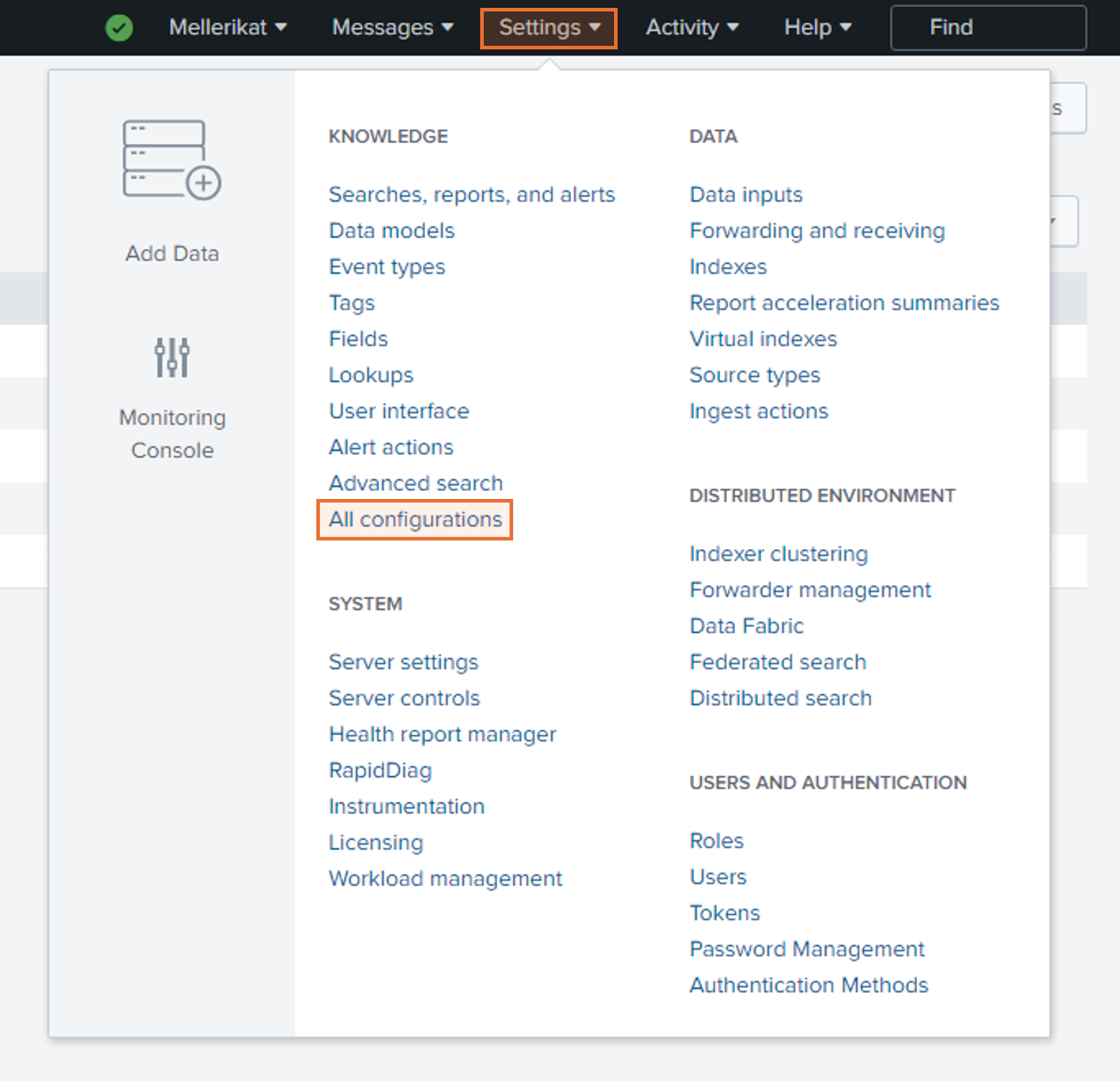

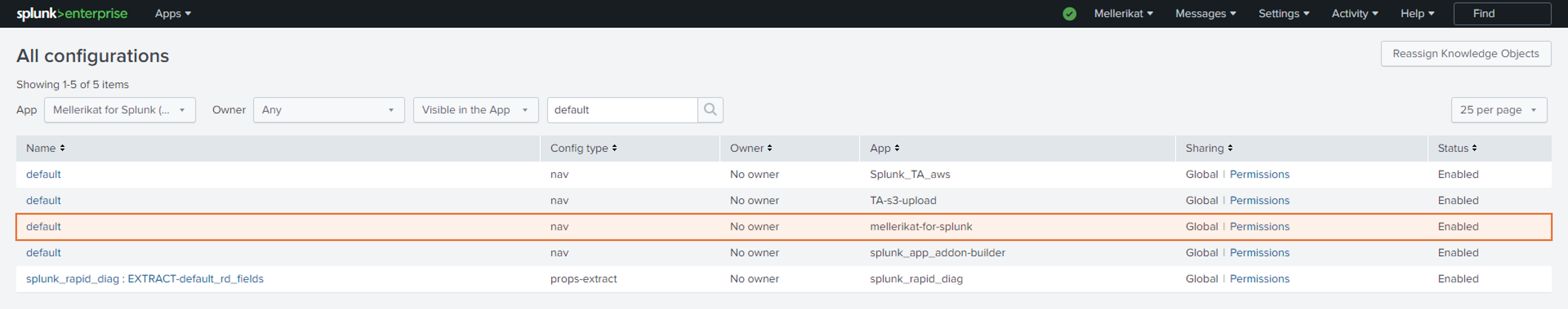

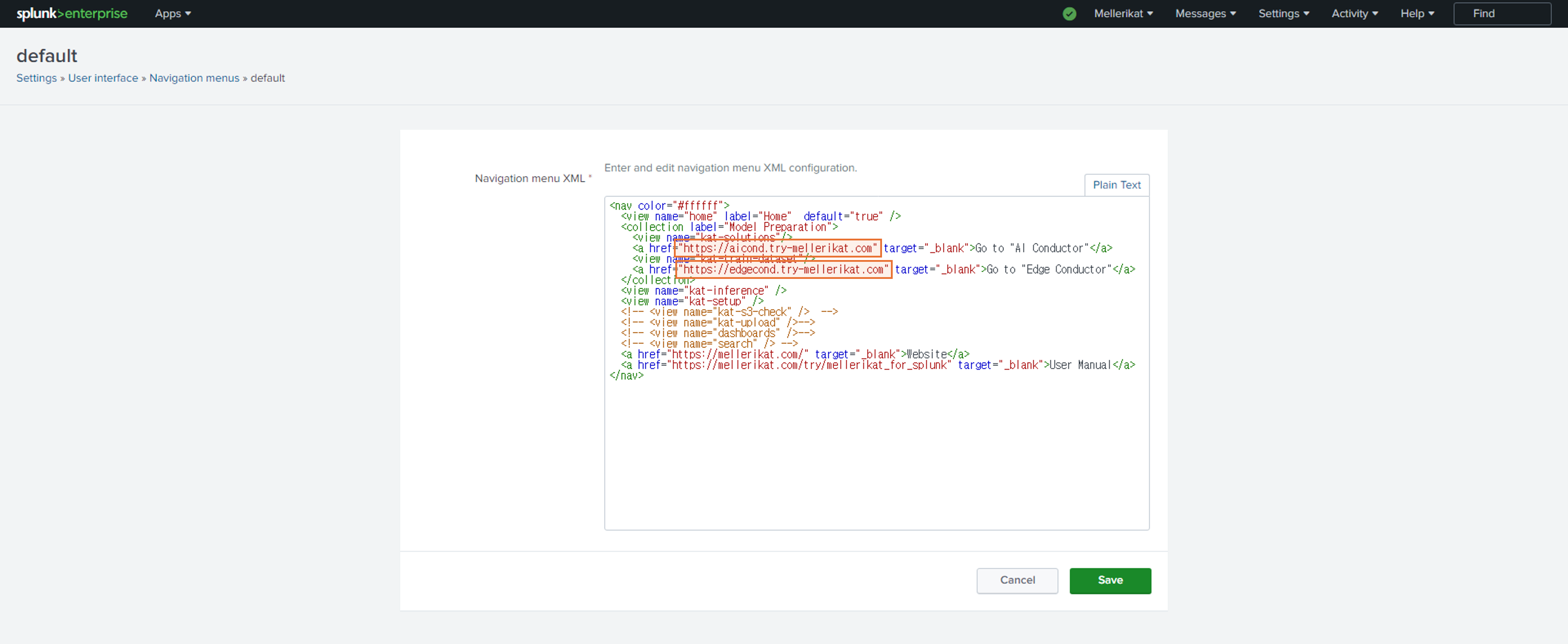

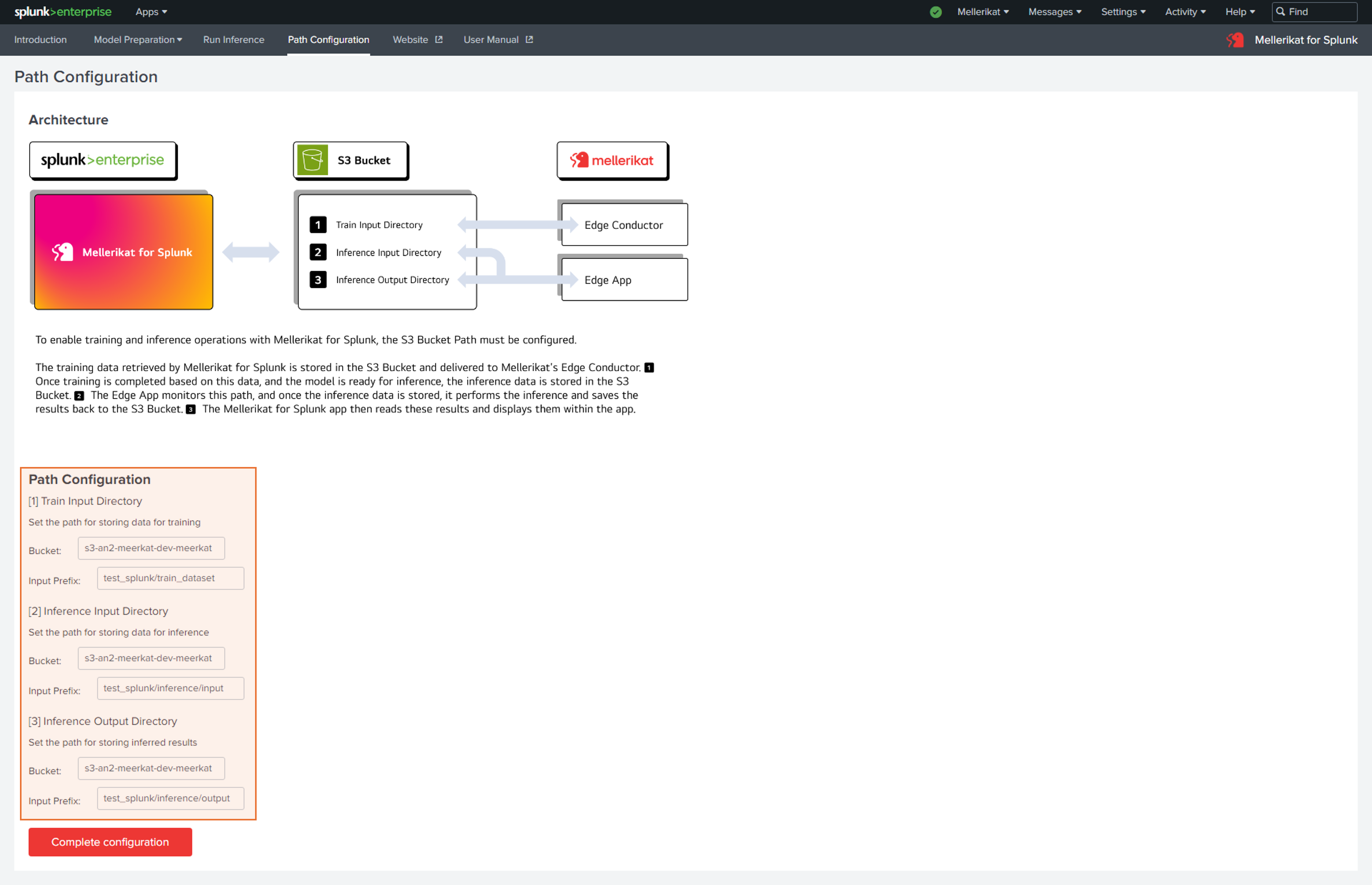

1. Initial Setup

- In Splunk Enterprise, go to 'All configurations' and enter the URLs of AI Conductor and Edge Conductor.

- Select the 'Path Configuration' tab and set the path where data will be saved to and loaded from.

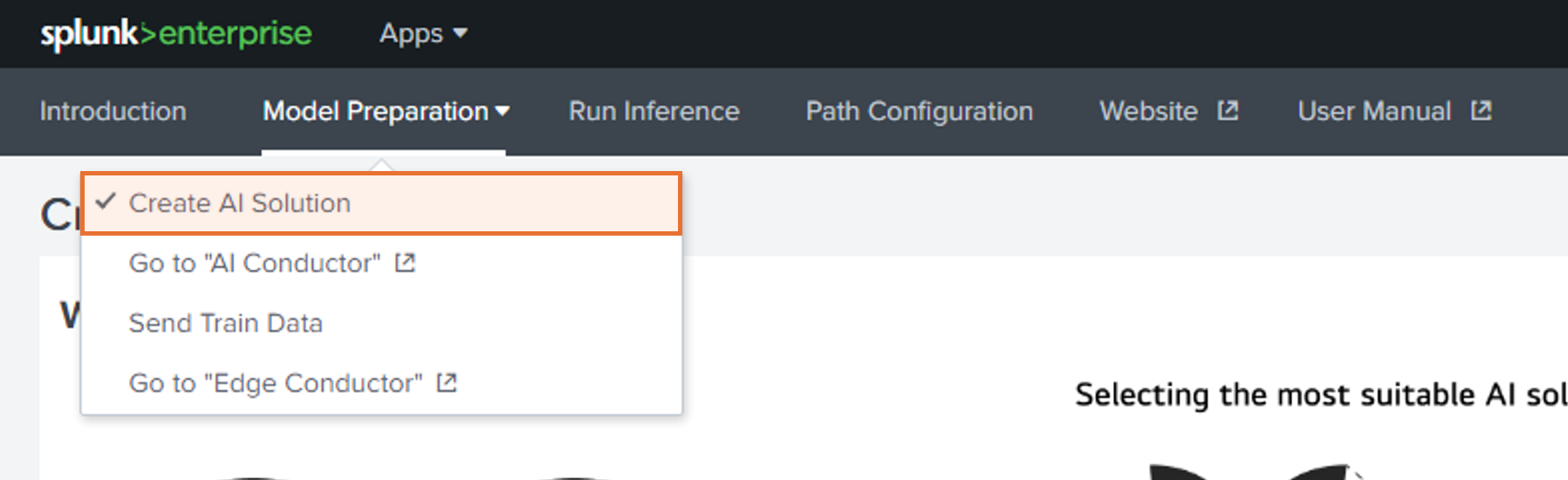

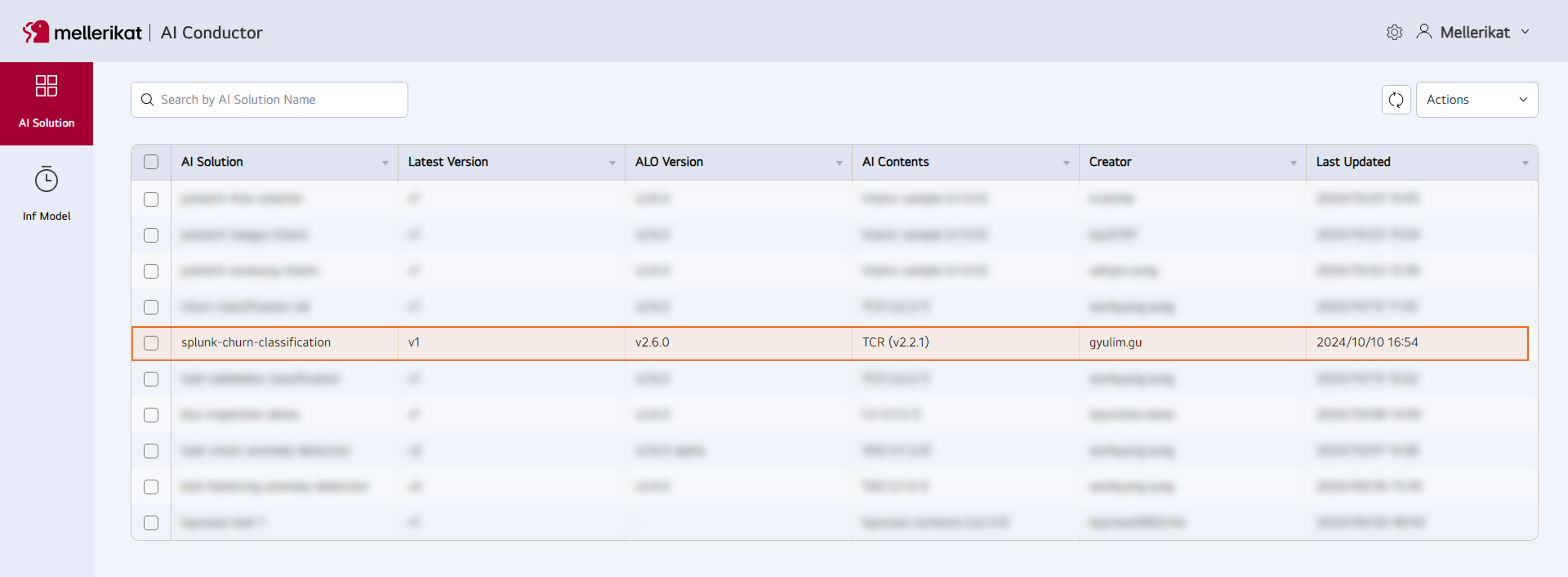

2. Register AI Solution

- Go to the 'Create AI Solution' tab and save the splunk.yaml file as described in the guide.

- Confirm that the AI Solution is registered in AI Conductor.

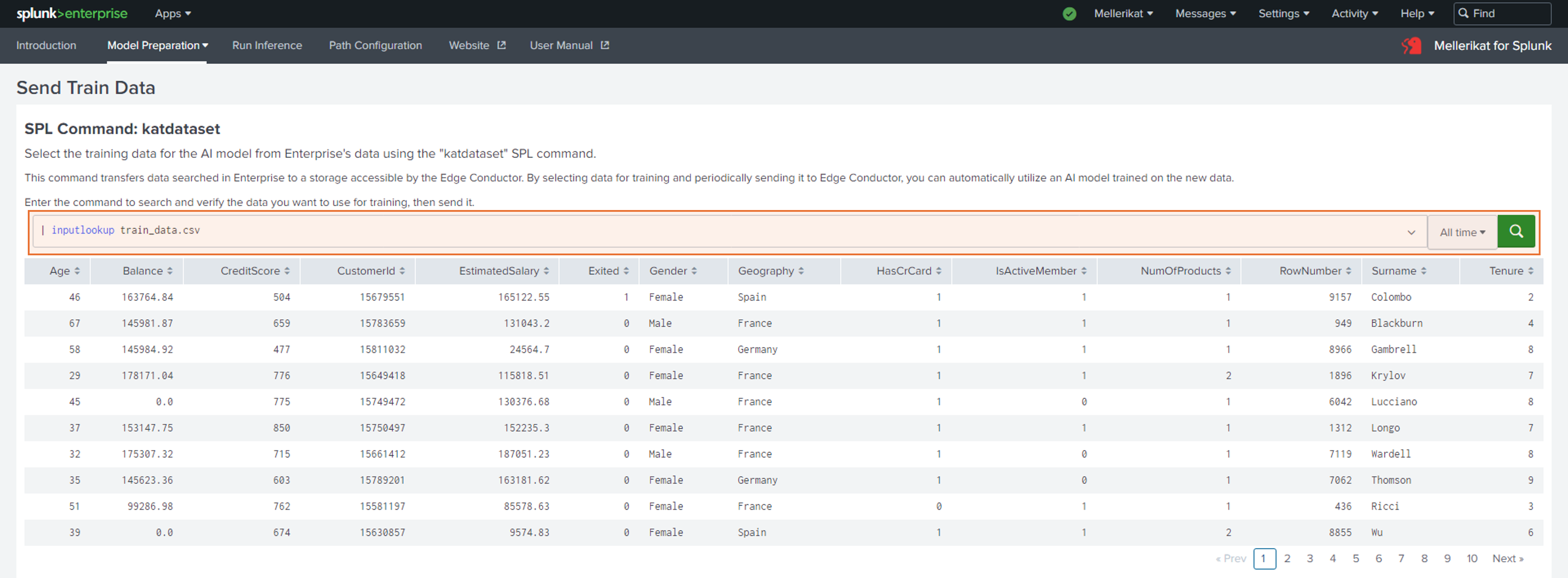

3. Select Training Data

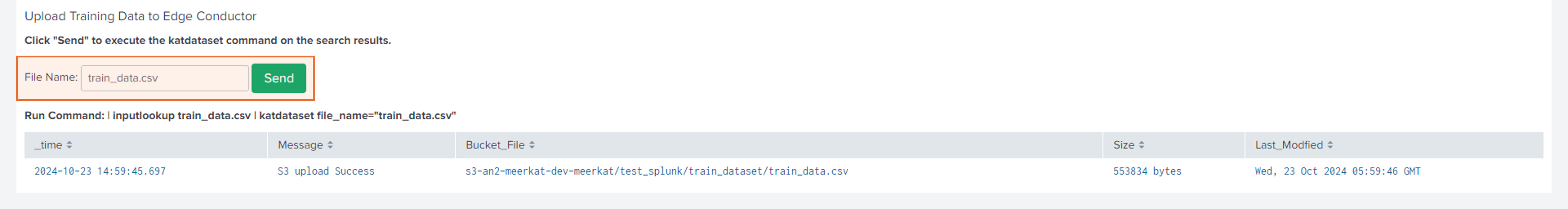

- Review the data to be used for model training.

- Enter a file name for the data and click the Send button to transfer it to the configured path.

4. Create Dataset, Train, and Deploy Model

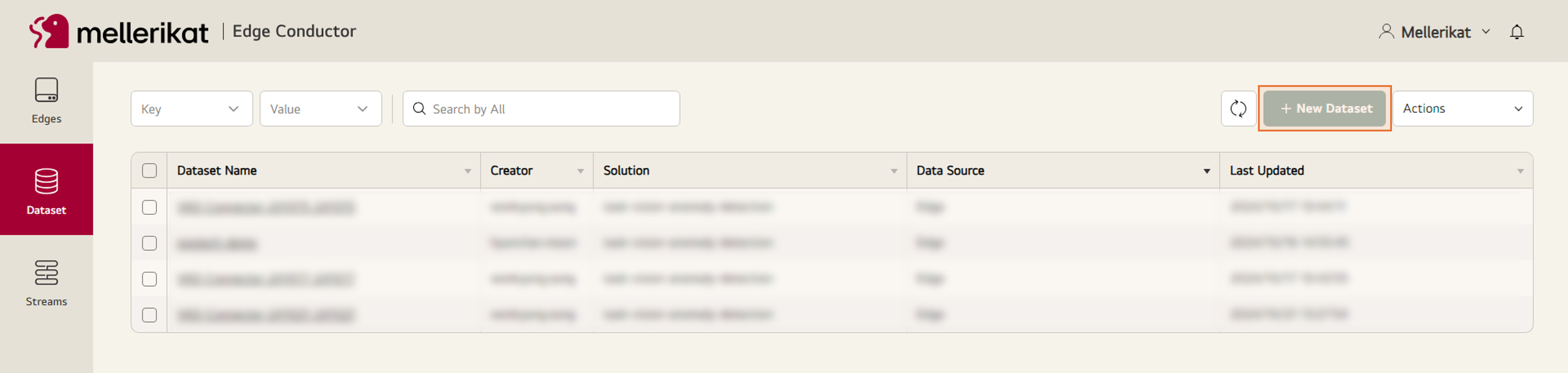

- Open Edge Conductor, go to the Dataset tab, and click the New Dataset button.

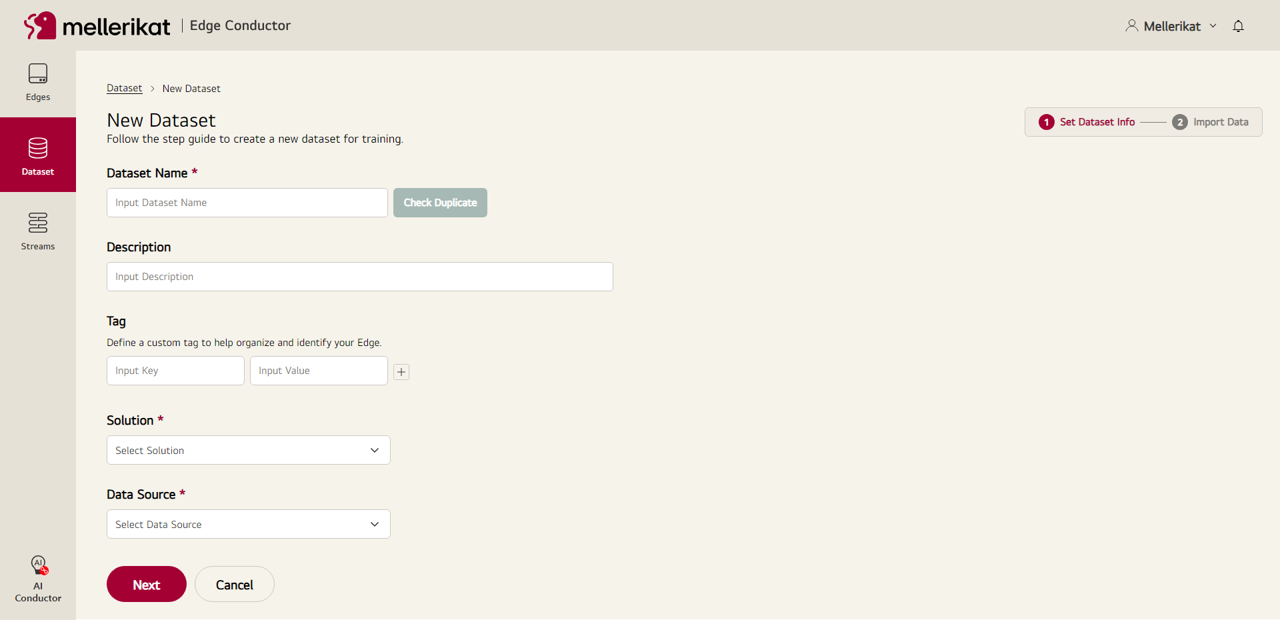

- Enter the required information for the Dataset and click the Next button.

  - Dataset Name: name of the Dataset to create (required)

  - Description: description of the Dataset to create

  - Tag: tag information to assign to the Dataset

  - Solution: name of the AI Solution to link with the Dataset (required)

  - Data Source: type of the path from which the Dataset will be obtained (required)
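To make the split between required and optional fields concrete, the minimal sketch below models the New Dataset form as a Python dataclass and reports which required fields are still empty. `DatasetForm` and `missing_required` are hypothetical names used only for illustration. They are not an Edge Conductor API.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class DatasetForm:
    """Hypothetical model of the New Dataset form (not an Edge Conductor API)."""
    name: str = ""                                  # Dataset Name (required)
    solution: str = ""                              # linked AI Solution (required)
    data_source: str = ""                           # source path type, e.g. "S3" (required)
    description: Optional[str] = None               # optional
    tags: List[str] = field(default_factory=list)   # optional


def missing_required(form: DatasetForm) -> List[str]:
    """Return the names of required fields that are still empty."""
    required = {
        "name": form.name,
        "solution": form.solution,
        "data_source": form.data_source,
    }
    return [key for key, value in required.items() if not value.strip()]


# The Solution field was left empty, so the form cannot proceed yet.
form = DatasetForm(name="splunk-train", data_source="S3")
print(missing_required(form))  # ['solution']
```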

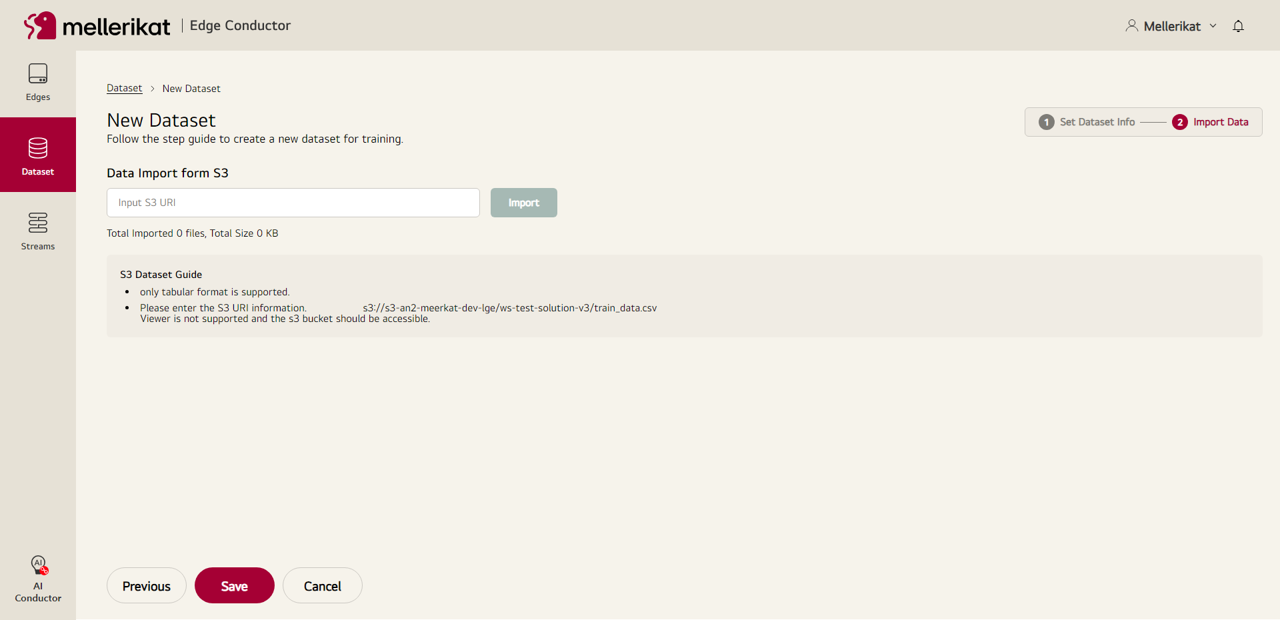

- Enter the S3 path set in the 'Path Configuration' tab, click the Import button to import the data, and then click the Save button to save the Dataset.

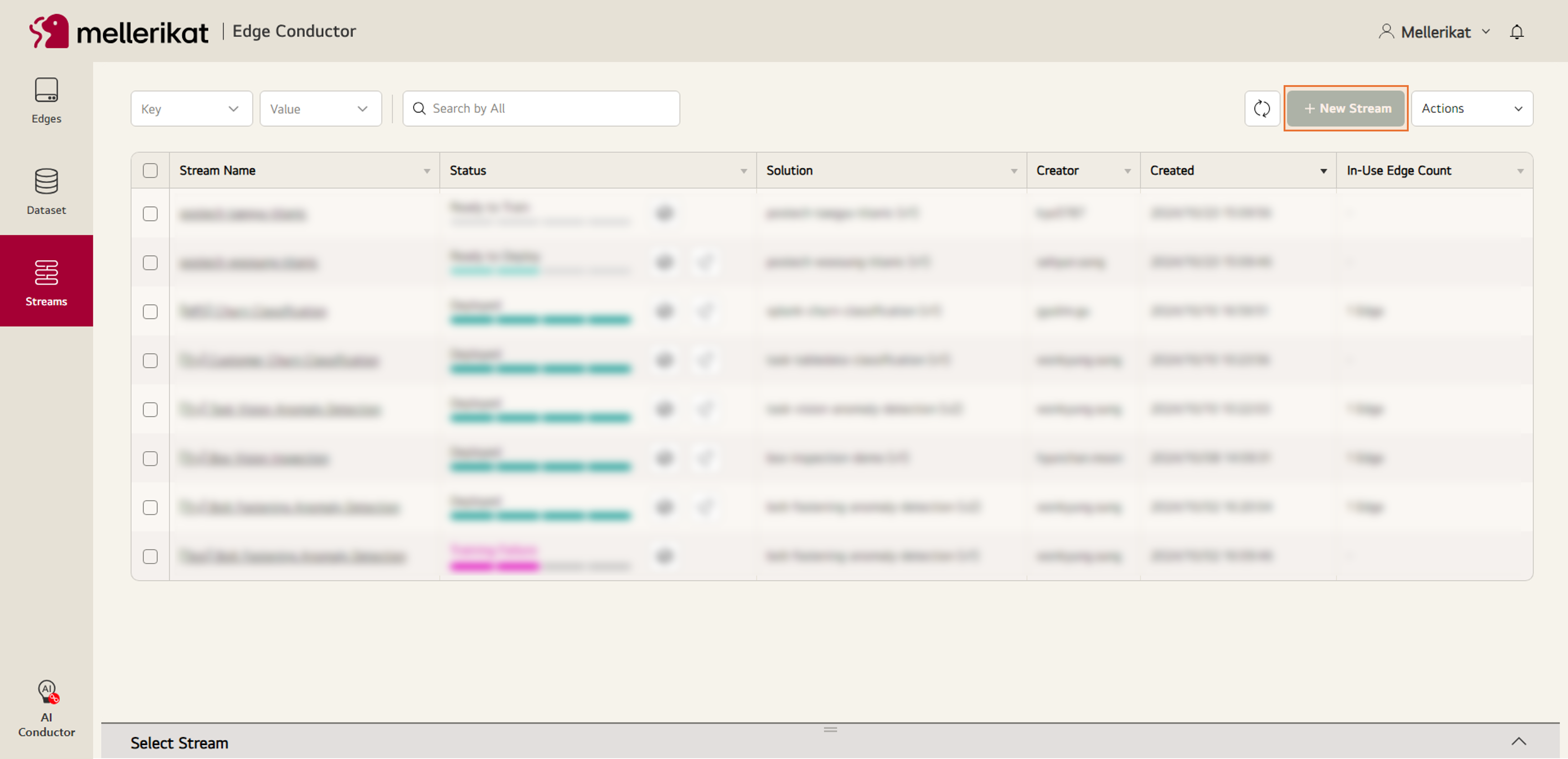

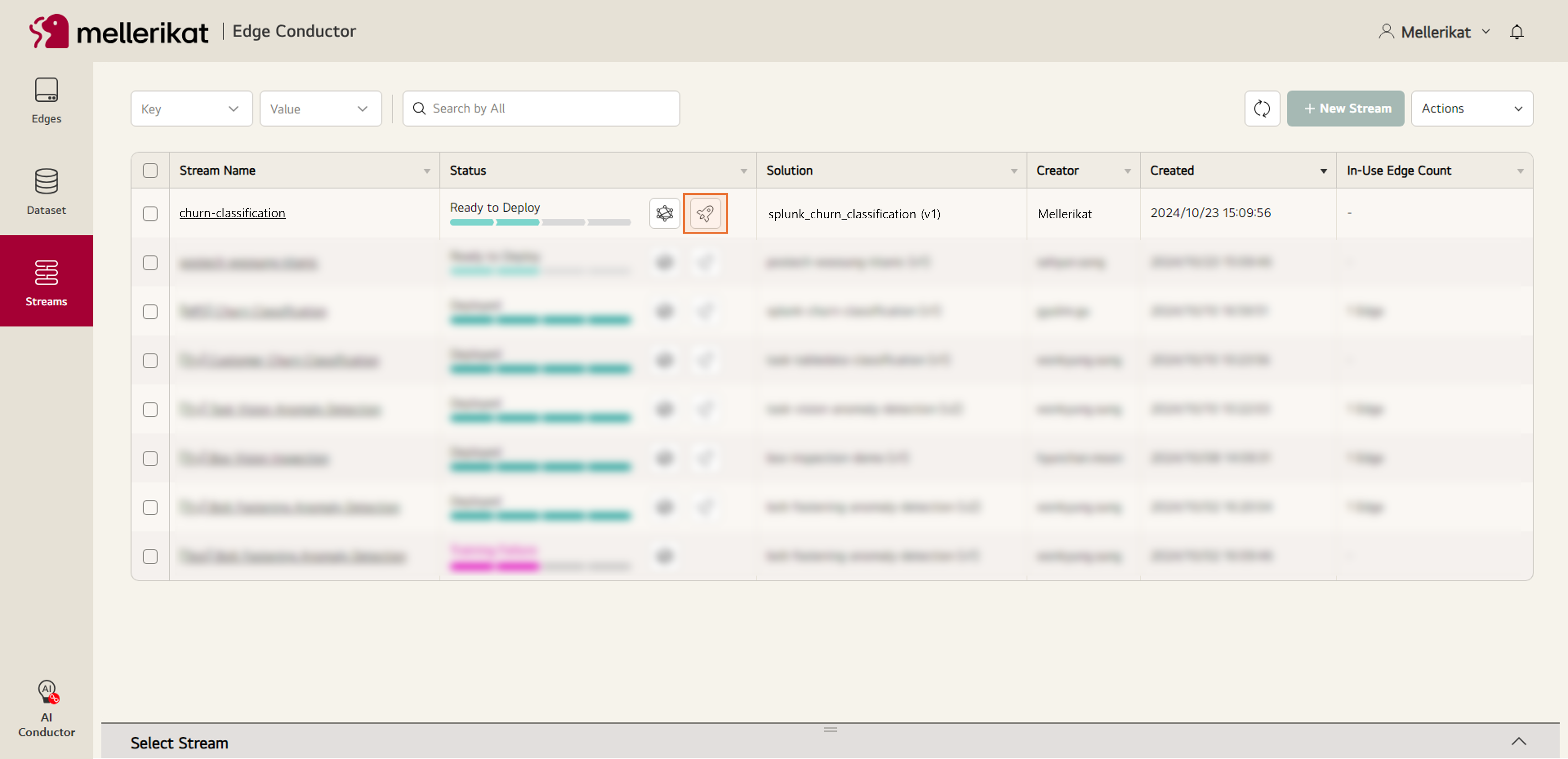

- Go to the Streams tab and click the New Stream button.

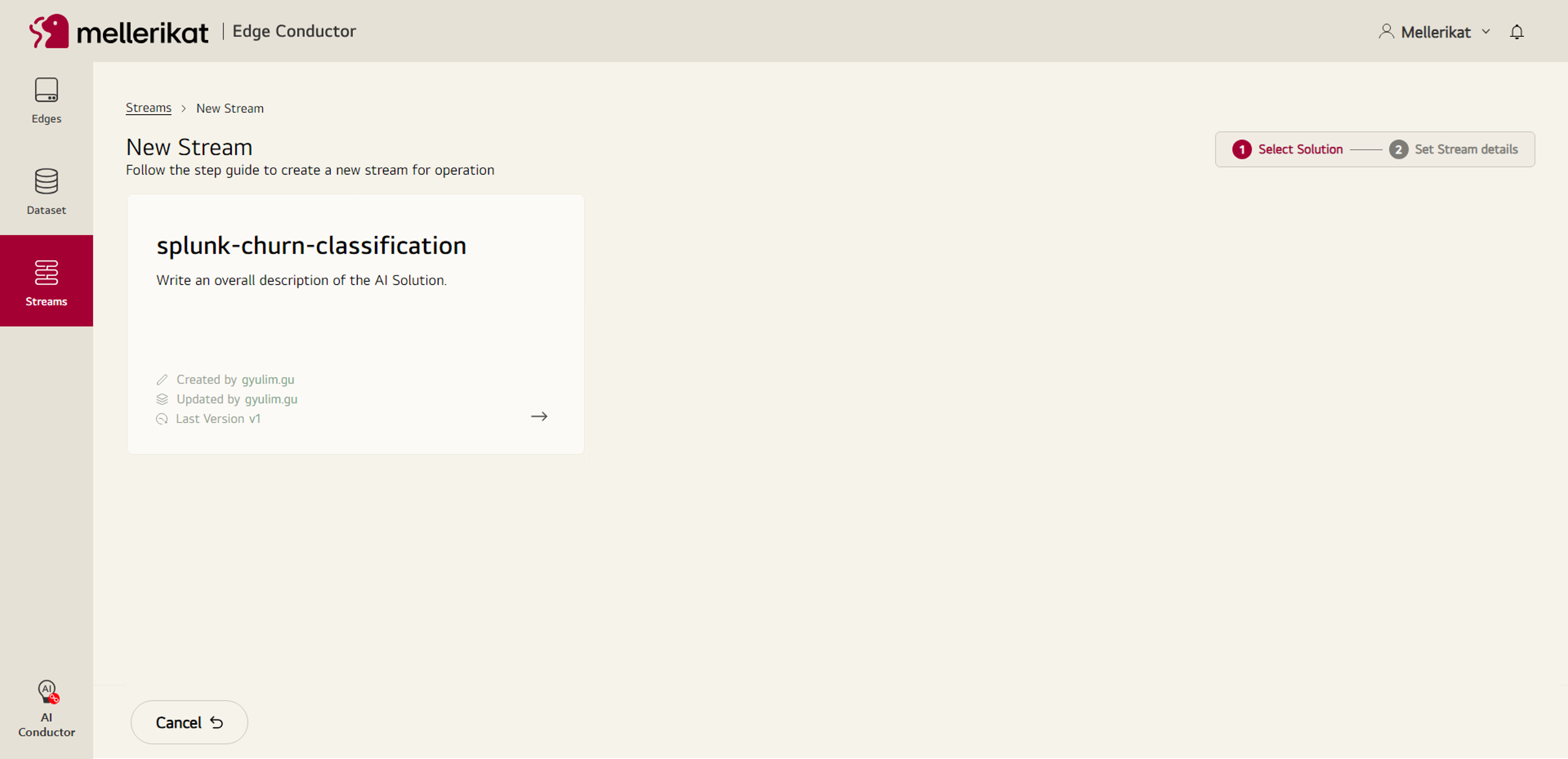

- Select the AI Solution you registered.

- Enter the training settings and complete the Stream creation.

  - Stream Name: name of the Stream to create (required)

  - Description: description of the Stream to create

  - Tag: tag information to assign to the Stream

  - AI Solution: parameters for training

  - Inference Warning Setting: lower performance limit that triggers model performance warnings
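The Stream settings listed above can also be modeled as a small data structure. This minimal sketch assumes that the Inference Warning Setting acts as a threshold, so a warning is raised when measured model performance falls below the configured lower limit. `StreamSettings`, `needs_warning`, and the `epochs` parameter are hypothetical. The actual training parameters depend on the selected AI Solution.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Optional


@dataclass
class StreamSettings:
    """Hypothetical model of the New Stream settings (not an Edge Conductor API)."""
    stream_name: str                                             # required
    ai_solution: str                                             # AI Solution selected for the Stream
    train_params: Dict[str, Any] = field(default_factory=dict)   # training parameters (hypothetical keys)
    warning_lower_limit: float = 0.0                             # Inference Warning Setting
    description: Optional[str] = None
    tags: List[str] = field(default_factory=list)


def needs_warning(settings: StreamSettings, performance: float) -> bool:
    """Assumed semantics: warn when performance drops below the lower limit."""
    return performance < settings.warning_lower_limit


stream = StreamSettings(
    stream_name="splunk-stream",
    ai_solution="my-solution",
    train_params={"epochs": 10},  # hypothetical parameter name
    warning_lower_limit=0.8,
)
print(needs_warning(stream, 0.75))  # True
print(needs_warning(stream, 0.92))  # False
```

With this assumed rule, a performance value exactly equal to the lower limit does not trigger a warning.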

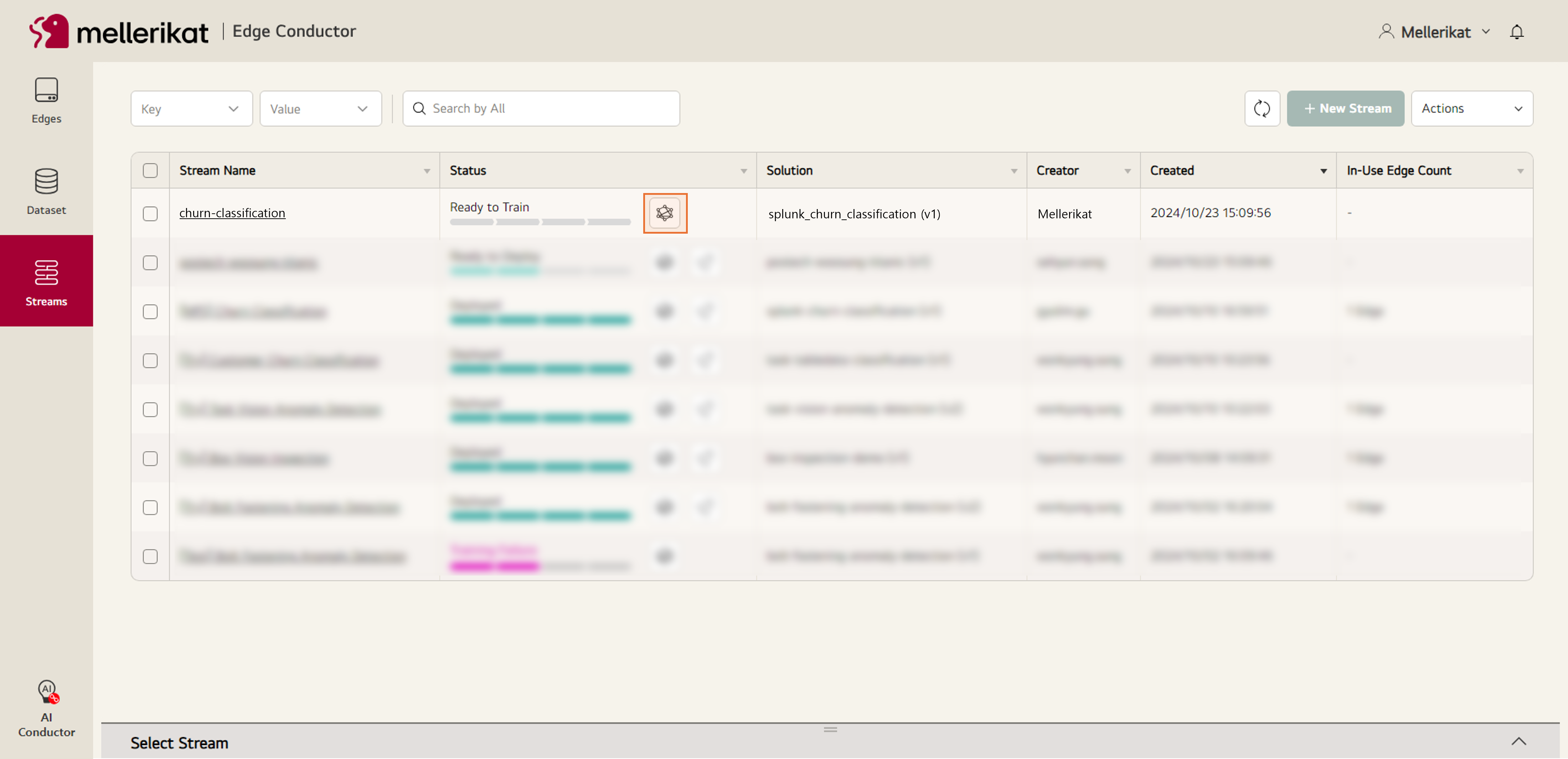

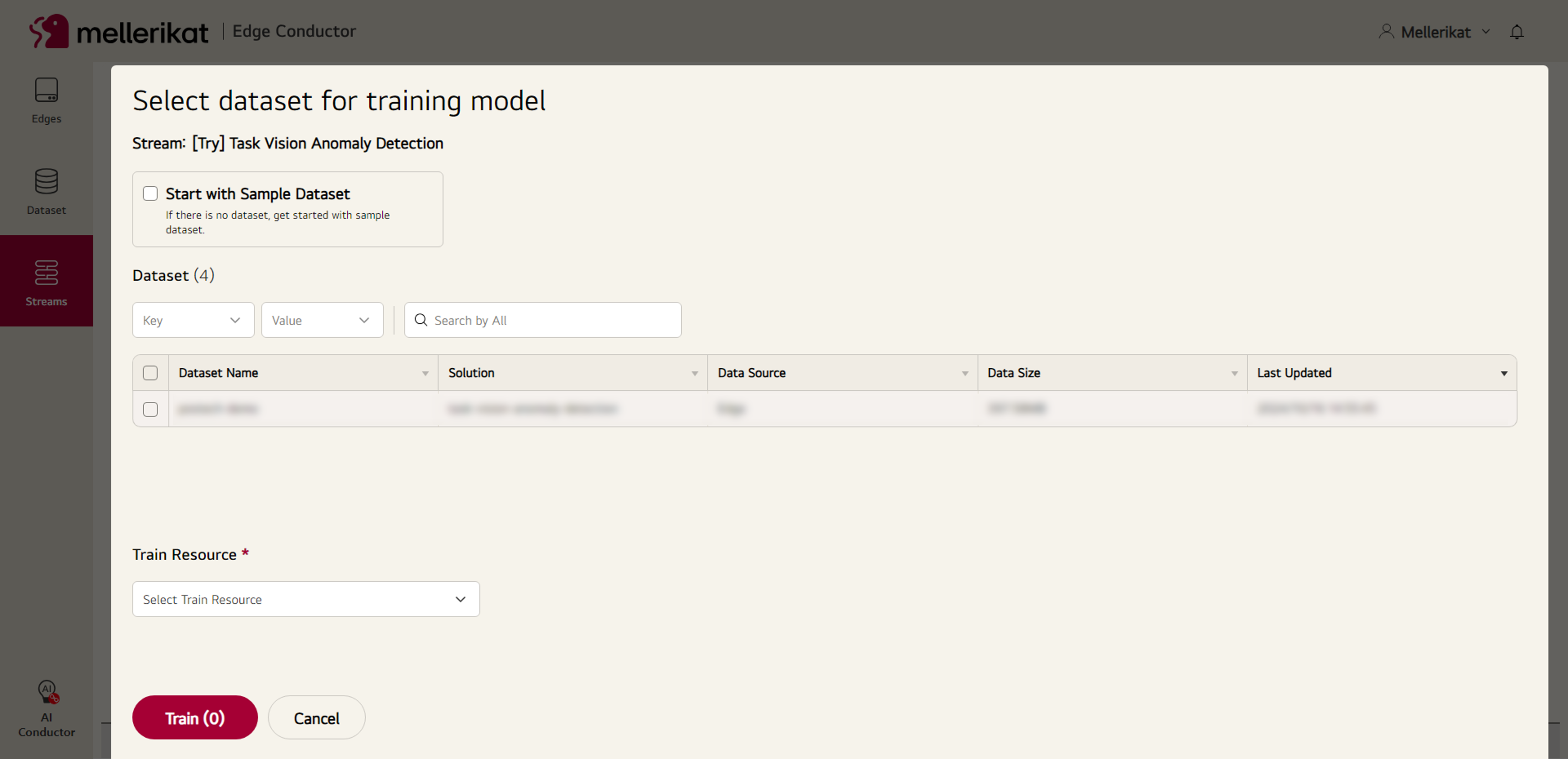

- Click the Train button on the created Stream, select the Dataset to use, and start training.

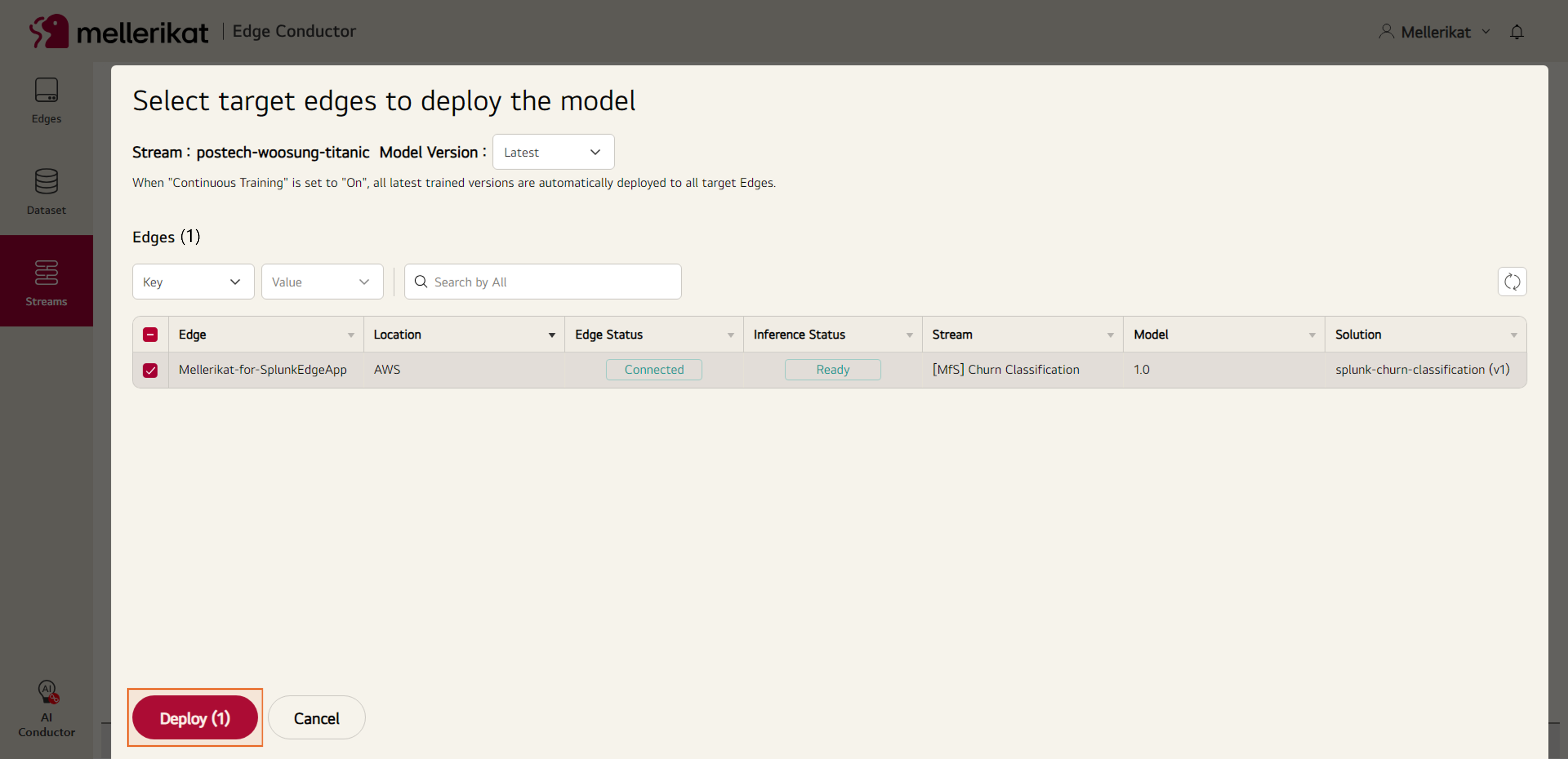

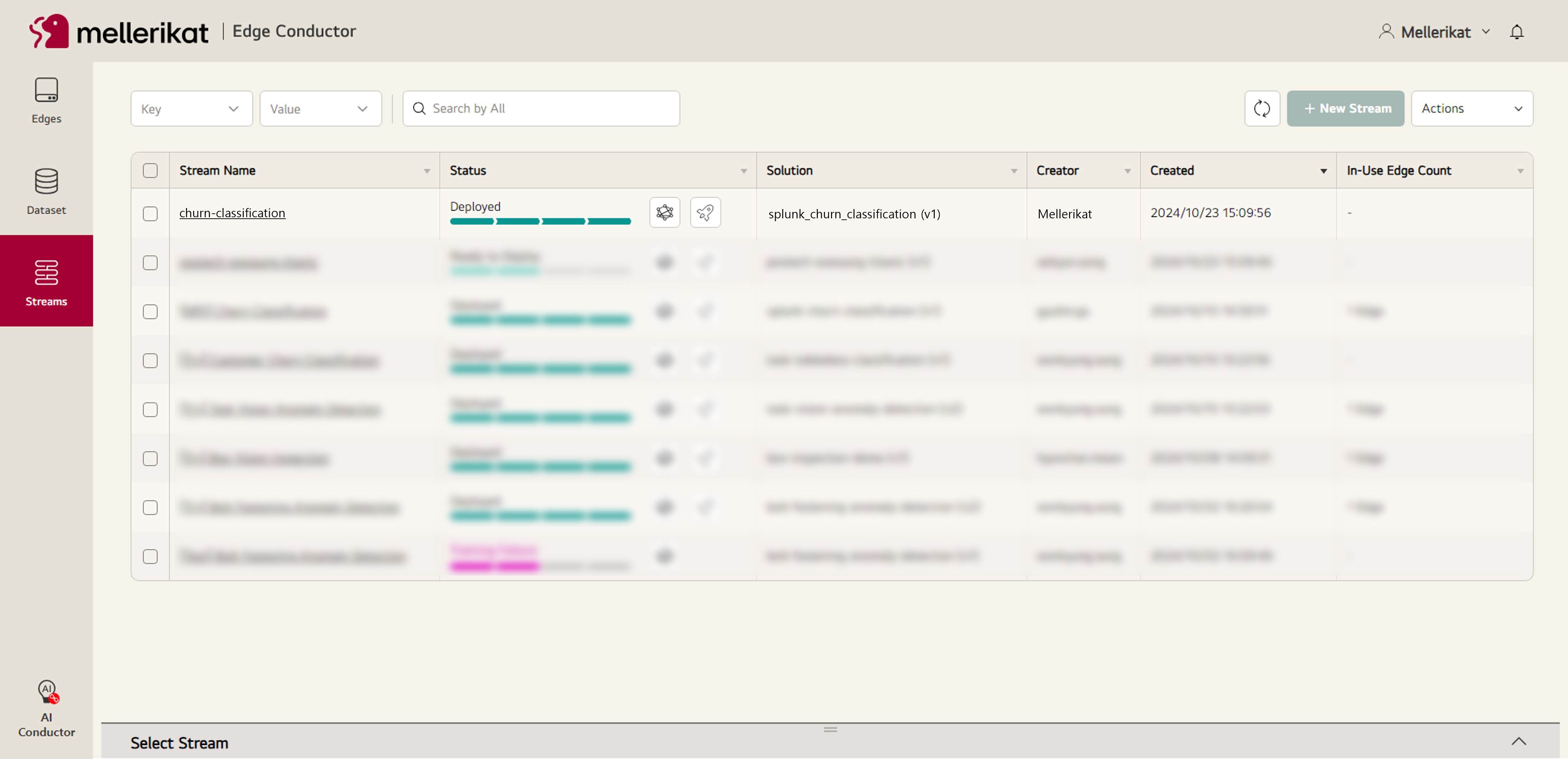

- Once training is complete, deploy the model to the installed Edge App.

- Verify that the model is successfully deployed and the Stream status is "Deployed."

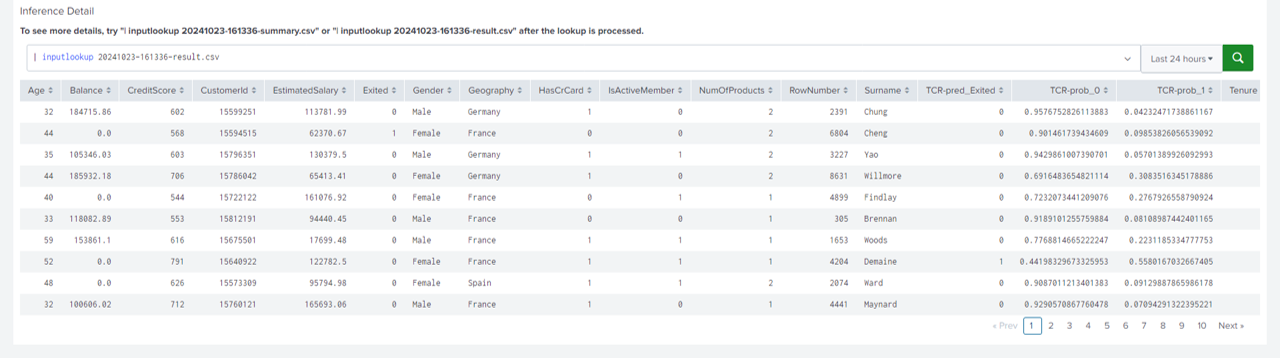

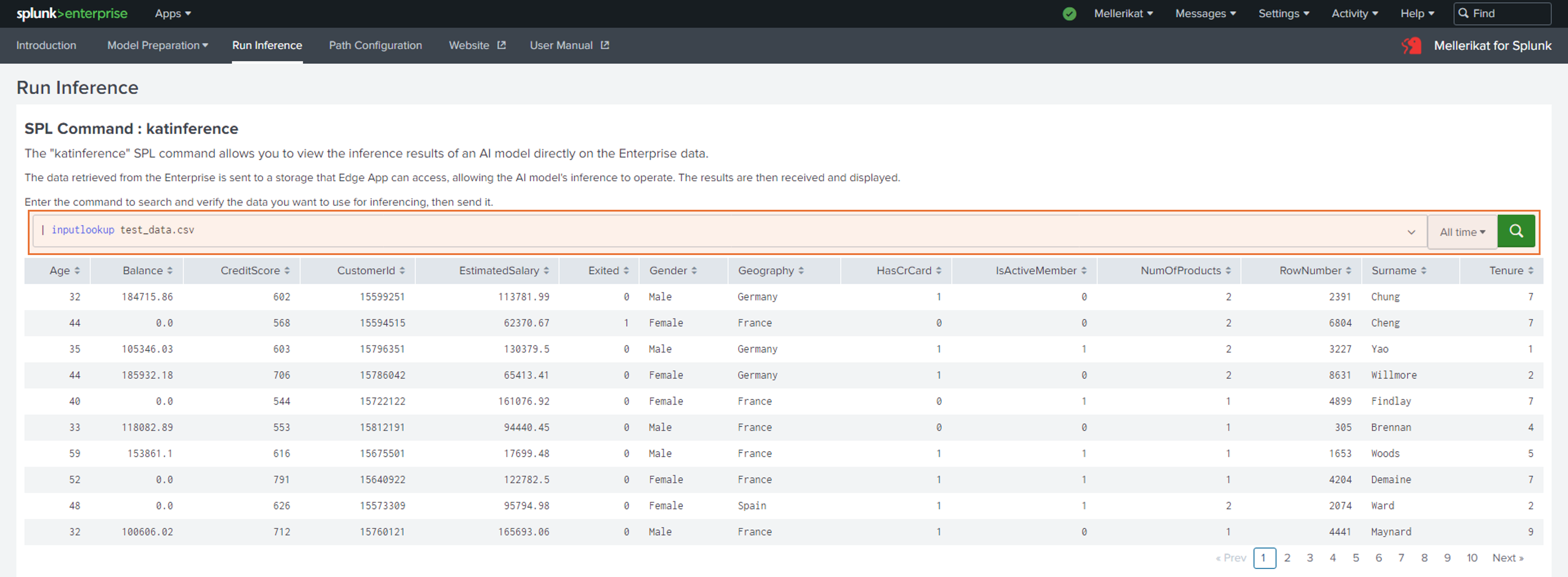

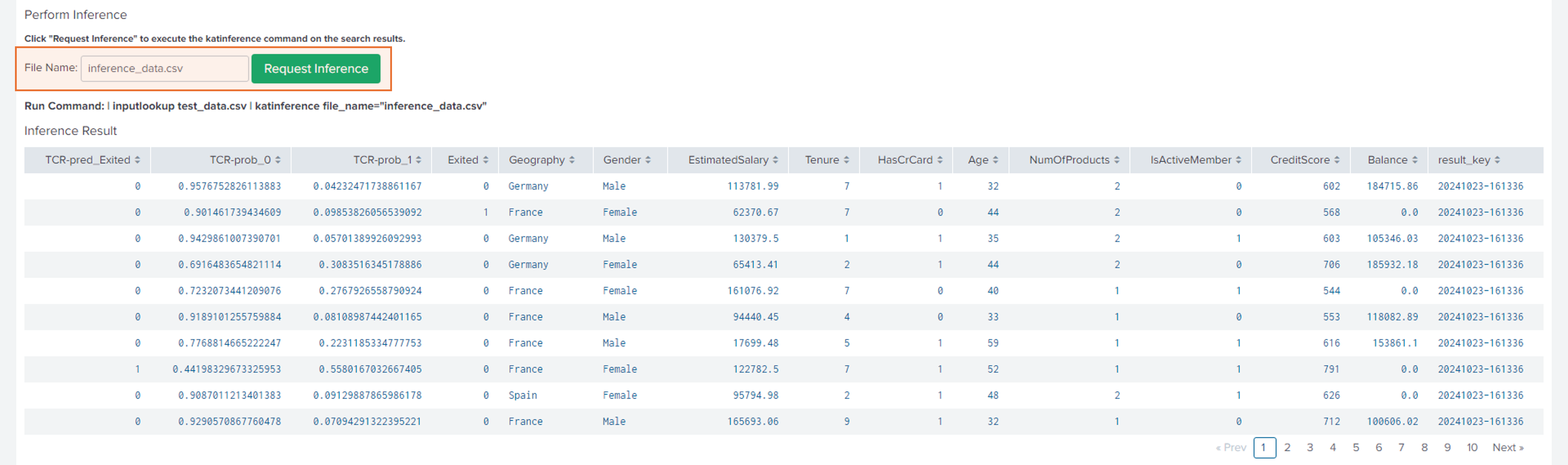

5. Perform Inference

- Go to the Inference tab and review the data to be used for inference.

- Click the Request Inference button to run inference and view the results.

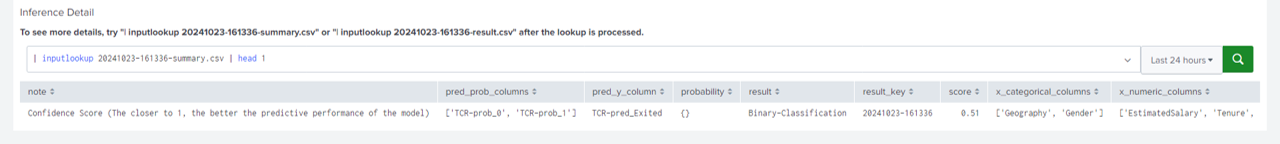

- Check the inference results in the Inference Detail section, which offers two views:

  - Overall summary

  - Individual results
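The two Inference Detail views can be illustrated with a minimal sketch. It assumes each inference result is a record with an ID and a predicted label. The overall summary counts records per label, and the individual view looks up a single record. The record format, the `normal` and `anomaly` labels, and the function names are all hypothetical and do not reflect the actual Inference Detail format.

```python
from collections import Counter
from typing import Dict, List

# Hypothetical per-record inference results.
results: List[Dict[str, str]] = [
    {"id": "1", "prediction": "normal"},
    {"id": "2", "prediction": "anomaly"},
    {"id": "3", "prediction": "normal"},
]


def summarize(records: List[Dict[str, str]]) -> Dict[str, int]:
    """Overall summary: number of records per predicted label."""
    return dict(Counter(r["prediction"] for r in records))


def individual(records: List[Dict[str, str]], record_id: str) -> Dict[str, str]:
    """Individual result: return the single record with the given ID."""
    return next(r for r in records if r["id"] == record_id)


print(summarize(results))        # {'normal': 2, 'anomaly': 1}
print(individual(results, "2"))  # {'id': '2', 'prediction': 'anomaly'}
```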