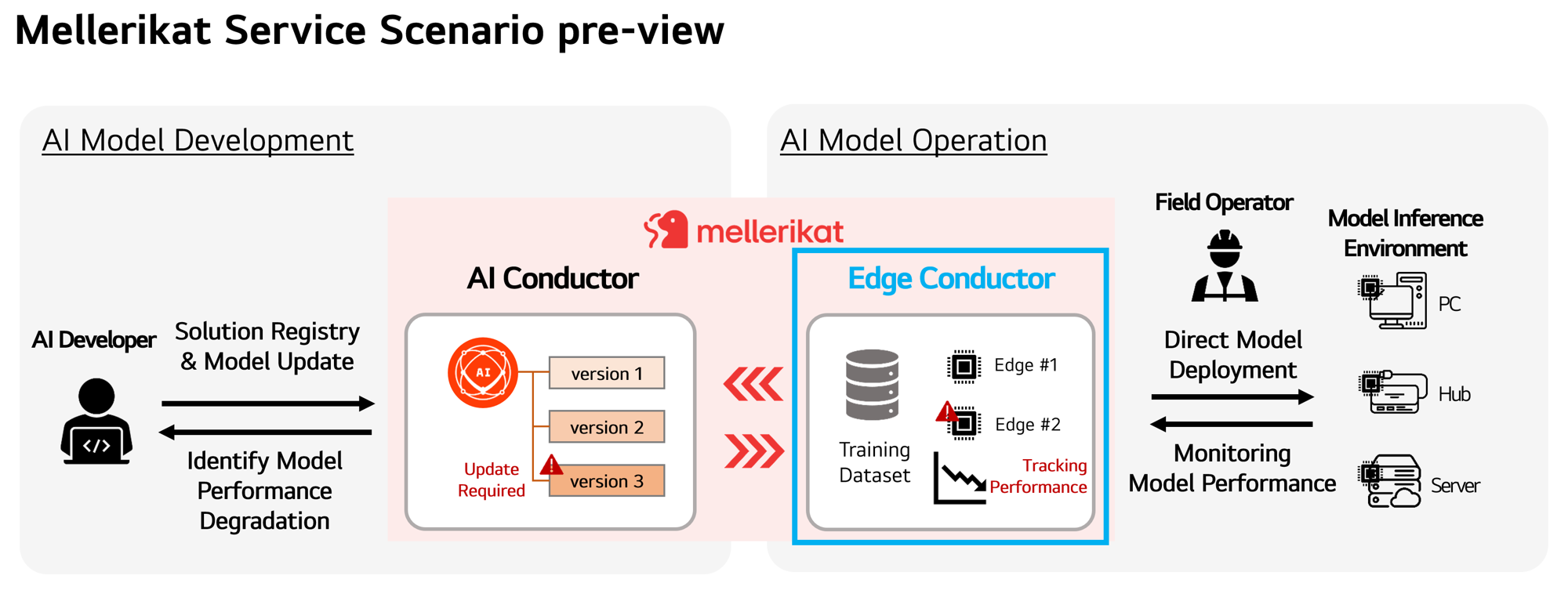

Edge Conductor

What is Edge Conductor?

Edge Conductor is a web-based service that enables integrated monitoring of inference results from deep learning models running on edge devices, and supports efficient maintenance of the models required for inference. It offers a wide range of functionalities including model deployment, training dataset management, model training requests, inference result monitoring, and data relabeling. Through Edge Conductor, users can deploy AI models to each edge device and collect, review, and manage inference results in real time.

Key Features

Edge Conductor helps improve operational efficiency by monitoring inference on edge devices and supporting training dataset management, model training, and deployment. Let’s explore its core features:

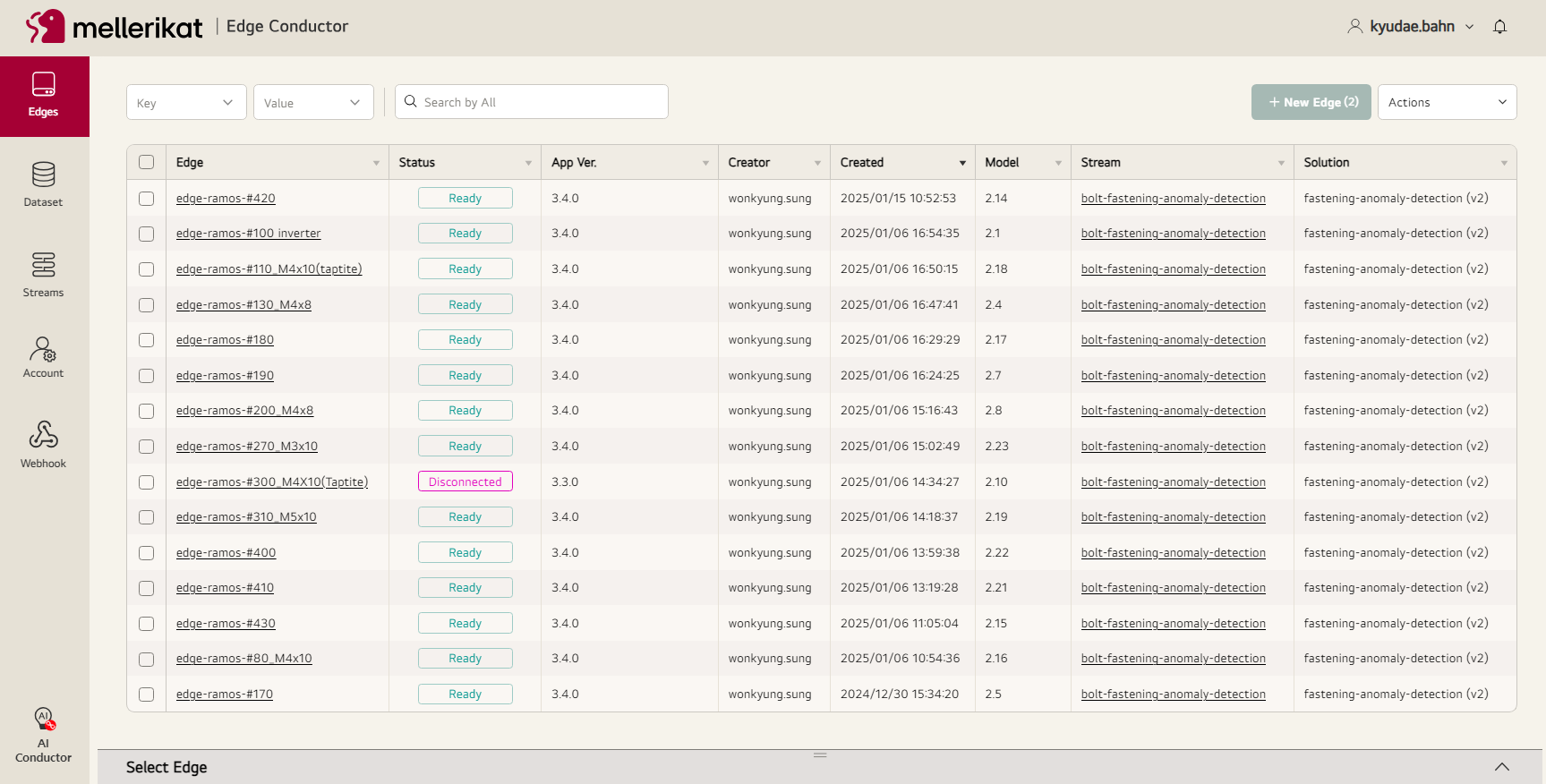

Integrated Edge Monitoring

Edge Conductor centrally manages and monitors the status and activity of multiple edges performing inference. It collects device data, inference performance metrics, and other operational information. Users can also review inference logs and failure history when needed.

Training Data Management

To achieve accurate inference, users can create and manage training datasets and use them to request model training. Datasets can be generated from various sources including edge-collected inference data, local files, or S3 storage. Support for additional sources is planned. For AI Solutions that support relabeling, users can redefine labels in the dataset using the built-in relabeling tool.
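
As a rough illustration of the idea, the following sketch models a dataset whose samples come from the three sources named above and supports a simple relabel operation. It is not Edge Conductor's API: the `Source`, `Sample`, and `Dataset` names, the `relabel` method, and the example URIs and labels are all hypothetical and exist only to show how relabeling changes a dataset before a training request.

```python
from dataclasses import dataclass, field
from enum import Enum


class Source(Enum):
    """Dataset sources named in the text; the enum itself is hypothetical."""
    EDGE_INFERENCE = "edge_inference"
    LOCAL_FILE = "local_file"
    S3 = "s3"


@dataclass
class Sample:
    uri: str        # illustrative location of the sample
    source: Source  # where the sample was collected from
    label: str      # current label, which relabeling may change


@dataclass
class Dataset:
    name: str
    samples: list[Sample] = field(default_factory=list)

    def relabel(self, uri: str, new_label: str) -> bool:
        """Replace the label of the sample with this uri; return True if found."""
        for sample in self.samples:
            if sample.uri == uri:
                sample.label = new_label
                return True
        return False

    def label_counts(self) -> dict[str, int]:
        """Count samples per label, useful for checking class balance."""
        counts: dict[str, int] = {}
        for sample in self.samples:
            counts[sample.label] = counts.get(sample.label, 0) + 1
        return counts


# Hypothetical dataset mixing all three sources.
dataset = Dataset("line-a-defects", [
    Sample("edge://cam-01/0001.png", Source.EDGE_INFERENCE, "ok"),
    Sample("file://uploads/0002.png", Source.LOCAL_FILE, "defect"),
    Sample("s3://bucket/0003.png", Source.S3, "ok"),
])

# An edge inference result was wrong, so it is relabeled before training.
dataset.relabel("edge://cam-01/0001.png", "defect")
print(dataset.label_counts())  # prints {'defect': 2, 'ok': 1}
```

In the actual service, relabeling is done through the built-in tool rather than in code; the sketch only makes the data flow concrete.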

Leverage a Variety of Solutions

Users can browse various supported AI Solutions from Mellerikat and choose the ones that suit their needs. Using a selected solution, they can create a stream, request model training via AI Conductor, and then deploy and manage the resulting model on edge devices.

Model Management and Deployment

Users can select training datasets and request updated models. They can check performance metrics for trained models and deploy them to edge devices to improve inference accuracy.

User Scenario

A typical workflow for Edge Conductor includes:

- Register Edge: Select and register the edge device to which the AI model will be deployed. Each edge can run only one AI model at a time.

- Prepare Dataset: Prepare the dataset for training. You can upload your own files or select data from cloud storage.

- Create Stream: Select an AI Solution instance and create a stream. Parameters can be configured within the allowed range during this step.

- Request Model Training: After creating the stream, select a dataset and request model training. The training is carried out by AI Conductor, and the trained model is saved to the stream.

- Deploy Model: Deploy the trained model to the edge. Models can be deployed to multiple edge devices simultaneously.

- Check Inference Results: Inference results from the edge are transmitted to Edge Conductor, where they can be monitored and reviewed.

- Create Dataset: Use collected inference results to create a new training dataset. If results are incorrect, relabel the data to improve dataset quality.

- Retrain and Redeploy: Retrain the AI model using the new dataset and deploy the updated model back to the edge.
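
The workflow above can be sketched as a small in-memory model. This is a simplified illustration under stated assumptions, not Edge Conductor's interface: the `Edge`, `Stream`, and `Model` classes, the `train` and `deploy` functions, and all identifiers such as `edge-01` are hypothetical. The sketch captures two rules from the workflow: an edge holds only one model at a time, and one model can be deployed to several edges in a single step.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Model:
    stream: str   # name of the stream that owns the model
    version: int  # increases with each training request
    dataset: str  # dataset the model was trained on


@dataclass
class Edge:
    edge_id: str
    model: Optional[Model] = None  # an edge runs only one model at a time


@dataclass
class Stream:
    name: str
    solution: str
    models: list[Model] = field(default_factory=list)

    def train(self, dataset: str) -> Model:
        """Stand-in for a training request; real training runs in AI Conductor."""
        model = Model(self.name, len(self.models) + 1, dataset)
        self.models.append(model)  # the trained model is saved to the stream
        return model


def deploy(model: Model, edges: list[Edge]) -> None:
    """Deploy one model to several edges, replacing each edge's current model."""
    for edge in edges:
        edge.model = model


# Register Edge, Create Stream, Request Model Training, Deploy Model.
edges = [Edge("edge-01"), Edge("edge-02")]
stream = Stream("defect-stream", solution="example-solution")
deploy(stream.train("initial-dataset"), edges)

# Create Dataset from reviewed results, then Retrain and Redeploy.
deploy(stream.train("relabeled-dataset"), edges)

print([(e.edge_id, e.model.version) for e in edges])
# prints [('edge-01', 2), ('edge-02', 2)]
```

Because each edge stores a single `model` reference, redeploying naturally replaces the previous model, while the stream keeps the full history of trained models.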